
阿新 • Published: 2021-03-03

## Designing and Implementing a Custom k8s Controller

### Creating the CRD

1. Log in to a machine where kubectl can be run and create student.yaml:

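```yaml
# Note: apiextensions.k8s.io/v1beta1 is only served by clusters older than Kubernetes 1.22
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  # metadata.name must be "<plural name>.<group name>"; here students is the plural name and bolingcavalry.k8s.io is the group name
  name: students.bolingcavalry.k8s.io
spec:
  # Group name, also used in the REST API path: /apis/<group name>/<CRD version>
  group: bolingcavalry.k8s.io
  # list of versions supported by this CustomResourceDefinition
  versions:
    - name: v1
      # Switch that enables or disables serving this version
      served: true
      # Only one version may be marked as the storage version
      storage: true
  # The resource is namespace-scoped
  scope: Namespaced
  names:
    # Plural name
    plural: students
    # Singular name
    singular: student
    # Kind name
    kind: Student
    # Short names, just as svc is the short name of service
    shortNames:
      - stu
```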

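2. In the directory containing student.yaml, run `kubectl apply -f student.yaml` to register the Student definition in the k8s cluster. From now on, the k8s API server recognizes requests that operate on objects of kind Student.

#### Creating a Student object

The previous steps taught k8s the Student type. Next we create a Student object.

1. Create the file object-student.yaml: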

```yaml
apiVersion: bolingcavalry.k8s.io/v1
kind: Student
metadata:
  name: object-student
spec:
  name: "張三"
  school: "深圳中學"
```

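2. In the directory containing object-student.yaml, run `kubectl apply -f object-student.yaml`; a message reports that the object was created.

3. Run `kubectl get stu` to see the Student object that was just created.

At this point the custom API object (the CRD) has been created. So far we have only made k8s recognize what a Student object is; creating a Student object does not trigger any business logic yet (by contrast, creating a Pod object causes the kubelet to create a docker container on a node).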
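The CRD comments mention that the group name shows up in the REST API as /apis/group/version. As a minimal sketch, the snippet below assembles the path under which a namespaced custom object is served. The helper name `resourcePath` and the `default` namespace are assumptions made for illustration; the group, version, plural name and object name come from the YAML files above.

```go
package main

import "fmt"

// resourcePath builds the REST path of a namespaced custom resource.
// The helper is hypothetical; it only illustrates the path layout
// /apis/<group>/<version>/namespaces/<namespace>/<plural>/<name>.
func resourcePath(group, version, namespace, plural, name string) string {
	return fmt.Sprintf("/apis/%s/%s/namespaces/%s/%s/%s",
		group, version, namespace, plural, name)
}

func main() {
	// Assumes the object was created in the "default" namespace.
	fmt.Println(resourcePath("bolingcavalry.k8s.io", "v1", "default", "students", "object-student"))
}
```

Assuming the object lives in the `default` namespace, this is the path that `kubectl get --raw` would accept for it.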

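### Generating code automatically

#### Why write a controller

Merely storing a Student object in etcd is of little use. Consider creating pods through a deployment: if the pod object were only written to etcd and no container were created on a node, the pod object would be just a record with no real effect. The same holds for other objects such as service and pv.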

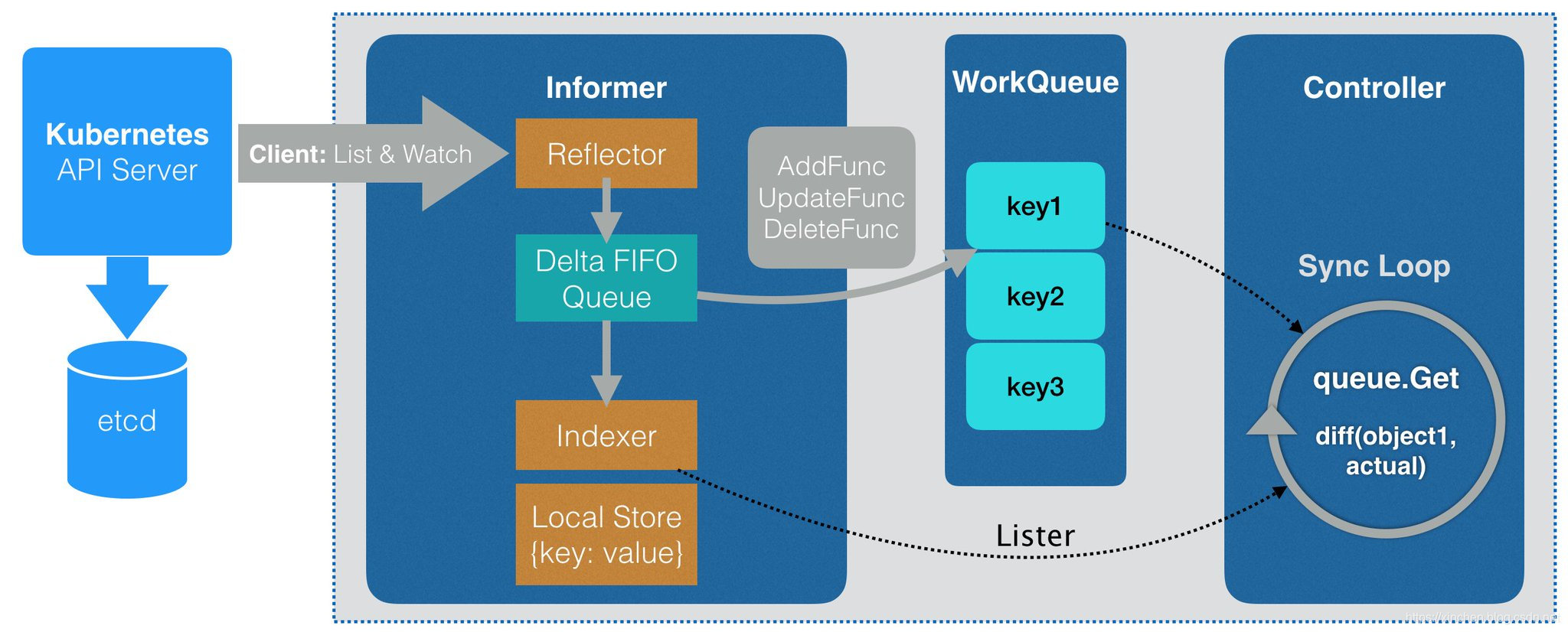

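A controller watches a given kind of object for changes such as additions, deletions and updates, and reacts to each change (for example, the reaction to a new pod is to create a docker container).

As the figure above shows, changes to API objects are put on a queue (WorkQueue) by the Informer; the Controller consumes the queue and reacts. The code that implements this reaction is the actual business logic we have to write.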
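The flow described above can be sketched with nothing but the Go standard library. This is a simplified stand-in, not client-go: a buffered channel plays the role of the WorkQueue, the producer loop plays the Informer, and a single goroutine plays the controller worker. The names `event` and `runPipeline` are made up for this sketch, and the de-duplication and rate limiting of the real workqueue are not modelled.

```go
package main

import (
	"fmt"
	"sync"
)

// event is a simplified stand-in for a change notification from the API server.
type event struct {
	kind string // "add", "update" or "delete"
	key  string // "namespace/name" of the changed object
}

// runPipeline puts the key of every event on a queue (the informer's job)
// and lets one worker drain the queue (the controller's job). It returns
// the keys in the order in which the worker handled them.
func runPipeline(events []event) []string {
	queue := make(chan string, len(events))
	var handled []string
	var wg sync.WaitGroup

	wg.Add(1)
	go func() { // the controller worker
		defer wg.Done()
		for key := range queue {
			handled = append(handled, key) // business logic would go here
		}
	}()

	for _, e := range events { // the informer side
		queue <- e.key
	}
	close(queue)
	wg.Wait()
	return handled
}

func main() {
	keys := runPipeline([]event{
		{kind: "add", key: "default/object-student"},
		{kind: "delete", key: "default/object-student"},
	})
	fmt.Println(keys)
}
```

Only the key travels through the queue, not the object itself; the worker is expected to look up the current state of the object when it handles the key.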

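#### What the generated code is

As the figure above shows, the overall flow is fairly involved. To simplify custom controller development, the k8s maintainers provide code generation tools that take care of everything outside the controller, so we only need to focus on the controller itself.

#### Hands-on

Next we write the declaration and definition code for the Student API object, then let the code generator use it to produce the Client, Informer and Lister code (the WorkQueue itself comes from the client-go library).

1. Create a folder named k8s_customize_controller under $GOPATH/src

2. Enter the folder and run the following command to create three levels of directories:

```bash
mkdir -p pkg/apis/bolingcavalry
```

3. In the new bolingcavalry directory, create the file register.go: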

```go
package bolingcavalry

const (
	GroupName = "bolingcavalry.k8s.io"
	Version   = "v1"
)
```

4. In the new bolingcavalry directory, create a folder named v1

5. In the v1 folder, create the file doc.go:

```go
// +k8s:deepcopy-gen=package
// +groupName=bolingcavalry.k8s.io

package v1
```

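The two comment lines in the code above are both consumed by the code generator: one requests DeepCopy methods for every type defined in the v1 package, the other declares the API group name of this package, which must match the group name in the CRD.

6. In the v1 folder, create the file types.go, which defines the contents of the Student object: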

```go
package v1

import (
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
)

// +genclient
// +genclient:noStatus
// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object
type Student struct {
	metav1.TypeMeta   `json:",inline"`
	metav1.ObjectMeta `json:"metadata,omitempty"`
	Spec              StudentSpec `json:"spec"`
}

// StudentSpec holds the user-defined fields of a Student.
// The fields must be exported, otherwise encoding/json skips them.
type StudentSpec struct {
	Name   string `json:"name"`
	School string `json:"school"`
}

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

// StudentList is a list of Student resources
type StudentList struct {
	metav1.TypeMeta `json:",inline"`
	metav1.ListMeta `json:"metadata"`

	Items []Student `json:"items"`
}
```

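As the source above shows, the contents of a Student object are now fixed: the spec has two fields, serialized as name and school, holding the student's name and school. Any Student object that is created must match this layout.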
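To make the mapping between the YAML spec and the Go type concrete, here is a small standalone sketch. It uses a local stand-in type `studentSpec` and a hypothetical helper `decodeSpec` rather than the real package, and feeds it the JSON form of the spec from object-student.yaml. Note that encoding/json only reads and writes exported struct fields, which is why the fields are capitalized while the JSON keys stay lowercase.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// studentSpec is a local stand-in for the spec type in types.go.
type studentSpec struct {
	Name   string `json:"name"`
	School string `json:"school"`
}

// decodeSpec parses the JSON form of a Student spec. Hypothetical helper.
func decodeSpec(raw string) (studentSpec, error) {
	var s studentSpec
	err := json.Unmarshal([]byte(raw), &s)
	return s, err
}

func main() {
	s, err := decodeSpec(`{"name":"張三","school":"深圳中學"}`)
	if err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	fmt.Println(s.Name, s.School)
}
```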

7. In the v1 directory, create the file register.go. Its addKnownTypes function makes the Student API types known to the client:

```go
package v1

import (
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/apimachinery/pkg/runtime"
	"k8s.io/apimachinery/pkg/runtime/schema"

	"k8s_customize_controller/pkg/apis/bolingcavalry"
)

var SchemeGroupVersion = schema.GroupVersion{
	Group:   bolingcavalry.GroupName,
	Version: bolingcavalry.Version,
}

var (
	SchemeBuilder = runtime.NewSchemeBuilder(addKnownTypes)
	AddToScheme   = SchemeBuilder.AddToScheme
)

func Resource(resource string) schema.GroupResource {
	return SchemeGroupVersion.WithResource(resource).GroupResource()
}

func Kind(kind string) schema.GroupKind {
	return SchemeGroupVersion.WithKind(kind).GroupKind()
}

func addKnownTypes(scheme *runtime.Scheme) error {
	scheme.AddKnownTypes(
		SchemeGroupVersion,
		&Student{},
		&StudentList{},
	)

	// register the type in the scheme
	metav1.AddToGroupVersion(scheme, SchemeGroupVersion)
	return nil
}
```

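8. The preparation for code generation is now complete

9. Run the following commands. They first download the dependency packages, then the code generator, and finally run the code generation: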

```bash
cd $GOPATH/src \
&& go get -u -v k8s.io/apimachinery/pkg/apis/meta/v1 \
&& go get -u -v k8s.io/code-generator/... \
&& cd $GOPATH/src/k8s.io/code-generator \
&& ./generate-groups.sh all \
k8s_customize_controller/pkg/client \
k8s_customize_controller/pkg/apis \
bolingcavalry:v1

# If installing code-generator fails (for example because of network problems),
# download the code manually and then run the command above again
git clone https://github.com/kubernetes/code-generator
./generate-groups.sh all "$ROOT_PACKAGE/pkg/client" "$ROOT_PACKAGE/pkg/apis" "$CUSTOM_RESOURCE_NAME:$CUSTOM_RESOURCE_VERSION"
```

10. If the code is fine, you will see the following output:

```bash
Generating deepcopy funcs
Generating clientset for bolingcavalry:v1 at k8s_customize_controller/pkg/client/clientset
Generating listers for bolingcavalry:v1 at k8s_customize_controller/pkg/client/listers
Generating informers for bolingcavalry:v1 at k8s_customize_controller/pkg/client/informers
```

11. Now run the tree command in $GOPATH/src/k8s_customize_controller; a lot of content has been generated:

```bash
[root@master k8s_customize_controller]# tree
.
└── pkg
    ├── apis
    │   └── bolingcavalry
    │       ├── register.go
    │       └── v1
    │           ├── doc.go
    │           ├── register.go
    │           ├── types.go
    │           └── zz_generated.deepcopy.go
    └── client
        ├── clientset
        │   └── versioned
        │       ├── clientset.go
        │       ├── doc.go
        │       ├── fake
        │       │   ├── clientset_generated.go
        │       │   ├── doc.go
        │       │   └── register.go
        │       ├── scheme
        │       │   ├── doc.go
        │       │   └── register.go
        │       └── typed
        │           └── bolingcavalry
        │               └── v1
        │                   ├── bolingcavalry_client.go
        │                   ├── doc.go
        │                   ├── fake
        │                   │   ├── doc.go
        │                   │   ├── fake_bolingcavalry_client.go
        │                   │   └── fake_student.go
        │                   ├── generated_expansion.go
        │                   └── student.go
        ├── informers
        │   └── externalversions
        │       ├── bolingcavalry
        │       │   ├── interface.go
        │       │   └── v1
        │       │       ├── interface.go
        │       │       └── student.go
        │       ├── factory.go
        │       ├── generic.go
        │       └── internalinterfaces
        │           └── factory_interfaces.go
        └── listers
            └── bolingcavalry
                └── v1
                    ├── expansion_generated.go
                    └── student.go

21 directories, 27 files
```

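As shown above, zz_generated.deepcopy.go is the file containing the DeepCopy code, and everything under the client directory is client-side code that will be used when developing the controller.

The roles of clientset, informers and listers under the client directory are easiest to understand alongside the figure shown earlier.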

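### Writing the controller code

We can now watch add, delete and update events on Student objects. The next step is to act on these events to meet our own business requirements.

1. The first Go file to write is controller.go; create it in the k8s_customize_controller directory: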

```go
package main

import (
	"fmt"
	"time"

	"github.com/golang/glog"
	corev1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/api/errors"
	utilruntime "k8s.io/apimachinery/pkg/util/runtime"
	"k8s.io/apimachinery/pkg/util/wait"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/kubernetes/scheme"
	typedcorev1 "k8s.io/client-go/kubernetes/typed/core/v1"
	"k8s.io/client-go/tools/cache"
	"k8s.io/client-go/tools/record"
	"k8s.io/client-go/util/workqueue"

	// Import paths match the project created under $GOPATH/src/k8s_customize_controller
	bolingcavalryv1 "k8s_customize_controller/pkg/apis/bolingcavalry/v1"
	clientset "k8s_customize_controller/pkg/client/clientset/versioned"
	studentscheme "k8s_customize_controller/pkg/client/clientset/versioned/scheme"
	informers "k8s_customize_controller/pkg/client/informers/externalversions/bolingcavalry/v1"
	listers "k8s_customize_controller/pkg/client/listers/bolingcavalry/v1"
)

const controllerAgentName = "student-controller"

const (
	SuccessSynced = "Synced"

	MessageResourceSynced = "Student synced successfully"
)

// Controller is the controller implementation for Student resources
type Controller struct {
	// kubeclientset is a standard kubernetes clientset
	kubeclientset kubernetes.Interface
	// studentclientset is a clientset for our own API group
	studentclientset clientset.Interface

	studentsLister listers.StudentLister
	studentsSynced cache.InformerSynced

	workqueue workqueue.RateLimitingInterface

	recorder record.EventRecorder
}

// NewController returns a new student controller
func NewController(
	kubeclientset kubernetes.Interface,
	studentclientset clientset.Interface,
	studentInformer informers.StudentInformer) *Controller {

	utilruntime.Must(studentscheme.AddToScheme(scheme.Scheme))
	glog.V(4).Info("Creating event broadcaster")
	eventBroadcaster := record.NewBroadcaster()
	eventBroadcaster.StartLogging(glog.Infof)
	eventBroadcaster.StartRecordingToSink(&typedcorev1.EventSinkImpl{Interface: kubeclientset.CoreV1().Events("")})
	recorder := eventBroadcaster.NewRecorder(scheme.Scheme, corev1.EventSource{Component: controllerAgentName})

	controller := &Controller{
		kubeclientset:    kubeclientset,
		studentclientset: studentclientset,
		studentsLister:   studentInformer.Lister(),
		studentsSynced:   studentInformer.Informer().HasSynced,
		workqueue:        workqueue.NewNamedRateLimitingQueue(workqueue.DefaultControllerRateLimiter(), "Students"),
		recorder:         recorder,
	}

	glog.Info("Setting up event handlers")
	// Set up an event handler for when Student resources change
	studentInformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{
		AddFunc: controller.enqueueStudent,
		UpdateFunc: func(old, new interface{}) {
			oldStudent := old.(*bolingcavalryv1.Student)
			newStudent := new.(*bolingcavalryv1.Student)
			if oldStudent.ResourceVersion == newStudent.ResourceVersion {
				// Same resource version means nothing actually changed, so return immediately
				return
			}
			controller.enqueueStudent(new)
		},
		DeleteFunc: controller.enqueueStudentForDelete,
	})

	return controller
}

// Run starts the controller's work
func (c *Controller) Run(threadiness int, stopCh <-chan struct{}) error {
	defer utilruntime.HandleCrash()
	defer c.workqueue.ShutDown()

	glog.Info("Starting controller, waiting for the informer cache to sync")
	if ok := cache.WaitForCacheSync(stopCh, c.studentsSynced); !ok {
		return fmt.Errorf("failed to wait for caches to sync")
	}

	glog.Info("Starting workers")
	for i := 0; i < threadiness; i++ {
		go wait.Until(c.runWorker, time.Second, stopCh)
	}

	glog.Info("Workers started")
	<-stopCh
	glog.Info("Workers stopped")

	return nil
}

func (c *Controller) runWorker() {
	for c.processNextWorkItem() {
	}
}

// processNextWorkItem takes one item off the workqueue and handles it
func (c *Controller) processNextWorkItem() bool {
	obj, shutdown := c.workqueue.Get()

	if shutdown {
		return false
	}

	// We wrap this block in a func so we can defer c.workqueue.Done.
	err := func(obj interface{}) error {
		defer c.workqueue.Done(obj)
		var key string
		var ok bool

		if key, ok = obj.(string); !ok {
			c.workqueue.Forget(obj)
			utilruntime.HandleError(fmt.Errorf("expected string in workqueue but got %#v", obj))
			return nil
		}
		// The business logic lives in syncHandler
		if err := c.syncHandler(key); err != nil {
			return fmt.Errorf("error syncing '%s': %s", key, err.Error())
		}

		c.workqueue.Forget(obj)
		glog.Infof("Successfully synced '%s'", key)
		return nil
	}(obj)

	if err != nil {
		utilruntime.HandleError(err)
		return true
	}

	return true
}

// syncHandler handles a single key
func (c *Controller) syncHandler(key string) error {
	// Convert the namespace/name string into a distinct namespace and name
	namespace, name, err := cache.SplitMetaNamespaceKey(key)
	if err != nil {
		utilruntime.HandleError(fmt.Errorf("invalid resource key: %s", key))
		return nil
	}

	// Fetch the object from the local cache
	student, err := c.studentsLister.Students(namespace).Get(name)
	if err != nil {
		// We end up here when the Student object has been deleted,
		// so the deletion logic belongs here
		if errors.IsNotFound(err) {
			glog.Infof("Student object was deleted, run the actual deletion logic here: %s/%s ...", namespace, name)
			return nil
		}

		utilruntime.HandleError(fmt.Errorf("failed to list student by: %s/%s", namespace, name))
		return err
	}

	glog.Infof("This is the desired state of the student object: %#v ...", student)
	glog.Infof("The actual state comes from the business layer: fetch it here, compare it with the desired state and react to the difference (create or delete)")

	c.recorder.Event(student, corev1.EventTypeNormal, SuccessSynced, MessageResourceSynced)
	return nil
}

// enqueueStudent computes the key of an added or updated object and puts it on the workqueue
func (c *Controller) enqueueStudent(obj interface{}) {
	var key string
	var err error
	// Compute the namespace/name key of the object
	if key, err = cache.MetaNamespaceKeyFunc(obj); err != nil {
		utilruntime.HandleError(err)
		return
	}

	// Put the key on the workqueue
	c.workqueue.AddRateLimited(key)
}

// enqueueStudentForDelete handles delete events
func (c *Controller) enqueueStudentForDelete(obj interface{}) {
	var key string
	var err error
	// Compute the key; this variant also handles DeletedFinalStateUnknown tombstones
	key, err = cache.DeletionHandlingMetaNamespaceKeyFunc(obj)
	if err != nil {
		utilruntime.HandleError(err)
		return
	}
	// Then put the key on the workqueue
	c.workqueue.AddRateLimited(key)
}
```

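The code above has the following key points:

a. NewController, which creates the controller, defines what happens when add, update and delete notifications for Student objects arrive: the informer keeps the local cache in sync, and the handlers put the object's key on the workqueue;

b. The method that actually processes queue items is syncHandler. This is where the real business code goes, reacting to additions, deletions and updates of Student objects to achieve the business goal;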
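The key that travels through the workqueue is a plain "namespace/name" string. The sketch below mirrors the shape of syncHandler without any Kubernetes dependency: `splitKey` is a simplified stand-in for `cache.SplitMetaNamespaceKey`, a map plays the role of the lister's local cache, and `syncOne` is a hypothetical name chosen for this illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// splitKey is a simplified stand-in for cache.SplitMetaNamespaceKey:
// "name" is treated as cluster-scoped, "namespace/name" as namespaced.
func splitKey(key string) (namespace, name string, err error) {
	parts := strings.Split(key, "/")
	switch len(parts) {
	case 1:
		return "", parts[0], nil
	case 2:
		return parts[0], parts[1], nil
	}
	return "", "", fmt.Errorf("unexpected key format: %q", key)
}

// syncOne mimics syncHandler: split the key, look the object up in a local
// cache (here a map from key to school), and branch on whether it exists.
func syncOne(store map[string]string, key string) (string, error) {
	namespace, name, err := splitKey(key)
	if err != nil {
		return "", err
	}
	school, found := store[key]
	if !found {
		// The object is gone: deletion logic would run here.
		return fmt.Sprintf("deleted %s/%s", namespace, name), nil
	}
	// The object exists: compare desired and actual state here.
	return fmt.Sprintf("synced %s/%s (%s)", namespace, name, school), nil
}

func main() {
	store := map[string]string{"default/object-student": "深圳中學"}
	for _, key := range []string{"default/object-student", "default/gone"} {
		result, err := syncOne(store, key)
		fmt.Println(result, err)
	}
}
```

Because add, update and delete all enqueue the same kind of key, the handler cannot tell which event produced it; it decides what to do purely from whether the object is still present in the cache.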

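2. Next comes main.go. Before that, write the helper package for handling OS signals, which main.go will use (for example to exit on ctrl+c). Create a directory named signals under $GOPATH/src/k8s_customize_controller/pkg;

3. In the signals directory, create the file signal_posix.go: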

```go
// +build !windows

package signals

import (
	"os"
	"syscall"
)

var shutdownSignals = []os.Signal{os.Interrupt, syscall.SIGTERM}
```

4. In the signals directory, create the file signal_windows.go:

```go
package signals

import (
	"os"
)

var shutdownSignals = []os.Signal{os.Interrupt}
```

5. In the signals directory, create the file signal.go:

```go
package signals

import (
	"os"
	"os/signal"
)

var onlyOneSignalHandler = make(chan struct{})

func SetupSignalHandler() (stopCh <-chan struct{}) {
	close(onlyOneSignalHandler) // panics when called twice

	stop := make(chan struct{})
	c := make(chan os.Signal, 2)
	signal.Notify(c, shutdownSignals...)
	go func() {
		<-c
		close(stop)
		<-c
		os.Exit(1) // second signal. Exit directly.
	}()

	return stop
}
```

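6. Now write main.go. Create the file main.go in the k8s_customize_controller directory with the following content; the key places are commented, so no further explanation is given here: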

```go
package main

import (
	"flag"
	"time"

	"github.com/golang/glog"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/tools/clientcmd"
	// Uncomment the following line to load the gcp plugin (only required to authenticate against GKE clusters).
	// _ "k8s.io/client-go/plugin/pkg/client/auth/gcp"

	clientset "k8s_customize_controller/pkg/client/clientset/versioned"
	informers "k8s_customize_controller/pkg/client/informers/externalversions"
	"k8s_customize_controller/pkg/signals"
)

var (
	masterURL  string
	kubeconfig string
)

func main() {
	flag.Parse()

	// Set up OS signal handling
	stopCh := signals.SetupSignalHandler()

	// Build the client configuration from the command-line arguments
	cfg, err := clientcmd.BuildConfigFromFlags(masterURL, kubeconfig)
	if err != nil {
		glog.Fatalf("Error building kubeconfig: %s", err.Error())
	}

	kubeClient, err := kubernetes.NewForConfig(cfg)
	if err != nil {
		glog.Fatalf("Error building kubernetes clientset: %s", err.Error())
	}

	studentClient, err := clientset.NewForConfig(cfg)
	if err != nil {
		glog.Fatalf("Error building example clientset: %s", err.Error())
	}

	studentInformerFactory := informers.NewSharedInformerFactory(studentClient, time.Second*30)

	// Create the controller
	controller := NewController(kubeClient, studentClient,
		studentInformerFactory.Bolingcavalry().V1().Students())

	// Start the informer
	go studentInformerFactory.Start(stopCh)

	// Let the controller start processing queue items
	if err = controller.Run(2, stopCh); err != nil {
		glog.Fatalf("Error running controller: %s", err.Error())
	}
}

func init() {
	flag.StringVar(&kubeconfig, "kubeconfig", "", "Path to a kubeconfig. Only required if out-of-cluster.")
	flag.StringVar(&masterURL, "master", "", "The address of the Kubernetes API server. Overrides any value in kubeconfig. Only required if out-of-cluster.")
}
```

All the code is now written; next comes compiling and building.

### Building and starting

1. In the $GOPATH/src/k8s_customize_controller directory, run the following commands:

```bash
go get k8s.io/client-go/kubernetes/scheme \
&& go get github.com/golang/glog \
&& go get k8s.io/kube-openapi/pkg/util/proto \
&& go get k8s.io/utils/buffer \
&& go get k8s.io/utils/integer \
&& go get k8s.io/utils/trace
```

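2. The script above fetches the libraries needed for compilation with go get, which is the clumsy way. A better approach is to use a dependency management tool; see the official k8s demo, which offers both godep and vendor to solve the dependency problem above. Its address is: https

3. Once the dependencies are resolved, run `go build` in the $GOPATH/src/k8s_customize_controller directory to produce the file k8s_customize_controller in the current directory;

4. Copy the file k8s_customize_controller to the k8s environment, and remember to make it executable with `chmod a+x`;

5. Run `./k8s_customize_controller -kubeconfig=$HOME/.kube/config -alsologtostderr=true`; the controller starts immediately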

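### Summary

To recap the whole process of developing a custom controller:

1. Create the CRD (Custom Resource Definition) so that k8s understands our custom API object;

2. Write code that describes the CRD, then use the code generation tools to produce the fairly fixed code outside the controller, such as the informer and the client;

3. Write the controller, which checks whether the actual state matches the state declared by the API object and, if not, carries out the real business processing. This is the general pattern for every controller;

4. The actual coding is not complicated; the hand-written files are: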

```bash
├── controller.go
├── main.go
└── pkg
    ├── apis
    │   └── bolingcavalry
    │       ├── register.go
    │       └── v1
    │           ├── doc.go
    │           ├── register.go
    │           └── types.go
    └── signals
        ├── signal.go
        ├── signal_posix.go
        └── signal_windows.go
```
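The "compare declared state with actual state" step from point 3 can be illustrated with a small standalone sketch. It is not part of the controller in this article: the function `reconcile`, the action strings and the sample data are all assumptions made up for illustration, with both states modelled as maps from student name to school.

```go
package main

import (
	"fmt"
	"sort"
)

// reconcile compares the declared (desired) students with what actually
// exists and returns the actions needed to make the two match.
// Both maps go from student name to school.
func reconcile(desired, actual map[string]string) []string {
	var actions []string
	for name, school := range desired {
		current, exists := actual[name]
		switch {
		case !exists:
			actions = append(actions, "create "+name)
		case current != school:
			actions = append(actions, "update "+name)
		}
	}
	for name := range actual {
		if _, wanted := desired[name]; !wanted {
			actions = append(actions, "delete "+name)
		}
	}
	sort.Strings(actions) // map iteration order is random; sort for stable output
	return actions
}

func main() {
	desired := map[string]string{"alice": "school-a", "bob": "school-b"}
	actual := map[string]string{"bob": "school-x", "carol": "school-c"}
	fmt.Println(reconcile(desired, actual)) // prints [create alice delete carol update bob]
}
```

In a real controller the desired side would come from the lister and the actual side from whatever system the business logic manages.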

Original article: https://blog.csdn.net/boling_cavalry/article/details/