Kubernetes Source Code Analysis: Ingress (Part 1)

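Kubernetes usually exposes services externally in one of three ways: NodePort, LoadBalancer, and Ingress. NodePort is easy to understand: it opens a service port on every host and exposes it, with the drawback that it wastes ports. LoadBalancer currently works well only on GCE; it hooks into the cloud platform's load balancer outside the cluster. Finally there is Ingress, introduced in Kubernetes 1.2, which forwards traffic by running a software load balancer (Nginx, HAProxy) on a compute node.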

In this first part I will cover installation and usage.

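First, start a backend service:

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: default-http

Because I have only a few machines, I pinned this backend service to a specific node. It listens on port 80 and serves as the default backend. To verify it:

    curl 10.254.12.58:80
    default backend - 404

Next comes the ingress controller, which is essentially a load balancer configurator: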

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: nginx-ingress-controller
      labels:
        k8s-app: nginx-ingress-controller
      namespace: kube-system
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            k8s-app: nginx-ingress-controller
          annotations:
            prometheus.io/port: '10254'
            prometheus.io/scrape: 'true'
        spec:
          terminationGracePeriodSeconds: 60
          nodeName: slave1
          containers:
          - image: docker.io/chancefocus/nginx-ingress-controller
            imagePullPolicy: IfNotPresent
            name: nginx-ingress-controller
            readinessProbe:
              httpGet:
                path: /healthz
                port: 10254
                scheme: HTTP
            livenessProbe:
              httpGet:
                path: /healthz
                port: 10254
                scheme: HTTP
              initialDelaySeconds: 10
              timeoutSeconds: 1
            ports:
            - containerPort: 80
              hostPort: 80
            - containerPort: 443
              hostPort: 443
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            args:
            - /nginx-ingress-controller
            - --default-backend-service=kube-system/default-http-backend
            - --apiserver-host=http://10.39.0.6:8080

The ingress controller watches Kubernetes resources and updates the load-balancing rules to match. With it running, we can create a load-balancing rule:

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: test
      namespace: kube-system
      annotations:
        ingress.kubernetes.io/force-ssl-redirect: "false"
        ingress.kubernetes.io/ssl-redirect: "false"
    spec:
      rules:
      - http:
          paths:
          - path: /api
            backend:
              serviceName: heapster
              servicePort: 80

This forwards requests for /api to the heapster service. At the same level as http there is one more field, `- host: xx.xxx.xxx`, which specifies the domain name the rule applies to.
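To make the rule semantics concrete, here is a minimal Python sketch of how a request could be mapped to a backend. It is a simplified model, not the controller's actual code: the `Rule` class and `pick_backend` function are hypothetical names, it only handles exact host matches and longest-prefix path matches, and it assumes unmatched requests fall through to the `default-http-backend` service on port 80 mentioned earlier.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Rule:
    """One path entry of an Ingress rule (hypothetical, simplified)."""
    path: str
    service: str
    port: int
    host: Optional[str] = None  # None means the rule applies to any host


# Assumption: name and port of the default backend, taken from the
# --default-backend-service argument and the text above.
DEFAULT_BACKEND = ("default-http-backend", 80)


def pick_backend(rules: List[Rule], host: str, path: str) -> Tuple[str, int]:
    """Return (service, port), preferring the longest matching path prefix."""
    candidates = [
        r for r in rules
        if (r.host is None or r.host == host) and path.startswith(r.path)
    ]
    if not candidates:
        return DEFAULT_BACKEND
    best = max(candidates, key=lambda r: len(r.path))
    return (best.service, best.port)


rules = [Rule(path="/api", service="heapster", port=80)]
print(pick_backend(rules, "10.39.0.17", "/api/v1/model"))
print(pick_backend(rules, "10.39.0.17", "/other"))
```

The first call resolves to heapster because the path starts with /api; the second matches no rule and falls back to the default backend, which is what returns the "default backend - 404" response seen earlier.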

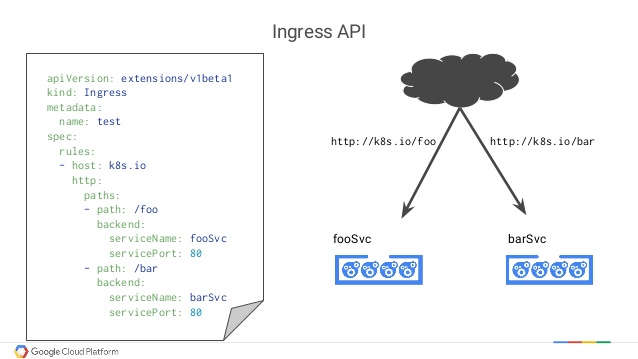

下面是來自google的解說圖片

Now we can test it.

First confirm that the service itself is working. 10.254.51.153 is the clusterIP and 192.168.90.6 is the container IP.

    curl http://10.254.51.153/api/v1/model/namespaces/default/pods/busybox-1865195333-zkwtt/containers/busybox/metrics/cpu/usage
    {
      "metrics": [
        {
          "timestamp": "2017-04-20T02:20:00Z",
          "value": 7412767
        },
        {
          "timestamp": "2017-04-20T02:21:00Z",
          "value": 7412767
        },
        {
          "timestamp": "2017-04-20T02:22:00Z",
          "value": 7412767
        }
      ],
      "latestTimestamp": "2017-04-20T02:22:00Z"
    }

    curl http://192.168.90.6:8082/api/v1/model/namespaces/default/pods/busybox-1865195333-zkwtt/containers/busybox/metrics/cpu/usage
    {
      "metrics": [
        {
          "timestamp": "2017-04-20T02:44:00Z",
          "value": 8960064
        },
        {
          "timestamp": "2017-04-20T02:45:00Z",
          "value": 8960064
        },
        {
          "timestamp": "2017-04-20T02:46:00Z",
          "value": 8960064
        }
      ],
      "latestTimestamp": "2017-04-20T02:46:00Z"
    }

After that, the service can be reached from any machine through port 80 on slave1:

    curl http://10.39.0.17/api/v1/model/namespaces/default/pods/busybox-1865195333-zkwtt/containers/busybox/metrics/cpu/usage
    {
      "metrics": [
        {
          "timestamp": "2017-04-20T02:42:00Z",
          "value": 8960064
        },
        {
          "timestamp": "2017-04-20T02:43:00Z",
          "value": 8960064
        }
      ],
      "latestTimestamp": "2017-04-20T02:43:00Z"
    }

This way port 80 can be shared by multiple services. Now go into the container and look at the nginx.conf file:

    upstream kube-system-heapster-80 {
        least_conn;
        server 192.168.90.6:8082 max_fails=0 fail_timeout=0;
    }

    upstream upstream-default-backend {
        least_conn;
        server 192.168.90.2:8080 max_fails=0 fail_timeout=0;
    }

    location /api {
        set $proxy_upstream_name "kube-system-heapster-80";

    location / {
        set $proxy_upstream_name "upstream-default-backend";

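As you can see, the controller simply rewrites the Nginx configuration to forward traffic to the service. That covers basic installation and usage. One detail worth noting is why the upstream points at the container address rather than the service address. There are two main reasons. First, an extra forwarding hop reduces efficiency. Second, session persistence: going through the service would round-robin requests across pods, so a session could not be kept on one backend. The following posts will walk through the code in detail.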
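The idea of writing pod endpoints straight into the upstream block can be sketched in a few lines of Python. This is only an illustration of the output format shown in the nginx.conf excerpt above, not the controller's real template logic; `upstream_name` and `render_upstream` are hypothetical helpers, and the naming scheme `namespace-service-port` is inferred from the `kube-system-heapster-80` example.

```python
from typing import List, Tuple


def upstream_name(namespace: str, service: str, port: int) -> str:
    """Build an upstream name in the namespace-service-port style seen above."""
    return f"{namespace}-{service}-{port}"


def render_upstream(name: str, endpoints: List[Tuple[str, int]]) -> str:
    """Render an nginx upstream block that lists pod endpoints directly,
    bypassing the service's cluster IP."""
    lines = [f"upstream {name} {{", "    least_conn;"]
    for ip, port in endpoints:
        lines.append(f"    server {ip}:{port} max_fails=0 fail_timeout=0;")
    lines.append("}")
    return "\n".join(lines)


# The single heapster pod endpoint from the example above.
block = render_upstream(
    upstream_name("kube-system", "heapster", 80),
    [("192.168.90.6", 8082)],
)
print(block)
```

Because each `server` line is a pod address, Nginx itself decides which pod receives a request, so there is no second hop through the service and Nginx-level balancing or stickiness settings apply to the pods directly.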