A Brief Analysis of FFmpeg's H.264 Decoder Source Code: The Decoder Backbone


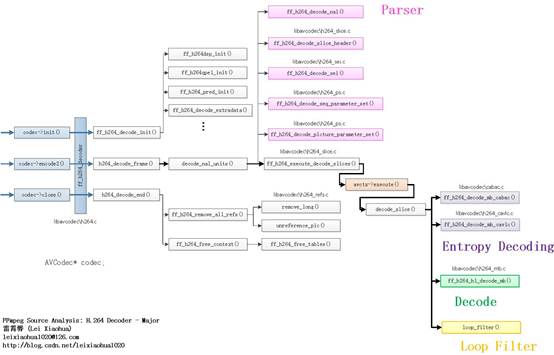

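This article analyzes the backbone of FFmpeg's H.264 decoder. "Backbone" is meant in contrast to the detail stages: entropy decoding, macroblock decoding, and loop filtering. It covers the function call chain of the H.264 decoder up to, but not including, decode_slice(). Everything from decode_slice() onward belongs to the detail stages, which consist mainly of those three parts.

Function Call Graph

The figure below shows where the backbone source code sits within the overall H.264 decoder.

The figure below shows the call relationships within the backbone source code.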

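As the figure shows, the H.264 decoder calls ff_h264_decode_init() during initialization. ff_h264_decode_init() in turn calls the following functions to initialize the decoder's assembly-optimized routines (only a few examples are listed):

ff_h264dsp_init(): initializes the DSP-related assembly functions, including the IDCT and loop filter functions.

ff_h264qpel_init(): initializes the assembly functions for quarter-pixel motion compensation.

ff_h264_pred_init(): initializes the assembly functions for intra prediction.

When the H.264 decoder is closed, it calls h264_decode_end(), which in turn calls ff_h264_remove_all_refs(), ff_h264_free_context(), and a few other functions to clean up.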

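To decode a picture frame, the H.264 decoder calls h264_decode_frame(), which calls decode_nal_units(). decode_nal_units() calls two kinds of functions, parsing functions and decoding functions, as listed below.

(1) Parsing functions (extract information):

ff_h264_decode_nal(): parses the NALU header.

ff_h264_decode_seq_parameter_set(): parses the SPS.

ff_h264_decode_picture_parameter_set(): parses the PPS.

ff_h264_decode_sei(): parses the SEI.

ff_h264_decode_slice_header(): parses the slice header.

(2) Decoding functions (produce the picture):

ff_h264_execute_decode_slices(): decodes a slice. ff_h264_execute_decode_slices() calls decode_slice(), which in turn calls the decoder's detail-level functions (not analyzed in detail here):

ff_h264_decode_mb_cabac(): CABAC entropy decoding.

ff_h264_decode_mb_cavlc(): CAVLC entropy decoding.

ff_h264_hl_decode_mb(): macroblock decoding.

loop_filter(): loop filtering.

This article analyzes the function call chain that precedes decode_slice() in the H.264 decoder.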
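The split between parsing and decoding can be illustrated with a small sketch. It is not FFmpeg's decode_nal_units(); the names NalHeader, NalAction, parse_nal_header() and classify_nal() are hypothetical and exist only for this example. The NAL unit type values and the layout of the one-byte NAL header (1 bit forbidden_zero_bit, 2 bits nal_ref_idc, 5 bits nal_unit_type) follow the H.264 specification.

```c
#include <stdint.h>

/* NAL unit types from the H.264 specification (subset). */
enum {
    NAL_SLICE     = 1,  /* coded slice of a non-IDR picture */
    NAL_IDR_SLICE = 5,  /* coded slice of an IDR picture */
    NAL_SEI       = 6,
    NAL_SPS       = 7,
    NAL_PPS       = 8
};

/* Hypothetical categories, used only in this sketch. */
typedef enum { ACT_IGNORE, ACT_PARSE, ACT_DECODE } NalAction;

typedef struct NalHeader {
    int ref_idc;  /* nal_ref_idc, 2 bits */
    int type;     /* nal_unit_type, 5 bits */
} NalHeader;

/* Split the one-byte NAL header into its fields.
 * Returns -1 if forbidden_zero_bit is set, 0 otherwise. */
static int parse_nal_header(uint8_t byte, NalHeader *out)
{
    if (byte & 0x80)
        return -1;
    out->ref_idc = (byte >> 5) & 0x3;
    out->type    = byte & 0x1F;
    return 0;
}

/* Decide whether a NAL unit only carries information to parse
 * or carries slice data that must be decoded into a picture. */
static NalAction classify_nal(int type)
{
    switch (type) {
    case NAL_SPS:
    case NAL_PPS:
    case NAL_SEI:
        return ACT_PARSE;
    case NAL_SLICE:
    case NAL_IDR_SLICE:
        return ACT_DECODE;  /* the slice header is parsed first, then the slice is decoded */
    default:
        return ACT_IGNORE;
    }
}
```

For instance, the header byte 0x67 yields nal_unit_type 7 (SPS), which this sketch routes to the parsing side, while 0x65 yields type 5 (IDR slice), which it routes to the decoding side.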

ff_h264_decoder

AVCodec ff_h264_decoder = {

.name = "h264",

.long_name = NULL_IF_CONFIG_SMALL("H.264 / AVC / MPEG-4 AVC / MPEG-4 part 10"),

.type = AVMEDIA_TYPE_VIDEO,

.id = AV_CODEC_ID_H264,

.priv_data_size = sizeof(H264Context),

.init = ff_h264_decode_init,

.close = h264_decode_end,

.decode = h264_decode_frame,

.capabilities = /*CODEC_CAP_DRAW_HORIZ_BAND |*/ CODEC_CAP_DR1 |

CODEC_CAP_DELAY | CODEC_CAP_SLICE_THREADS |

CODEC_CAP_FRAME_THREADS,

.flush = flush_dpb,

.init_thread_copy = ONLY_IF_THREADS_ENABLED(decode_init_thread_copy),

.update_thread_context = ONLY_IF_THREADS_ENABLED(ff_h264_update_thread_context),

.profiles = NULL_IF_CONFIG_SMALL(profiles),

.priv_class = &h264_class,

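};

As the definition of ff_h264_decoder shows, the initialization function pointer init() points to ff_h264_decode_init(), the decoding function pointer decode() points to h264_decode_frame(), and the close function pointer close() points to h264_decode_end().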
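The dispatch idea behind such a descriptor can be sketched in a few lines. DemoCodec, DemoContext and run_codec() below are hypothetical, heavily simplified stand-ins and not the real AVCodec or AVCodecContext; the point is only that the generic layer reaches a decoder purely through the function pointers stored in its descriptor.

```c
#include <string.h>

/* Hypothetical stand-in for a per-decoder context. */
typedef struct DemoContext {
    int opened;
    int frames_decoded;
} DemoContext;

/* Hypothetical stand-in for a codec descriptor. */
typedef struct DemoCodec {
    const char *name;
    int (*init)(DemoContext *ctx);
    int (*decode)(DemoContext *ctx, const unsigned char *buf, int size);
    int (*close)(DemoContext *ctx);
} DemoCodec;

static int demo_init(DemoContext *ctx)
{
    ctx->opened = 1;
    ctx->frames_decoded = 0;
    return 0;
}

static int demo_decode(DemoContext *ctx, const unsigned char *buf, int size)
{
    (void)buf;                 /* payload is not inspected in this sketch */
    if (!ctx->opened || size <= 0)
        return -1;
    ctx->frames_decoded++;
    return size;               /* number of bytes consumed */
}

static int demo_close(DemoContext *ctx)
{
    ctx->opened = 0;
    return 0;
}

/* The descriptor wires the three entry points together. */
static const DemoCodec demo_decoder = {
    .name   = "demo",
    .init   = demo_init,
    .decode = demo_decode,
    .close  = demo_close,
};

/* Generic layer: it never names a concrete decoder function. */
static int run_codec(const DemoCodec *c, DemoContext *ctx,
                     const unsigned char *buf, int size)
{
    int ret = c->init(ctx);
    if (ret < 0)
        return ret;
    ret = c->decode(ctx, buf, size);
    c->close(ctx);
    return ret;
}
```

Swapping in another decoder then only requires another descriptor; run_codec() stays unchanged.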

ff_h264_decode_init()

ff_h264_decode_init() initializes FFmpeg's H.264 decoder. The function is defined in libavcodec\h264.c, as shown below.

// H.264 decoder initialization function

av_cold int ff_h264_decode_init(AVCodecContext *avctx)

{

H264Context *h = avctx->priv_data;

int i;

int ret;

h->avctx = avctx;

// Color bit depth: 8 bits

h->bit_depth_luma = 8;

// 1 means 4:2:0 chroma sampling (YUV420P)

h->chroma_format_idc = 1;

h->avctx->bits_per_raw_sample = 8;

h->cur_chroma_format_idc = 1;

// Initialize the DSP-related assembly functions, including the IDCT and loop filter functions

ff_h264dsp_init(&h->h264dsp, 8, 1);

av_assert0(h->sps.bit_depth_chroma == 0);

ff_h264chroma_init(&h->h264chroma, h->sps.bit_depth_chroma);

// Initialize the assembly functions for quarter-pixel motion compensation

ff_h264qpel_init(&h->h264qpel, 8);

// Initialize the assembly functions for intra prediction

ff_h264_pred_init(&h->hpc, h->avctx->codec_id, 8, 1);

h->dequant_coeff_pps = -1;

h->current_sps_id = -1;

/* needed so that IDCT permutation is known early */

if (CONFIG_ERROR_RESILIENCE)

ff_me_cmp_init(&h->mecc, h->avctx);

ff_videodsp_init(&h->vdsp, 8);

memset(h->pps.scaling_matrix4, 16, 6 * 16 * sizeof(uint8_t));

memset(h->pps.scaling_matrix8, 16, 2 * 64 * sizeof(uint8_t));

h->picture_structure = PICT_FRAME;

h->slice_context_count = 1;

h->workaround_bugs = avctx->workaround_bugs;

h->flags = avctx->flags;

/* set defaults */

// s->decode_mb = ff_h263_decode_mb;

if (!avctx->has_b_frames)

h->low_delay = 1;

avctx->chroma_sample_location = AVCHROMA_LOC_LEFT;

// Initialize the entropy decoders

//CAVLC

ff_h264_decode_init_vlc();

//CABAC

ff_init_cabac_states();

// 0 for 8-bit H.264, 1 for bit depths above 8

h->pixel_shift = 0;

h->sps.bit_depth_luma = avctx->bits_per_raw_sample = 8;

h->thread_context[0] = h;

h->outputed_poc = h->next_outputed_poc = INT_MIN;

for (i = 0; i < MAX_DELAYED_PIC_COUNT; i++)

h->last_pocs[i] = INT_MIN;

h->prev_poc_msb = 1 << 16;

h->prev_frame_num = -1;

h->x264_build = -1;

h->sei_fpa.frame_packing_arrangement_cancel_flag = -1;

ff_h264_reset_sei(h);

if (avctx->codec_id == AV_CODEC_ID_H264) {

if (avctx->ticks_per_frame == 1) {

if(h->avctx->time_base.den < INT_MAX/2) {

h->avctx->time_base.den *= 2;

} else

h->avctx->time_base.num /= 2;

}

avctx->ticks_per_frame = 2;

}

// Does the AVCodecContext carry extradata? If so, parse it

if (avctx->extradata_size > 0 && avctx->extradata) {

ret = ff_h264_decode_extradata(h, avctx->extradata, avctx->extradata_size);

if (ret < 0) {

ff_h264_free_context(h);

return ret;

}

}

if (h->sps.bitstream_restriction_flag &&

h->avctx->has_b_frames < h->sps.num_reorder_frames) {

h->avctx->has_b_frames = h->sps.num_reorder_frames;

h->low_delay = 0;

}

avctx->internal->allocate_progress = 1;

ff_h264_flush_change(h);

return 0;

}

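The function definition shows that ff_h264_decode_init() does two things: it sets initial values for some of the decoder's variables (for example bit_depth_luma and chroma_format_idc), and it calls a series of initialization functions for the assembly routines (their details are covered in later articles). Initializing the assembly functions works as follows. First, the C versions are assigned to the function pointers of the corresponding module. Then the platform's features are checked: if no assembly optimization is available for the platform (ARM, X86, and so on), nothing further is done; if it is available, the optimized assembly functions are assigned to the module's function pointers, replacing the slower C versions. The following functions initialize the assembly-optimized routines of several modules:

ff_h264dsp_init(): initializes the DSP-related assembly functions, including the IDCT and loop filter functions.

ff_h264qpel_init(): initializes the assembly functions for quarter-pixel motion compensation.

ff_h264_pred_init(): initializes the assembly functions for intra prediction.

As an example, let's look at the code of ff_h264_pred_init().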
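The two-step pattern described above (install the C version, then overwrite it when an optimized version is usable) can be sketched as follows. Everything here is hypothetical: DemoDSPContext, demo_dsp_init() and the have_opt parameter are invented for illustration, and the "optimized" routine is ordinary C (memset) standing in for hand-written assembly.

```c
#include <stdint.h>
#include <string.h>

typedef void (*demo_fill_fn)(uint8_t *dst, int n, uint8_t v);

/* Hypothetical module context: one function pointer plus a label. */
typedef struct DemoDSPContext {
    demo_fill_fn fill;
    const char  *impl;
} DemoDSPContext;

/* Portable reference version. */
static void demo_fill_c(uint8_t *dst, int n, uint8_t v)
{
    for (int i = 0; i < n; i++)
        dst[i] = v;
}

/* Stand-in for a platform-specific optimized version. */
static void demo_fill_opt(uint8_t *dst, int n, uint8_t v)
{
    memset(dst, v, (size_t)n);
}

static void demo_dsp_init(DemoDSPContext *c, int have_opt)
{
    /* Step 1: always start from the C version. */
    c->fill = demo_fill_c;
    c->impl = "c";

    /* Step 2: replace it only if the platform supports the optimized one. */
    if (have_opt) {
        c->fill = demo_fill_opt;
        c->impl = "opt";
    }
}
```

Callers always go through c->fill, so they get the same result whichever version was installed.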

ff_h264_pred_init()

This function initializes the assembly functions for intra prediction. It is defined in libavcodec\h264pred.c, as shown below.

/**

* Set the intra prediction function pointers.

*/

// Initialize the intra prediction function pointers

av_cold void ff_h264_pred_init(H264PredContext *h, int codec_id,

const int bit_depth,

int chroma_format_idc)

{

#undef FUNC

#undef FUNCC

#define FUNC(a, depth) a ## _ ## depth

#define FUNCC(a, depth) a ## _ ## depth ## _c

#define FUNCD(a) a ## _c

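// A very long macro (long macros like this seem to be common in the H.264 decoder)

// This macro assigns the function pointers of the intra prediction module

// Note that its parameter is the color bit depth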

#define H264_PRED(depth) \

if(codec_id != AV_CODEC_ID_RV40){\

if (codec_id == AV_CODEC_ID_VP7 || codec_id == AV_CODEC_ID_VP8) {\

h->pred4x4[VERT_PRED ]= FUNCD(pred4x4_vertical_vp8);\

h->pred4x4[HOR_PRED ]= FUNCD(pred4x4_horizontal_vp8);\

} else {\

h->pred4x4[VERT_PRED ]= FUNCC(pred4x4_vertical , depth);\

h->pred4x4[HOR_PRED ]= FUNCC(pred4x4_horizontal , depth);\

}\

h->pred4x4[DC_PRED ]= FUNCC(pred4x4_dc , depth);\

if(codec_id == AV_CODEC_ID_SVQ3)\

h->pred4x4[DIAG_DOWN_LEFT_PRED ]= FUNCD(pred4x4_down_left_svq3);\

else\

h->pred4x4[DIAG_DOWN_LEFT_PRED ]= FUNCC(pred4x4_down_left , depth);\

h->pred4x4[DIAG_DOWN_RIGHT_PRED]= FUNCC(pred4x4_down_right , depth);\

h->pred4x4[VERT_RIGHT_PRED ]= FUNCC(pred4x4_vertical_right , depth);\

h->pred4x4[HOR_DOWN_PRED ]= FUNCC(pred4x4_horizontal_down , depth);\

if (codec_id == AV_CODEC_ID_VP7 || codec_id == AV_CODEC_ID_VP8) {\

h->pred4x4[VERT_LEFT_PRED ]= FUNCD(pred4x4_vertical_left_vp8);\

} else\

h->pred4x4[VERT_LEFT_PRED ]= FUNCC(pred4x4_vertical_left , depth);\

h->pred4x4[HOR_UP_PRED ]= FUNCC(pred4x4_horizontal_up , depth);\

if (codec_id != AV_CODEC_ID_VP7 && codec_id != AV_CODEC_ID_VP8) {\

h->pred4x4[LEFT_DC_PRED ]= FUNCC(pred4x4_left_dc , depth);\

h->pred4x4[TOP_DC_PRED ]= FUNCC(pred4x4_top_dc , depth);\

} else {\

h->pred4x4[TM_VP8_PRED ]= FUNCD(pred4x4_tm_vp8);\

h->pred4x4[DC_127_PRED ]= FUNCC(pred4x4_127_dc , depth);\

h->pred4x4[DC_129_PRED ]= FUNCC(pred4x4_129_dc , depth);\

h->pred4x4[VERT_VP8_PRED ]= FUNCC(pred4x4_vertical , depth);\

h->pred4x4[HOR_VP8_PRED ]= FUNCC(pred4x4_horizontal , depth);\

}\

if (codec_id != AV_CODEC_ID_VP8)\

h->pred4x4[DC_128_PRED ]= FUNCC(pred4x4_128_dc , depth);\

}else{\

h->pred4x4[VERT_PRED ]= FUNCC(pred4x4_vertical , depth);\

h->pred4x4[HOR_PRED ]= FUNCC(pred4x4_horizontal , depth);\

h->pred4x4[DC_PRED ]= FUNCC(pred4x4_dc , depth);\

h->pred4x4[DIAG_DOWN_LEFT_PRED ]= FUNCD(pred4x4_down_left_rv40);\

h->pred4x4[DIAG_DOWN_RIGHT_PRED]= FUNCC(pred4x4_down_right , depth);\

h->pred4x4[VERT_RIGHT_PRED ]= FUNCC(pred4x4_vertical_right , depth);\

h->pred4x4[HOR_DOWN_PRED ]= FUNCC(pred4x4_horizontal_down , depth);\

h->pred4x4[VERT_LEFT_PRED ]= FUNCD(pred4x4_vertical_left_rv40);\

h->pred4x4[HOR_UP_PRED ]= FUNCD(pred4x4_horizontal_up_rv40);\

h->pred4x4[LEFT_DC_PRED ]= FUNCC(pred4x4_left_dc , depth);\

h->pred4x4[TOP_DC_PRED ]= FUNCC(pred4x4_top_dc , depth);\

h->pred4x4[DC_128_PRED ]= FUNCC(pred4x4_128_dc , depth);\

h->pred4x4[DIAG_DOWN_LEFT_PRED_RV40_NODOWN]= FUNCD(pred4x4_down_left_rv40_nodown);\

h->pred4x4[HOR_UP_PRED_RV40_NODOWN]= FUNCD(pred4x4_horizontal_up_rv40_nodown);\

h->pred4x4[VERT_LEFT_PRED_RV40_NODOWN]= FUNCD(pred4x4_vertical_left_rv40_nodown);\

}\

\

h->pred8x8l[VERT_PRED ]= FUNCC(pred8x8l_vertical , depth);\

h->pred8x8l[HOR_PRED ]= FUNCC(pred8x8l_horizontal , depth);\

h->pred8x8l[DC_PRED ]= FUNCC(pred8x8l_dc , depth);\

h->pred8x8l[DIAG_DOWN_LEFT_PRED ]= FUNCC(pred8x8l_down_left , depth);\

h->pred8x8l[DIAG_DOWN_RIGHT_PRED]= FUNCC(pred8x8l_down_right , depth);\

h->pred8x8l[VERT_RIGHT_PRED ]= FUNCC(pred8x8l_vertical_right , depth);\

h->pred8x8l[HOR_DOWN_PRED ]= FUNCC(pred8x8l_horizontal_down , depth);\

h->pred8x8l[VERT_LEFT_PRED ]= FUNCC(pred8x8l_vertical_left , depth);\

h->pred8x8l[HOR_UP_PRED ]= FUNCC(pred8x8l_horizontal_up , depth);\

h->pred8x8l[LEFT_DC_PRED ]= FUNCC(pred8x8l_left_dc , depth);\

h->pred8x8l[TOP_DC_PRED ]= FUNCC(pred8x8l_top_dc , depth);\

h->pred8x8l[DC_128_PRED ]= FUNCC(pred8x8l_128_dc , depth);\

\

if (chroma_format_idc <= 1) {\

h->pred8x8[VERT_PRED8x8 ]= FUNCC(pred8x8_vertical , depth);\

h->pred8x8[HOR_PRED8x8 ]= FUNCC(pred8x8_horizontal , depth);\

} else {\

h->pred8x8[VERT_PRED8x8 ]= FUNCC(pred8x16_vertical , depth);\

h->pred8x8[HOR_PRED8x8 ]= FUNCC(pred8x16_horizontal , depth);\

}\

if (codec_id != AV_CODEC_ID_VP7 && codec_id != AV_CODEC_ID_VP8) {\

if (chroma_format_idc <= 1) {\

h->pred8x8[PLANE_PRED8x8]= FUNCC(pred8x8_plane , depth);\

} else {\

h->pred8x8[PLANE_PRED8x8]= FUNCC(pred8x16_plane , depth);\

}\

} else\

h->pred8x8[PLANE_PRED8x8]= FUNCD(pred8x8_tm_vp8);\

if (codec_id != AV_CODEC_ID_RV40 && codec_id != AV_CODEC_ID_VP7 && \

codec_id != AV_CODEC_ID_VP8) {\

if (chroma_format_idc <= 1) {\

h->pred8x8[DC_PRED8x8 ]= FUNCC(pred8x8_dc , depth);\

h->pred8x8[LEFT_DC_PRED8x8]= FUNCC(pred8x8_left_dc , depth);\

h->pred8x8[TOP_DC_PRED8x8 ]= FUNCC(pred8x8_top_dc , depth);\

h->pred8x8[ALZHEIMER_DC_L0T_PRED8x8 ]= FUNC(pred8x8_mad_cow_dc_l0t, depth);\

h->pred8x8[ALZHEIMER_DC_0LT_PRED8x8 ]= FUNC(pred8x8_mad_cow_dc_0lt, depth);\

h->pred8x8[ALZHEIMER_DC_L00_PRED8x8 ]= FUNC(pred8x8_mad_cow_dc_l00, depth);\

h->pred8x8[ALZHEIMER_DC_0L0_PRED8x8 ]= FUNC(pred8x8_mad_cow_dc_0l0, depth);\

} else {\

h->pred8x8[DC_PRED8x8 ]= FUNCC(pred8x16_dc , depth);\

h->pred8x8[LEFT_DC_PRED8x8]= FUNCC(pred8x16_left_dc , depth);\

h->pred8x8[TOP_DC_PRED8x8 ]= FUNCC(pred8x16_top_dc , depth);\

h->pred8x8[ALZHEIMER_DC_L0T_PRED8x8 ]= FUNC(pred8x16_mad_cow_dc_l0t, depth);\

h->pred8x8[ALZHEIMER_DC_0LT_PRED8x8 ]= FUNC(pred8x16_mad_cow_dc_0lt, depth);\

h->pred8x8[ALZHEIMER_DC_L00_PRED8x8 ]= FUNC(pred8x16_mad_cow_dc_l00, depth);\

h->pred8x8[ALZHEIMER_DC_0L0_PRED8x8 ]= FUNC(pred8x16_mad_cow_dc_0l0, depth);\

}\

}else{\

h->pred8x8[DC_PRED8x8 ]= FUNCD(pred8x8_dc_rv40);\

h->pred8x8[LEFT_DC_PRED8x8]= FUNCD(pred8x8_left_dc_rv40);\

h->pred8x8[TOP_DC_PRED8x8 ]= FUNCD(pred8x8_top_dc_rv40);\

if (codec_id == AV_CODEC_ID_VP7 || codec_id == AV_CODEC_ID_VP8) {\

h->pred8x8[DC_127_PRED8x8]= FUNCC(pred8x8_127_dc , depth);\

h->pred8x8[DC_129_PRED8x8]= FUNCC(pred8x8_129_dc , depth);\

}\

}\

if (chroma_format_idc <= 1) {\

h->pred8x8[DC_128_PRED8x8 ]= FUNCC(pred8x8_128_dc , depth);\

} else {\

h->pred8x8[DC_128_PRED8x8 ]= FUNCC(pred8x16_128_dc , depth);\

}\

\

h->pred16x16[DC_PRED8x8 ]= FUNCC(pred16x16_dc , depth);\

h->pred16x16[VERT_PRED8x8 ]= FUNCC(pred16x16_vertical , depth);\

h->pred16x16[HOR_PRED8x8 ]= FUNCC(pred16x16_horizontal , depth);\

switch(codec_id){\

case AV_CODEC_ID_SVQ3:\

h->pred16x16[PLANE_PRED8x8 ]= FUNCD(pred16x16_plane_svq3);\

break;\

case AV_CODEC_ID_RV40:\

h->pred16x16[PLANE_PRED8x8 ]= FUNCD(pred16x16_plane_rv40);\

break;\

case AV_CODEC_ID_VP7:\

case AV_CODEC_ID_VP8:\

h->pred16x16[PLANE_PRED8x8 ]= FUNCD(pred16x16_tm_vp8);\

h->pred16x16[DC_127_PRED8x8]= FUNCC(pred16x16_127_dc , depth);\

h->pred16x16[DC_129_PRED8x8]= FUNCC(pred16x16_129_dc , depth);\

break;\

default:\

h->pred16x16[PLANE_PRED8x8 ]= FUNCC(pred16x16_plane , depth);\

break;\

}\

h->pred16x16[LEFT_DC_PRED8x8]= FUNCC(pred16x16_left_dc , depth);\

h->pred16x16[TOP_DC_PRED8x8 ]= FUNCC(pred16x16_top_dc , depth);\

h->pred16x16[DC_128_PRED8x8 ]= FUNCC(pred16x16_128_dc , depth);\

\

/* special lossless h/v prediction for h264 */ \

h->pred4x4_add [VERT_PRED ]= FUNCC(pred4x4_vertical_add , depth);\

h->pred4x4_add [ HOR_PRED ]= FUNCC(pred4x4_horizontal_add , depth);\

h->pred8x8l_add [VERT_PRED ]= FUNCC(pred8x8l_vertical_add , depth);\

h->pred8x8l_add [ HOR_PRED ]= FUNCC(pred8x8l_horizontal_add , depth);\

h->pred8x8l_filter_add [VERT_PRED ]= FUNCC(pred8x8l_vertical_filter_add , depth);\

h->pred8x8l_filter_add [ HOR_PRED ]= FUNCC(pred8x8l_horizontal_filter_add , depth);\

if (chroma_format_idc <= 1) {\

h->pred8x8_add [VERT_PRED8x8]= FUNCC(pred8x8_vertical_add , depth);\

h->pred8x8_add [ HOR_PRED8x8]= FUNCC(pred8x8_horizontal_add , depth);\

} else {\

h->pred8x8_add [VERT_PRED8x8]= FUNCC(pred8x16_vertical_add , depth);\

h->pred8x8_add [ HOR_PRED8x8]= FUNCC(pred8x16_horizontal_add , depth);\

}\

h->pred16x16_add[VERT_PRED8x8]= FUNCC(pred16x16_vertical_add , depth);\

h->pred16x16_add[ HOR_PRED8x8]= FUNCC(pred16x16_horizontal_add , depth);\

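// Note that the long macro defined above is used here

// Different functions are installed depending on the color bit depth

// The default bit depth is 8, so H264_PRED(8) is the usual case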

switch (bit_depth) {

case 9:

H264_PRED(9)

break;

case 10:

H264_PRED(10)

break;

case 12:

H264_PRED(12)

break;

case 14:

H264_PRED(14)

break;

default:

av_assert0(bit_depth<=8);

H264_PRED(8)

break;

}

// If assembly optimization is available, install the optimized functions

// NEON and the like

if (ARCH_ARM) ff_h264_pred_init_arm(h, codec_id, bit_depth, chroma_format_idc);

// MMX and the like

if (ARCH_X86) ff_h264_pred_init_x86(h, codec_id, bit_depth, chroma_format_idc);

}

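At first glance the definition of ff_h264_pred_init() looks odd: why is the H264_PRED(depth) macro so long? In fact, long macros like this are common in FFmpeg's H.264 decoder. In my view, the main purpose is to make it easy to install different functions for streams of different color bit depths. For the common 8-bit streams, H264_PRED(8) installs the matching functions; for the newer 10-bit streams, H264_PRED(10) does the same.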
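The mechanism that makes one macro serve several bit depths is token pasting with the ## operator, as in the FUNCC definition in the listing. A minimal sketch follows; DEMO_FUNCC, DEMO_PRED_INIT, DemoPred and the max_pixel functions are hypothetical names chosen for this example, not part of FFmpeg.

```c
/* Paste a bit depth into a function name, in the style of FUNCC. */
#define DEMO_FUNCC(name, depth) name ## _ ## depth ## _c

/* Two depth-specific functions, named so the macro can find them. */
static int max_pixel_8_c(void)  { return (1 << 8)  - 1; }
static int max_pixel_10_c(void) { return (1 << 10) - 1; }

/* Hypothetical module context with a single function pointer. */
typedef struct DemoPred {
    int (*max_pixel)(void);
} DemoPred;

/* One macro body, reused for every supported depth. */
#define DEMO_PRED_INIT(depth) \
    p->max_pixel = DEMO_FUNCC(max_pixel, depth);

static void demo_pred_init_depth(DemoPred *p, int bit_depth)
{
    switch (bit_depth) {
    case 10:
        DEMO_PRED_INIT(10)   /* expands to p->max_pixel = max_pixel_10_c; */
        break;
    default:
        DEMO_PRED_INIT(8)    /* expands to p->max_pixel = max_pixel_8_c; */
        break;
    }
}
```

Because the depth is pasted at preprocessing time, each case of the switch names a concrete function, and adding a depth means adding one more case rather than another copy of the assignment list.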

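The executable part of ff_h264_pred_init() starts at the switch() statement, where the function invokes a different H264_PRED(bit_depth) macro depending on bit_depth (the color bit depth). Let's expand one H264_PRED() macro to see what the code actually is. We pick the most common case, 8 bit, and look at the expansion of H264_PRED(8).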

H264_PRED(8)

H264_PRED(8) installs the C versions of the intra prediction functions for 8-bit color depth. The macro expands as shown below.

if(codec_id != AV_CODEC_ID_RV40){

if (codec_id == AV_CODEC_ID_VP7 || codec_id == AV_CODEC_ID_VP8) {

h->pred4x4[0 ]= pred4x4_vertical_vp8_c;

h->pred4x4[1 ]= pred4x4_horizontal_vp8_c;

} else {

// Intra 4x4 Vertical prediction mode

h->pred4x4[0 ]= pred4x4_vertical_8_c;

// Intra 4x4 Horizontal prediction mode

h->pred4x4[1 ]= pred4x4_horizontal_8_c;

}

// Intra 4x4 DC prediction mode

h->pred4x4[2 ]= pred4x4_dc_8_c;

if(codec_id == AV_CODEC_ID_SVQ3)

h->pred4x4[3 ]= pred4x4_down_left_svq3_c;

else

h->pred4x4[3 ]= pred4x4_down_left_8_c;

h->pred4x4[4]= pred4x4_down_right_8_c;

h->pred4x4[5 ]= pred4x4_vertical_right_8_c;

h->pred4x4[6 ]= pred4x4_horizontal_down_8_c;

if (codec_id == AV_CODEC_ID_VP7 || codec_id == AV_CODEC_ID_VP8) {

h->pred4x4[7 ]= pred4x4_vertical_left_vp8_c;

} else

h->pred4x4[7 ]= pred4x4_vertical_left_8_c;

h->pred4x4[8 ]= pred4x4_horizontal_up_8_c;

if (codec_id != AV_CODEC_ID_VP7 && codec_id != AV_CODEC_ID_VP8) {

h->pred4x4[9 ]= pred4x4_left_dc_8_c;

h->pred4x4[10 ]= pred4x4_top_dc_8_c;

} else {

h->pred4x4[9 ]= pred4x4_tm_vp8_c;

h->pred4x4[12 ]= pred4x4_127_dc_8_c;

h->pred4x4[13 ]= pred4x4_129_dc_8_c;

h->pred4x4[10 ]= pred4x4_vertical_8_c;

h->pred4x4[14 ]= pred4x4_horizontal_8_c;

}

if (codec_id != AV_CODEC_ID_VP8)

h->pred4x4[11 ]= pred4x4_128_dc_8_c;

}else{

h->pred4x4[0 ]= pred4x4_vertical_8_c;

h->pred4x4[1 ]= pred4x4_horizontal_8_c;

h->pred4x4[2 ]= pred4x4_dc_8_c;

h->pred4x4[3 ]= pred4x4_down_left_rv40_c;

h->pred4x4[4]= pred4x4_down_right_8_c;

h->pred4x4[5 ]= pred4x4_vertical_right_8_c;

h->pred4x4[6 ]= pred4x4_horizontal_down_8_c;

h->pred4x4[7 ]= pred4x4_vertical_left_rv40_c;

h->pred4x4[8 ]= pred4x4_horizontal_up_rv40_c;

h->pred4x4[9 ]= pred4x4_left_dc_8_c;

h->pred4x4[10 ]= pred4x4_top_dc_8_c;

h->pred4x4[11 ]= pred4x4_128_dc_8_c;

h->pred4x4[12]= pred4x4_down_left_rv40_nodown_c;

h->pred4x4[13]= pred4x4_horizontal_up_rv40_nodown_c;

h->pred4x4[14]= pred4x4_vertical_left_rv40_nodown_c;

}

h->pred8x8l[0 ]= pred8x8l_vertical_8_c;

h->pred8x8l[1 ]= pred8x8l_horizontal_8_c;

h->pred8x8l[2 ]= pred8x8l_dc_8_c;

h->pred8x8l[3 ]= pred8x8l_down_left_8_c;

h->pred8x8l[4]= pred8x8l_down_right_8_c;

h->pred8x8l[5 ]= pred8x8l_vertical_right_8_c;

h->pred8x8l[6 ]= pred8x8l_horizontal_down_8_c;

h->pred8x8l[7 ]= pred8x8l_vertical_left_8_c;

h->pred8x8l[8 ]= pred8x8l_horizontal_up_8_c;

h->pred8x8l[9 ]= pred8x8l_left_dc_8_c;

h->pred8x8l[10 ]= pred8x8l_top_dc_8_c;

h->pred8x8l[11 ]= pred8x8l_128_dc_8_c;

if (chroma_format_idc <= 1) {

h->pred8x8[2 ]= pred8x8_vertical_8_c;

h->pred8x8[1 ]= pred8x8_horizontal_8_c;

} else {

h->pred8x8[2 ]= pred8x16_vertical_8_c;

h->pred8x8[1 ]= pred8x16_horizontal_8_c;

}

if (codec_id != AV_CODEC_ID_VP7 && codec_id != AV_CODEC_ID_VP8) {

if (chroma_format_idc <= 1) {

h->pred8x8[3]= pred8x8_plane_8_c;

} else {

h->pred8x8[3]= pred8x16_plane_8_c;

}

} else

h->pred8x8[3]= pred8x8_tm_vp8_c;

if (codec_id != AV_CODEC_ID_RV40 && codec_id != AV_CODEC_ID_VP7 &&

codec_id != AV_CODEC_ID_VP8) {

if (chroma_format_idc <= 1) {

h->pred8x8[0 ]= pred8x8_dc_8_c;

h->pred8x8[4]= pred8x8_left_dc_8_c;

h->pred8x8[5 ]= pred8x8_top_dc_8_c;

h->pred8x8[7 ]= pred8x8_mad_cow_dc_l0t_8;

h->pred8x8[8 ]= pred8x8_mad_cow_dc_0lt_8;

h->pred8x8[9 ]= pred8x8_mad_cow_dc_l00_8;

h->pred8x8[10 ]= pred8x8_mad_cow_dc_0l0_8;

} else {

h->pred8x8[0 ]= pred8x16_dc_8_c;

h->pred8x8[4]= pred8x16_left_dc_8_c;

h->pred8x8[5 ]= pred8x16_top_dc_8_c;

h->pred8x8[7 ]= pred8x16_mad_cow_dc_l0t_8;

h->pred8x8[8 ]= pred8x16_mad_cow_dc_0lt_8;

h->pred8x8[9 ]= pred8x16_mad_cow_dc_l00_8;

h->pred8x8[10 ]= pred8x16_mad_cow_dc_0l0_8;

}

}else{

h->pred8x8[0 ]= pred8x8_dc_rv40_c;

h->pred8x8[4]= pred8x8_left_dc_rv40_c;

h->pred8x8[5 ]= pred8x8_top_dc_rv40_c;

if (codec_id == AV_CODEC_ID_VP7 || codec_id == AV_CODEC_ID_VP8) {

h->pred8x8[7]= pred8x8_127_dc_8_c;

h->pred8x8[8]= pred8x8_129_dc_8_c;

}

}

if (chroma_format_idc <= 1) {

h->pred8x8[6 ]= pred8x8_128_dc_8_c;

} else {

h->pred8x8[6 ]= pred8x16_128_dc_8_c;

}

h->pred16x16[0 ]= pred16x16_dc_8_c;

h->pred16x16[2 ]= pred16x16_vertical_8_c;

h->pred16x16[1 ]= pred16x16_horizontal_8_c;

switch(codec_id){

case AV_CODEC_ID_SVQ3:

h->pred16x16[3 ]= pred16x16_plane_svq3_c;

break;

case AV_CODEC_ID_RV40:

h->pred16x16[3 ]= pred16x16_plane_rv40_c;

break;

case AV_CODEC_ID_VP7:

case AV_CODEC_ID_VP8:

h->pred16x16[3 ]= pred16x16_tm_vp8_c;

h->pred16x16[7]= pred16x16_127_dc_8_c;

h->pred16x16[8]= pred16x16_129_dc_8_c;

break;

default:

h->pred16x16[3 ]= pred16x16_plane_8_c;

break;

}

h->pred16x16[4]= pred16x16_left_dc_8_c;

h->pred16x16[5 ]= pred16x16_top_dc_8_c;

h->pred16x16[6 ]= pred16x16_128_dc_8_c;

/* special lossless h/v prediction for h264 */

h->pred4x4_add [0 ]= pred4x4_vertical_add_8_c;

h->pred4x4_add [ 1 ]= pred4x4_horizontal_add_8_c;

h->pred8x8l_add [0 ]= pred8x8l_vertical_add_8_c;

h->pred8x8l_add [ 1 ]= pred8x8l_horizontal_add_8_c;

h->pred8x8l_filter_add [0 ]= pred8x8l_vertical_filter_add_8_c;

h->pred8x8l_filter_add [ 1 ]= pred8x8l_horizontal_filter_add_8_c;

if (chroma_format_idc <= 1) {

h->pred8x8_add [2]= pred8x8_vertical_add_8_c;

h->pred8x8_add [ 1]= pred8x8_horizontal_add_8_c;

} else {

h->pred8x8_add [2]= pred8x16_vertical_add_8_c;

h->pred8x8_add [ 1]= pred8x16_horizontal_add_8_c;

}

h->pred16x16_add[2]= pred16x16_vertical_add_8_c;

h->pred16x16_add[ 1]= pred16x16_horizontal_add_8_c;

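The expanded H264_PRED(8) code shows that the function pointers of the intra prediction module are, for the most part, assigned functions named xxxx_8_c(). For example, intra 4x4 mode 0 is assigned pred4x4_vertical_8_c() and intra 4x4 mode 1 is assigned pred4x4_horizontal_8_c(), as shown below.

// Intra 4x4 Vertical prediction mode

h->pred4x4[0 ]= pred4x4_vertical_8_c;

// Intra 4x4 Horizontal prediction mode

h->pred4x4[1 ]= pred4x4_horizontal_8_c;

Let's take a quick look at pred4x4_vertical_8_c(), which performs 4x4 intra prediction in Vertical mode.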

pred4x4_vertical_8_c()

pred4x4_vertical_8_c() is defined in libavcodec\h264pred_template.c, as shown below.

// Vertical prediction

// Derive the pixel values from the pixels above the block

static void pred4x4_vertical_8_c (uint8_t *_src, const uint8_t *topright,

ptrdiff_t _stride)

{

pixel *src = (pixel*)_src;

int stride = _stride>>(sizeof(pixel)-1);

/*

* Vertical prediction mode

* |X1 X2 X3 X4

* --+-----------

* |X1 X2 X3 X4

* |X1 X2 X3 X4

* |X1 X2 X3 X4

* |X1 X2 X3 X4

*

*/

// pixel4 holds 4 pixel values. One pixel takes 8 bits, so 4 pixels take 32 bits.

const pixel4 a= AV_RN4PA(src-stride);

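/* After macro expansion:

* const uint32_t a=(((const av_alias32*)(src-stride))->u32);

* Note: av_alias32 is a union type that lets the same 4 bytes be accessed as a 32-bit integer or a float.

* -stride addresses the same position in the row above,

* so a holds the pixel values of the row above.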

*/

AV_WN4PA(src+0*stride, a);

AV_WN4PA(src+1*stride, a);

AV_WN4PA(src+2*stride, a);

AV_WN4PA(src+3*stride, a);

/* After macro expansion:

* (((av_alias32*)(src+0*stride))->u32 = (a));

* (((av_alias32*)(src+1*stride))->u32 = (a));

* (((av_alias32*)(src+2*stride))->u32 = (a));

* (((av_alias32*)(src+3*stride))->u32 = (a));

* i.e. the value of a is written to each of the 4 rows below.

*/

}

The code of pred4x4_vertical_8_c() is analyzed in detail in a later article, so no further explanation is given here.
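For readers who want to try the Vertical mode in isolation, here is a minimal standalone sketch for 8-bit pixels. It is not the FFmpeg function: demo_pred4x4_vertical() is a hypothetical name, and memcpy() is swapped in for the AV_RN4PA/AV_WN4PA macros so that the example has no FFmpeg dependency.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* src points at the top-left pixel of a 4x4 block inside a larger picture.
 * stride is the distance in bytes between two consecutive rows.
 * The row directly above the block must be readable. */
static void demo_pred4x4_vertical(uint8_t *src, ptrdiff_t stride)
{
    uint32_t a;

    /* Read the 4 pixels of the row above the block in one go. */
    memcpy(&a, src - stride, 4);

    /* Write those 4 pixels into each of the block's 4 rows. */
    for (int y = 0; y < 4; y++)
        memcpy(src + y * stride, &a, 4);
}
```

With a row above the block holding 10, 20, 30, 40, every row of the block becomes 10, 20, 30, 40 after the call, and pixels outside the 4 columns are left untouched.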

ff_h264_pred_init_x86()

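When ARM assembly optimization is available (ARCH_ARM is 1), ff_h264_pred_init_arm() is called to install the ARM assembly-optimized intra prediction functions; when X86 assembly optimization is available (ARCH_X86 is 1), ff_h264_pred_init_x86() is called to install the X86 ones. Here we take a brief look at ff_h264_pred_init_x86(), which is defined in libavcodec\x86\h264_intrapred_init.c, as shown below.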

av_cold void ff_h264_pred_init_x86(H264PredContext *h, int codec_id,

const int bit_depth,

const int chroma_format_idc)

{

int cpu_flags = av_get_cpu_flags();

if (bit_depth == 8) {

if (EXTERNAL_MMX(cpu_flags)) {

h->pred16x16[VERT_PRED8x8 ] = ff_pred16x16_vertical_8_mmx;

h->pred16x16[HOR_PRED8x8 ] = ff_pred16x16_horizontal_8_mmx;

if (chroma_format_idc <= 1) {

h->pred8x8 [VERT_PRED8x8 ] = ff_pred8x8_vertical_8_mmx;

h->pred8x8 [HOR_PRED8x8 ] = ff_pred8x8_horizontal_8_mmx;

}

if (codec_id == AV_CODEC_ID_VP7 || codec_id == AV_CODEC_ID_VP8) {

h->pred16x16[PLANE_PRED8x8 ] = ff_pred16x16_tm_vp8_8_mmx;

h->pred8x8 [PLANE_PRED8x8 ] = ff_pred8x8_tm_vp8_8_mmx;

h->pred4x4 [TM_VP8_PRED ] = ff_pred4x4_tm_vp8_8_mmx;

} else {

if (chroma_format_idc <= 1)

h->pred8x8 [PLANE_PRED8x8] = ff_pred8x8_plane_8_mmx;

if (codec_id == AV_CODEC_ID_SVQ3) {

if (cpu_flags & AV_CPU_FLAG_CMOV)

h->pred16x16[PLANE_PRED8x8] = ff_pred16x16_plane_svq3_8_mmx;

} else if (codec_id == AV_CODEC_ID_RV40) {

h->pred16x16[PLANE_PRED8x8] = ff_pred16x16_plane_rv40_8_mmx;

} else {

h->pred16x16[PLANE_PRED8x8] = ff_pred16x16_plane_h264_8_mmx;

}

}

}

if (EXTERNAL_MMXEXT(cpu_flags)) {

h->pred16x16[HOR_PRED8x8 ] = ff_pred16x16_horizontal_8_mmxext;

h->pred16x16[DC_PRED8x8 ] = ff_pred16x16_dc_8_mmxext;

if (chroma_format_idc <= 1)

h->pred8x8[HOR_PRED8x8 ] = ff_pred8x8_horizontal_8_mmxext;

h->pred8x8l [TOP_DC_PRED ] = ff_pred8x8l_top_dc_8_mmxext;

h->pred8x8l [DC_PRED ] = ff_pred8x8l_dc_8_mmxext;

h->pred8x8l [HOR_PRED ] = ff_pred8x8l_horizontal_8_mmxext;

h->pred8x8l [VERT_PRED ] = ff_pred8x8l_vertical_8_mmxext;

h->pred8x8l [DIAG_DOWN_RIGHT_PRED ] = ff_pred8x8l_down_right_8_mmxext;

h->pred8x8l [VERT_RIGHT_PRED ] = ff_pred8x8l_vertical_right_8_mmxext;

h->pred8x8l [HOR_UP_PRED ] = ff_pred8x8l_horizontal_up_8_mmxext;

h->pred8x8l [DIAG_DOWN_LEFT_PRED ] = ff_pred8x8l_down_left_8_mmxext;

h->pred8x8l [HOR_DOWN_PRED ] = ff_pred8x8l_horizontal_down_8_mmxext;

h->pred4x4 [DIAG_DOWN_RIGHT_PRED ] = ff_pred4x4_down_right_8_mmxext;

h->pred4x4 [VERT_RIGHT_PRED ] = ff_pred4x4_vertical_right_8_mmxext;

h->pred4x4 [HOR_DOWN_PRED ] = ff_pred4x4_horizontal_down_8_mmxext;

h->pred4x4 [DC_PRED ] = ff_pred4x4_dc_8_mmxext;

if (codec_id == AV_CODEC_ID_VP7 || codec_id == AV_CODEC_ID_VP8 ||

codec_id == AV_CODEC_ID_H264) {

h->pred4x4 [DIAG_DOWN_LEFT_PRED] = ff_pred4x4_down_left_8_mmxext;

}

if (codec_id == AV_CODEC_ID_SVQ3 || codec_id == AV_CODEC_ID_H264) {

h->pred4x4 [VERT_LEFT_PRED ] = ff_pred4x4_vertical_left_8_mmxext;

}

if (codec_id != AV_CODEC_ID_RV40) {

h->pred4x4 [HOR_UP_PRED ] = ff_pred4x4_horizontal_up_8_mmxext;

}

if (codec_id == AV_CODEC_ID_SVQ3 || codec_id == AV_CODEC_ID_H264) {

if (chroma_format_idc <= 1) {

h->pred8x8[TOP_DC_PRED8x8 ] = ff_pred8x8_top_dc_8_mmxext;

h->pred8x8[DC_PRED8x8 ] = ff_pred8x8_dc_8_mmxext;

}

}

if (codec_id == AV_CODEC_ID_VP7 || codec_id == AV_CODEC_ID_VP8) {

h->pred16x16[PLANE_PRED8x8 ] = ff_pred16x16_tm_vp8_8_mmxext;

h->pred8x8 [DC_PRED8x8 ] = ff_pred8x8_dc_rv40_8_mmxext;

h->pred8x8 [PLANE_PRED8x8 ] = ff_pred8x8_tm_vp8_8_mmxext;

h->pred4x4 [TM_VP8_PRED ] = ff_pred4x4_tm_vp8_8_mmxext;

h->pred4x4 [VERT_PRED ] = ff_pred4x4_vertical_vp8_8_mmxext;

} else {

if (chroma_format_idc <= 1)

h->pred8x8 [PLANE_PRED8x8] = ff_pred8x8_plane_8_mmxext;

if (codec_id == AV_CODEC_ID_SVQ3) {

h->pred16x16[PLANE_PRED8x8 ] = ff_pred16x16_plane_svq3_8_mmxext;

} else if (codec_id == AV_CODEC_ID_RV40) {

h->pred16x16[PLANE_PRED8x8 ] = ff_pred16x16_plane_rv40_8_mmxext;

} else {

h->pred16x16[PLANE_PRED8x8 ] = ff_pred16x16_plane_h264_8_mmxext;

}

}

}

if (EXTERNAL_SSE(cpu_flags)) {

h->pred16x16[VERT_PRED8x8] = ff_pred16x16_vertical_8_sse;

}

if (EXTERNAL_SSE2(cpu_flags)) {

h->pred16x16[DC_PRED8x8 ] = ff_pred16x16_dc_8_sse2;

h->pred8x8l [DIAG_DOWN_LEFT_PRED ] = ff_pred8x8l_down_left_8_sse2;

h->pred8x8l [DIAG_DOWN_RIGHT_PRED ] = ff_pred8x8l_down_right_8_sse2;

h->pred8x8l [VERT_RIGHT_PRED ] = ff_pred8x8l_vertical_right_8_sse2;

h->pred8x8l [VERT_LEFT_PRED ] = ff_pred8x8l_vertical_left_8_sse2;

h->pred8x8l [HOR_DOWN_PRED ] = ff_pred8x8l_horizontal_down_8_sse2;

if (codec_id == AV_CODEC_ID_VP7 || codec_id == AV_CODEC_ID_VP8) {

h->pred16x16[PLANE_PRED8x8 ] = ff_pred16x16_tm_vp8_8_sse2;

h->pred8x8 [PLANE_PRED8x8 ] = ff_pred8x8_tm_vp8_8_sse2;

} else {

if (chroma_format_idc <= 1)

h->pred8x8 [PLANE_PRED8x8] = ff_pred8x8_plane_8_sse2;

if (codec_id == AV_CODEC_ID_SVQ3) {

h->pred16x16[PLANE_PRED8x8] = ff_pred16x16_plane_svq3_8_sse2;

} else if (codec_id == AV_CODEC_ID_RV40) {

h->pred16x16[PLANE_PRED8x8] = ff_pred16x16_plane_rv40_8_sse2;

} else {

h->pred16x16[PLANE_PRED8x8] = ff_pred16x16_plane_h264_8_sse2;

}

}

}

if (EXTERNAL_SSSE3(cpu_flags)) {

h->pred16x16[HOR_PRED8x8 ] = ff_pred16x16_horizontal_8_ssse3;

h->pred16x16[DC_PRED8x8 ] = ff_pred16x16_dc_8_ssse3;

if (chroma_format_idc <= 1)

h->pred8x8 [HOR_PRED8x8 ] = ff_pred8x8_horizontal_8_ssse3;

h->pred8x8l [TOP_DC_PRED ] = ff_pred8x8l_top_dc_8_ssse3;

h->pred8x8l [DC_PRED ] = ff_pred8x8l_dc_8_ssse3;

h->pred8x8l [HOR_PRED ] = ff_pred8x8l_horizontal_8_ssse3;

h->pred8x8l [VERT_PRED ] = ff_pred8x8l_vertical_8_ssse3;

h->pred8x8l [DIAG_DOWN_LEFT_PRED ] = ff_pred8x8l_down_left_8_ssse3;

h->pred8x8l [DIAG_DOWN_RIGHT_PRED ] = ff_pred8x8l_down_right_8_ssse3;

h->pred8x8l [VERT_RIGHT_PRED ] = ff_pred8x8l_vertical_right_8_ssse3;

h->pred8x8l [VERT_LEFT_PRED ] = ff_pred8x8l_vertical_left_8_ssse3;

h->pred8x8l [HOR_UP_PRED ] = ff_pred8x8l_horizontal_up_8_ssse3;

h->pred8x8l [HOR_DOWN_PRED ] = ff_pred8x8l_horizontal_down_8_ssse3;

if (codec_id == AV_CODEC_ID_VP7 || codec_id == AV_CODEC_ID_VP8) {

h->pred8x8 [PLANE_PRED8x8 ] = ff_pred8x8_tm_vp8_8_ssse3;

h->pred4x4 [TM_VP8_PRED ] = ff_pred4x4_tm_vp8_8_ssse3;

} else {

if (chroma_format_idc <= 1)

h->pred8x8 [PLANE_PRED8x8] = ff_pred8x8_plane_8_ssse3;

if (codec_id == AV_CODEC_ID_SVQ3) {

h->pred16x16[PLANE_PRED8x8] = ff_pred16x16_plane_svq3_8_ssse3;

} else if (codec_id == AV_CODEC_ID_RV40) {

h->pred16x16[PLANE_PRED8x8] = ff_pred16x16_plane_rv40_8_ssse3;

} else {

h->pred16x16[PLANE_PRED8x8] = ff_pred16x16_plane_h264_8_ssse3;

}

}

}

} else if (bit_depth == 10) {

if (EXTERNAL_MMXEXT(cpu_flags)) {

h->pred4x4[DC_PRED ] = ff_pred4x4_dc_10_mmxext;

h->pred4x4[HOR_UP_PRED ] = ff_pred4x4_horizontal_up_10_mmxext;

if (chroma_format_idc <= 1)

h->pred8x8[DC_PRED8x8 ] = ff_pred8x8_dc_10_mmxext;

h->pred8x8l[DC_128_PRED ] = ff_pred8x8l_128_dc_10_mmxext;

h->pred16x16[DC_PRED8x8 ] = ff_pred16x16_dc_10_mmxext;

h->pred16x16[TOP_DC_PRED8x8 ] = ff_pred16x16_top_dc_10_mmxext;

h->pred16x16[DC_128_PRED8x8 ] = ff_pred16x16_128_dc_10_mmxext;

h->pred16x16[LEFT_DC_PRED8x8 ] = ff_pred16x16_left_dc_10_mmxext;

h->pred16x16[VERT_PRED8x8 ] = ff_pred16x16_vertical_10_mmxext;

h->pred16x16[HOR_PRED8x8 ] = ff_pred16x16_horizontal_10_mmxext;

}

if (EXTERNAL_SSE2(cpu_flags)) {

h->pred4x4[DIAG_DOWN_LEFT_PRED ] = ff_pred4x4_down_left_10_sse2;

h->pred4x4[DIAG_DOWN_RIGHT_PRED] = ff_pred4x4_down_right_10_sse2;

h->pred4x4[VERT_LEFT_PRED ] = ff_pred4x4_vertical_left_10_sse2;

h->pred4x4[VERT_RIGHT_PRED ] = ff_pred4x4_vertical_right_10_sse2;

h->pred4x4[HOR_DOWN_PRED ] = ff_pred4x4_horizontal_down_10_sse2;

if (chroma_format_idc <= 1) {

h->pred8x8[DC_PRED8x8 ] = ff_pred8x8_dc_10_sse2;

h->pred8x8[TOP_DC_PRED8x8 ] = ff_pred8x8_top_dc_10_sse2;

h->pred8x8[PLANE_PRED8x8 ] = ff_pred8x8_plane_10_sse2;

h->pred8x8[VERT_PRED8x8 ] = ff_pred8x8_vertical_10_sse2;

h->pred8x8[HOR_PRED8x8 ] = ff_pred8x8_horizontal_10_sse2;

}

h->pred8x8l[VERT_PRED ] = ff_pred8x8l_vertical_10_sse2;

h->pred8x8l[HOR_PRED ] = ff_pred8x8l_horizontal_10_sse2;

h->pred8x8l[DC_PRED ] = ff_pred8x8l_dc_10_sse2;

h->pred8x8l[DC_128_PRED ] = ff_pred8x8l_128_dc_10_sse2;

h->pred8x8l[TOP_DC_PRED ] = ff_pred8x8l_top_dc_10_sse2;

h->pred8x8l[DIAG_DOWN_LEFT_PRED ] = ff_pred8x8l_down_left_10_sse2;

h->pred8x8l[DIAG_DOWN_RIGHT_PRED] = ff_pred8x8l_down_right_10_sse2;

h->pred8x8l[VERT_RIGHT_PRED ] = ff_pred8x8l_vertical_right_10_sse2;

h->pred8x8l[HOR_UP_PRED ] = ff_pred8x8l_horizontal_up_10_sse2;

h->pred16x16[DC_PRED8x8 ] = ff_pred16x16_dc_10_sse2;

h->pred16x16[TOP_DC_PRED8x8 ] = ff_pred16x16_top_dc_10_sse2;

h->pred16x16[DC_128_PRED8x8 ] = ff_pred16x16_128_dc_10_sse2;

h->pred16x16[LEFT_DC_PRED8x8 ] = ff_pred16x16_left_dc_10_sse2;

h->pred16x16[VERT_PRED8x8 ] = ff_pred16x16_vertical_10_sse2;

h->pred16x16[HOR_PRED8x8 ] = ff_pred16x16_horizontal_10_sse2;

}

if (EXTERNAL_SSSE3(cpu_flags)) {

h->pred4x4[DIAG_DOWN_RIGHT_PRED] = ff_pred4x4_down_right_10_ssse3;

h->pred4x4[VERT_RIGHT_PRED ] = ff_pred4x4_vertical_right_10_ssse3;

h->pred4x4[HOR_DOWN_PRED ] = ff_pred4x4_horizontal_down_10_ssse3;

h->pred8x8l[HOR_PRED ] = ff_pred8x8l_horizontal_10_ssse3;

h->pred8x8l[DIAG_DOWN_LEFT_PRED ] = ff_pred8x8l_down_left_10_ssse3;

h->pred8x8l[DIAG_DOWN_RIGHT_PRED] = ff_pred8x8l_down_right_10_ssse3;

h->pred8x8l[VERT_RIGHT_PRED ] = ff_pred8x8l_vertical_right_10_ssse3;

h->pred8x8l[HOR_UP_PRED ] = ff_pred8x8l_horizontal_up_10_ssse3;

}

if (EXTERNAL_AVX(cpu_flags)) {

h->pred4x4[DIAG_DOWN_LEFT_PRED ] = ff_pred4x4_down_left_10_avx;

h->pred4x4[DIAG_DOWN_RIGHT_PRED] = ff_pred4x4_down_right_10_avx;

h->pred4x4[VERT_LEFT_PRED ] = ff_pred4x4_vertical_left_10_avx;

h->pred4x4[VERT_RIGHT_PRED ] = ff_pred4x4_vertical_right_10_avx;

h->pred4x4[HOR_DOWN_PRED ] = ff_pred4x4_horizontal_down_10_avx;

h->pred8x8l[VERT_PRED ] = ff_pred8x8l_vertical_10_avx;

h->pred8x8l[HOR_PRED ] = ff_pred8x8l_horizontal_10_avx;

h->pred8x8l[DC_PRED ] = ff_pred8x8l_dc_10_avx;

h->pred8x8l[TOP_DC_PRED ] = ff_pred8x8l_top_dc_10_avx;

h->pred8x8l[DIAG_DOWN_RIGHT_PRED] = ff_pred8x8l_down_right_10_avx;

h->pred8x8l[DIAG_DOWN_LEFT_PRED ] = ff_pred8x8l_down_left_10_avx;

h->pred8x8l[VERT_RIGHT_PRED ] = ff_pred8x8l_vertical_right_10_avx;

h->pred8x8l[HOR_UP_PRED ] = ff_pred8x8l_horizontal_up_10_avx;

}

}

}

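The source code shows that ff_h264_pred_init_x86() first calls av_get_cpu_flags() to obtain cpu_flags, which describes the CPU's features, and then installs different functions according to cpu_flags. These include the {xxx}_mmx(), {xxx}_mmxext(), {xxx}_sse(), {xxx}_sse2(), {xxx}_ssse3(), and {xxx}_avx() variants, each built on a different set of assembly instructions.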
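One detail worth noticing in the listing is the order of the feature checks: they run from older to newer instruction sets, so when several versions of the same slot are available, the last assignment wins. The sketch below illustrates this with hypothetical flag values and a hypothetical DemoPredTable; it stores implementation names instead of function pointers to stay short, and it does not use the real av_get_cpu_flags() or AV_CPU_FLAG_* constants.

```c
/* Hypothetical feature bits, for illustration only. */
enum {
    DEMO_CPU_MMX   = 1 << 0,
    DEMO_CPU_SSE2  = 1 << 1,
    DEMO_CPU_SSSE3 = 1 << 2
};

/* Each slot records which implementation ended up installed. */
typedef struct DemoPredTable {
    const char *vertical;
    const char *dc;
} DemoPredTable;

static void demo_pred_init_cpu(DemoPredTable *t, int cpu_flags)
{
    /* C versions first, as the generic init function does. */
    t->vertical = "vertical_c";
    t->dc       = "dc_c";

    /* Older instruction sets are checked first... */
    if (cpu_flags & DEMO_CPU_MMX)
        t->vertical = "vertical_mmx";

    if (cpu_flags & DEMO_CPU_SSE2)
        t->dc = "dc_sse2";

    /* ...so a newer one, checked later, overrides the same slot. */
    if (cpu_flags & DEMO_CPU_SSSE3)
        t->dc = "dc_ssse3";
}
```

Not every slot has a version for every instruction set, which is why some slots in this sketch keep an older version (or the C version) even on a CPU that reports all flags.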

h264_decode_end()

h264_decode_end() closes FFmpeg's H.264 decoder. The function is defined in libavcodec\h264.c, as shown below.

// Close the decoder

static av_cold int h264_decode_end(AVCodecContext *avctx)

{

H264Context *h = avctx->priv_data;

// Remove the reference frames

ff_h264_remove_all_refs(h);

// Free the H264Context

ff_h264_free_context(h);

ff_h264_unref_picture(h, &h->cur_pic);

return 0;

}

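The function definition shows that h264_decode_end() calls ff_h264_remove_all_refs() to remove all reference frames, then calls ff_h264_free_context() to free the memory held by the H264Context, and finally releases the current picture with ff_h264_unref_picture(). The definitions of the first two functions are shown below.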

ff_h264_remove_all_refs()

ff_h264_remove_all_refs() is defined as follows.

//Remove the reference frames

void ff_h264_remove_all_refs(H264Context *h)

{

int i;

//Loop 16 times

//Long-term reference frames

for (i = 0; i < 16; i++) {

remove_long(h, i, 0);

}

assert(h->long_ref_count == 0);

//Short-term reference frames

for (i = 0; i < h->short_ref_count; i++) {

unreference_pic(h, h->short_ref[i], 0);

h->short_ref[i] = NULL;

}

h->short_ref_count = 0;

memset(h->default_ref_list, 0, sizeof(h->default_ref_list));

memset(h->ref_list, 0, sizeof(h->ref_list));

}

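As the definition shows, ff_h264_remove_all_refs() calls remove_long() to release the long-term reference frames and unreference_pic() to release the short-term reference frames. It then clears default_ref_list and ref_list.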
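The two loops treat their lists differently, which a simplified sketch makes easier to see. It assumes, as the fixed 16-iteration loop suggests, that the long-term list is indexed by slot and may contain holes, while the short-term list is packed into its first short_ref_count entries. DemoPicture, DemoRefState and demo_remove_all_refs() are hypothetical names, and "unreferencing" is reduced here to clearing a flag, which is simpler than what remove_long() and unreference_pic() do.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define DEMO_MAX_REFS 16

/* Hypothetical picture: nonzero 'reference' means "still used as a reference". */
typedef struct DemoPicture {
    int reference;
} DemoPicture;

/* Hypothetical reference state, loosely modelled on the fields used above. */
typedef struct DemoRefState {
    DemoPicture *long_ref[DEMO_MAX_REFS];  /* sparse: any slot may be NULL */
    int          long_ref_count;
    DemoPicture *short_ref[DEMO_MAX_REFS]; /* packed: slots 0..short_ref_count-1 */
    int          short_ref_count;
} DemoRefState;

static void demo_remove_all_refs(DemoRefState *s)
{
    int i;

    /* The long-term list can have holes, so every slot is visited. */
    for (i = 0; i < DEMO_MAX_REFS; i++) {
        if (s->long_ref[i]) {
            s->long_ref[i]->reference = 0;
            s->long_ref[i] = NULL;
            s->long_ref_count--;
        }
    }
    assert(s->long_ref_count == 0);

    /* The short-term list is packed, so only the used prefix is visited. */
    for (i = 0; i < s->short_ref_count; i++) {
        s->short_ref[i]->reference = 0;
        s->short_ref[i] = NULL;
    }
    s->short_ref_count = 0;
}
```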

ff_h264_free_context()

ff_h264_free_context() is defined as follows.

//Free the H264Context

av_cold void ff_h264_free_context(H264Context *h)

{

int i;

//Free the various buffers

ff_h264_free_tables(h, 1); // FIXME cleanup init stuff perhaps

//Free the SPS buffers

for (i = 0; i < MAX_SPS_COUNT; i++)

av_freep(h->sps_buffers + i);

//Free the PPS buffers

for (i = 0; i < MAX_PPS_COUNT; i++)

av_freep(h->pps_buffers + i);

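}

As the definition shows, ff_h264_free_context() calls ff_h264_free_tables() to free the various buffers in the H264Context, and then frees the SPS and PPS buffers. The definition of ff_h264_free_tables() follows.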

ff_h264_free_tables()

ff_h264_free_tables() is defined as follows.

//Free the various buffers

void ff_h264_free_tables(H264Context *h, int free_rbsp)

{

int i;

H264Context *hx;

av_freep(&h->intra4x4_pred_mode);

av_freep(&h->chroma_pred_mode_table);

av_freep(&h->cbp_table);

av_freep(&h->mvd_table[0]);

av_freep(&h->mvd_table[1]);

av_freep(&h->direct_table);

av_freep(&h->non_zero_count);

av_freep(&h->slice_table_base);

h->slice_table = NULL;

av_freep(&h->list_counts);

av_freep(&h->mb2b_xy);

av_freep(&h->mb2br_xy);

av_buffer_pool_uninit(&h->qscale_table_pool);

av_buffer_pool_uninit(&h->mb_type_pool);

av_buffer_pool_uninit(&h->motion_val_pool);

av_buffer_pool_uninit(&h->ref_index_pool);

if (free_rbsp && h->DPB) {

for (i = 0; i < H264_MAX_PICTURE_COUNT; i++)

ff_h264_unref_picture(h, &h->DPB[i]);

memset(h->delayed_pic, 0, sizeof(h->delayed_pic));

av_freep(&h->DPB);

} else if (h->DPB) {

for (i = 0; i < H264_MAX_PICTURE_COUNT; i++)

h->DPB[i].needs_realloc = 1;

}

h->cur_pic_ptr = NULL;

for (i = 0; i < H264_MAX_THREADS; i++) {

hx = h->thread_context[i];

if (!hx)

continue;

av_freep(&hx->top_borders[1]);

av_freep(&hx->top_borders[0]);

av_freep(&hx->bipred_scratchpad);

av_freep(&hx->edge_emu_buffer);

av_freep(&hx->dc_val_base);

av_freep(&hx->er.mb_index2xy);

av_freep(&hx->er.error_status_table);

av_freep(&hx->er.er_temp_buffer);

av_freep(&hx->er.mbintra_table);

av_freep(&hx->er.mbskip_table);

if (free_rbsp) {

av_freep(&hx->rbsp_buffer[1]);

av_freep(&hx->rbsp_buffer[0]);

hx->rbsp_buffer_size[0] = 0;

hx->rbsp_buffer_size[1] = 0;

}

if (i)

av_freep(&h->thread_context[i]);

}

}

As shown, ff_h264_free_tables() calls av_freep() and related functions to free the individual buffers in the H264Context.
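Every call above passes the address of a pointer rather than the pointer itself. The idea is that the helper can free the buffer and also reset the pointer to NULL, so calling the cleanup a second time is harmless. Below is a minimal sketch of that idiom. demo_freep() is a hypothetical stand-in written with plain free(); it is not FFmpeg's av_freep(), and DemoTables is a made-up two-field struct.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for a "free and reset" helper.
 * 'arg' is the ADDRESS of a pointer, passed as void * so that
 * any pointer type can be used without a cast. */
static void demo_freep(void *arg)
{
    void *val;
    void *null_ptr = NULL;

    memcpy(&val, arg, sizeof(val));           /* read the stored pointer */
    memcpy(arg, &null_ptr, sizeof(null_ptr)); /* reset it to NULL        */
    free(val);                                /* free(NULL) is a no-op   */
}

/* Hypothetical context with two buffers, freed the same way as above. */
typedef struct DemoTables {
    unsigned char *cbp_table;
    unsigned char *direct_table;
} DemoTables;

static void demo_free_tables(DemoTables *t)
{
    demo_freep(&t->cbp_table);
    demo_freep(&t->direct_table);
}
```

With this idiom the caller never holds a dangling pointer after cleanup, which matters in a function like ff_h264_free_tables() that can be reached more than once for the same context.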

h264_decode_frame()

h264_decode_frame() decodes one frame of picture data. The function is defined in libavcodec\h264.c, as shown below.

//H.264 decoder: decode

static int h264_decode_frame(AVCodecContext *avctx, void *data,

int *got_frame, AVPacket *avpkt)

{

//Assignment. buf corresponds to the data of the AVPacket

const uint8_t *buf = avpkt->data;

int buf_size = avpkt->size;

//Points to the priv_data of the AVCodecContext

H264Context *h = avctx->priv_data;

AVFrame *pict = data;

int buf_index = 0;

H264Picture *out;

int i, out_idx;

int ret;

h->flags = avctx->flags;

/* reset data partitioning here, to ensure GetBitContexts from previous

* packets do not get used. */

h->data_partitioning = 0;

/* end of stream, output what is still in the buffers */

// Reached when flushing the decoder; the input is then an empty AVPacket =====================

if (buf_size == 0) {

out:

h->cur_pic_ptr = NULL;

h->first_field = 0;

// FIXME factorize this with the output code below

//out is the picture to output; it comes from h->delayed_pic[]

//Initialize

out = h->delayed_pic[0];

out_idx = 0;

for (i = 1;

h->delayed_pic[i] &&

!h->delayed_pic[i]->f.key_frame &&

!h->delayed_pic[i]->mmco_reset;

i++)

if (h->delayed_pic[i]->poc < out->poc) {

//out is the picture to output; it comes from h->delayed_pic[]

//Examine the entries one by one

out = h->delayed_pic[i];

out_idx = i;

}

for (i = out_idx; h->delayed_pic[i]; i++)

h->delayed_pic[i] = h->delayed_pic[i + 1];

if (out) {

out->reference &= ~DELAYED_PIC_REF;

//Output

//out is written to pict

//that is, H264Picture to AVFrame

ret = output_frame(h, pict, out);

if (ret < 0)

return ret;

*got_frame = 1;

}

return buf_index;

}

//=============================================================

if (h->is_avc && av_packet_get_side_data(avpkt, AV_PKT_DATA_NEW_EXTRADATA, NULL)) {

int side_size;

uint8_t *side = av_packet_get_side_data(avpkt, AV_PKT_DATA_NEW_EXTRADATA, &side_size);

if (is_extra(side, side_size))

ff_h264_decode_extradata(h, side, side_size);

}

if(h->is_avc && buf_size >= 9 && buf[0]==1 && buf[2]==0 && (buf[4]&0xFC)==0xFC && (buf[5]&0x1F) && buf[8]==0x67){

if (is_extra(buf, buf_size))

return ff_h264_decode_extradata(h, buf, buf_size);

}

//Key step: the main function for decoding NALUs

//=============================================================

buf_index = decode_nal_units(h, buf, buf_size, 0);

//=============================================================

if (buf_index < 0)

return AVERROR_INVALIDDATA;

if (!h->cur_pic_ptr && h->nal_unit_type == NAL_END_SEQUENCE) {

av_assert0(buf_index <= buf_size);

goto out;

}

if (!(avctx->flags2 & CODEC_FLAG2_CHUNKS) && !h->cur_pic_ptr) {

if (avctx->skip_frame >= AVDISCARD_NONREF ||

buf_size >= 4 && !memcmp("Q264", buf, 4))

return buf_size;

av_log(avctx, AV_LOG_ERROR, "no frame!\n");

return AVERROR_INVALIDDATA;

}

if (!(avctx->flags2 & CODEC_FLAG2_CHUNKS) ||

(h->mb_y >= h->mb_height && h->mb_height)) {

if (avctx->flags2 & CODEC_FLAG2_CHUNKS)

decode_postinit(h, 1);

ff_h264_field_end(h, 0);

/* Wait for second field. */

//Set got_frame to 0

*got_frame = 0;

if (h->next_output_pic && (

h->next_output_pic->recovered)) {

if (!h->next_output_pic->recovered)

h->next_output_pic->f.flags |= AV_FRAME_FLAG_CORRUPT;

//Output the frame

//that is, H264Picture to AVFrame

ret = output_frame(h, pict, h->next_output_pic);

if (ret < 0)

return ret;

//Set got_frame to 1

*got_frame = 1;

if (CONFIG_MPEGVIDEO) {

ff_print_debug_info2(h->avctx, pict, h->er.mbskip_table,

h->next_output_pic->mb_type,

h->next_output_pic->qscale_table,

h->next_output_pic->motion_val,

&h->low_delay,

h->mb_width, h->mb_height, h->mb_stride, 1);

}

}

}

assert(pict->buf[0] || !*got_frame);

return get_consumed_bytes(buf_index, buf_size);

}

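As the source shows, h264_decode_frame() handles two cases, depending on whether the input AVPacket is empty (buf_size == 0):

(1) If the input AVPacket is empty, it calls output_frame() to output an H264Picture from the delayed_pic[] array, that is, a frame still buffered in the decoder (this is what is usually called "flushing the decoder").

(2) If the input AVPacket is not empty, it first calls decode_nal_units() to decode the packet's data, then calls output_frame() to output the decoded video frame (note that, because of frame reordering and similar factors, the output AVFrame does not necessarily correspond to the input AVPacket).

The next section looks at decode_nal_units(), the function used to decode the compressed data.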
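Before moving on, the selection logic of the flush branch in case (1) can be sketched in isolation: among the buffered pictures, up to the next key frame or MMCO reset, pick the one with the smallest POC (picture order count) and close the gap it leaves in the array. The sketch assumes a NULL-terminated array, as the loops in h264_decode_frame() do. DemoPicture and demo_pop_next_output() are hypothetical names, and the early return for an empty array is an addition made here for safety.

```c
#include <assert.h>
#include <stddef.h>

#define DEMO_MAX_DELAYED 8

/* Hypothetical picture with only the fields the selection needs. */
typedef struct DemoPicture {
    int poc;        /* picture order count, i.e. display order */
    int key_frame;
    int mmco_reset;
} DemoPicture;

/* Remove and return the next picture to output from a NULL-terminated
 * array, or NULL if the array is empty. */
static DemoPicture *demo_pop_next_output(DemoPicture *delayed[])
{
    DemoPicture *out = delayed[0];
    int out_idx = 0;
    int i;

    if (!out)
        return NULL;

    /* Scan forward until the end, a key frame or an MMCO reset,
     * remembering the entry with the smallest POC. */
    for (i = 1;
         delayed[i] && !delayed[i]->key_frame && !delayed[i]->mmco_reset;
         i++) {
        if (delayed[i]->poc < out->poc) {
            out     = delayed[i];
            out_idx = i;
        }
    }

    /* Shift the tail down by one; the terminating NULL moves with it. */
    for (i = out_idx; delayed[i]; i++)
        delayed[i] = delayed[i + 1];

    return out;
}
```

Calling the function repeatedly until it returns NULL drains the buffer in display order, which corresponds to an application sending empty packets until the decoder stops returning frames.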

decode_nal_units()

decode_nal_units() decodes NALUs. The function is defined in libavcodec\h264.c, as shown below.

//The main function for decoding NALUs

//In h264_decode_frame():

//buf is normally AVPacket->data

//buf_size is normally AVPacket->size

static int decode_nal_units(H264Context *h, const uint8_t *buf, int buf_size,

int parse_extradata)

{

AVCodecContext *const avctx = h->avctx;

H264Context *hx; ///< thread context

int buf_index;

unsigned context_count;

int next_avc;

int nals_needed = 0; ///< number of NALs that need decoding before the next frame thread starts

int nal_index;

int idr_cleared=0;

int ret = 0;

h->nal_unit_type= 0;

if(!h->slice_context_count)

h->slice_context_count= 1;

h->max_contexts = h->slice_context_count;

if (!(avctx->flags2 & CODEC_FLAG2_CHUNKS)) {

h->current_slice = 0;

if (!h->first_field)

h->cur_pic_ptr = NULL;

ff_h264_reset_sei(h);

}

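//Difference between AVC1 and H264:

//AVC1: "H.264 bitstream without start codes." It does not carry the 0x00000001 start code. H.264 in FLV/MKV/MOV is of this kind

//H264: "H.264 bitstream with start codes." It carries the 0x00000001 start code. Raw H.264 streams and H.264 in MPEG-TS are of this kind

//

//The VLC player shows which format a file uses. After opening a video, the menu [Tools] / [Codec Information] shows the exact format under [Codec], for example:

//Codec: H264 – MPEG-4 AVC (part 10) (avc1)

//Codec: H264 – MPEG-4 AVC (part 10) (h264)

//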

if (h->nal_length_size == 4) {

if (buf_size > 8 && AV_RB32(buf) == 1 && AV_RB32(buf+5) > (unsigned)buf_size) {

//The first 4 bytes are the start code 0x00000001

h->is_avc = 0;

}else if(buf_size > 3 && AV_RB32(buf) > 1 && AV_RB32(buf) <= (unsigned)buf_size)

//The first 4 bytes are the NALU length

h->is_avc = 1;

}

if (avctx->active_thread_type & FF_THREAD_FRAME)

nals_needed = get_last_needed_nal(h, buf, buf_size);

{

buf_index = 0;

context_count = 0;

next_avc = h->is_avc ? 0 : buf_size;

nal_index = 0;

for (;;) {

int consumed;

int dst_length;

int bit_length;

const uint8_t *ptr;

int nalsize = 0;

int err;

if (buf_index >= next_avc) {

nalsize = get_avc_nalsize(h, buf, buf_size, &buf_index);

if (nalsize < 0)

break;

next_avc = buf_index + nalsize;

} else {

buf_index = find_start_code(buf, buf_size, buf_index, next_avc);

if (buf_index >= buf_size)

break;

if (buf_index >= next_avc)

continue;

}

hx = h->thread_context[context_count];

//Parse the NAL (obtains nal_unit_type and related information)

ptr = ff_h264_decode_nal(hx, buf + buf_index, &dst_length,

&consumed, next_avc - buf_index);

if (!ptr || dst_length < 0) {

ret = -1;

goto end;

}

bit_length = get_bit_length(h, buf, ptr, dst_length,

buf_index + consumed, next_avc);

if (h->avctx->debug & FF_DEBUG_STARTCODE)

av_log(h->avctx, AV_LOG_DEBUG,

"NAL %d/%d at %d/%d length %d\n",

hx->nal_unit_type, hx->nal_ref_idc, buf_index, buf_size, dst_length);

if (h->is_avc && (nalsize != consumed) && nalsize)

av_log(h->avctx, AV_LOG_DEBUG,

"AVC: Consumed only %d bytes instead of %d\n",

consumed, nalsize);

buf_index += consumed;

nal_index++;

if (avctx->skip_frame >= AVDISCARD_NONREF &&

h->nal_ref_idc == 0 &&

h->nal_unit_type != NAL_SEI)

continue;

again:

if ( !(avctx->active_thread_type & FF_THREAD_FRAME)

|| nals_needed >= nal_index)

h->au_pps_id = -1;

/* Ignore per frame NAL unit type during extradata

* parsing. Decoding slices is not possible in codec init

* with frame-mt */

if (parse_extradata) {

switch (hx->nal_unit_type) {

case NAL_IDR_SLICE:

case NAL_SLICE:

case NAL_DPA:

case NAL_DPB:

case NAL_DPC:

av_log(h->avctx, AV_LOG_WARNING,

"Ignoring NAL %d in global header/extradata\n",

hx->nal_unit_type);

// fall through to next case

case NAL_AUXILIARY_SLICE:

hx->nal_unit_type = NAL_FF_IGNORE;

}

}

err = 0;

//Call different functions depending on the NALU type

switch (hx->nal_unit_type) {

//IDR frame

case NAL_IDR_SLICE:

if ((ptr[0] & 0xFC) == 0x98) {

av_log(h->avctx, AV_LOG_ERROR, "Invalid inter IDR frame\n");

h->next_outputed_poc = INT_MIN;

ret = -1;

goto end;

}

if (h->nal_unit_type != NAL_IDR_SLICE) {

av_log(h->avctx, AV_LOG_ERROR,

"Invalid mix of idr and non-idr slices\n");

ret = -1;

goto end;

}

if(!idr_cleared)

idr(h); // FIXME ensure we don't lose some frames if there is reordering

idr_cleared = 1;

h->has_recovery_point = 1;

//Note: there is no break here, so execution falls through to NAL_SLICE

case NAL_SLICE:

init_get_bits(&hx->gb, ptr, bit_length);

hx->intra_gb_ptr =

hx->inter_gb_ptr = &hx->gb;

hx->data_partitioning = 0;

//Decode the slice header

if ((err = ff_h264_decode_slice_header(hx, h)))

break;

if (h->sei_recovery_frame_cnt >= 0) {

if (h->frame_num != h->sei_recovery_frame_cnt || hx->slice_type_nos != AV_PICTURE_TYPE_I)

h->valid_recovery_point = 1;

if ( h->recovery_frame < 0

|| ((h->recovery_frame - h->frame_num) & ((1 << h->sps.log2_max_frame_num)-1)) > h->sei_recovery_frame_cnt) {

h->recovery_frame = (h->frame_num + h->sei_recovery_frame_cnt) &

((1 << h->sps.log2_max_frame_num) - 1);

if (!h->valid_recovery_point)

h->recovery_frame = h->frame_num;

}

}

h->cur_pic_ptr->f.key_frame |=

(hx->nal_unit_type == NAL_IDR_SLICE);

if (hx->nal_unit_type == NAL_IDR_SLICE ||

h->recovery_frame == h->frame_num) {

h->recovery_frame = -1;

h->cur_pic_ptr->recovered = 1;

}

// If we have an IDR, all frames after it in decoded order are

// "recovered".

if (hx->nal_unit_type == NAL_IDR_SLICE)

h->frame_recovered |= FRAME_RECOVERED_IDR;

h->frame_recovered |= 3*!!(avctx->flags2 & CODEC_FLAG2_SHOW_ALL);

h->frame_recovered |= 3*!!(avctx->flags & CODEC_FLAG_OUTPUT_CORRUPT);

#if 1

h->cur_pic_ptr->recovered |= h->frame_recovered;

#else

h->cur_pic_ptr->recovered |= !!(h->frame_recovered & FRAME_RECOVERED_IDR);

#endif

if (h->current_slice == 1) {

if (!(avctx->flags2 & CODEC_FLAG2_CHUNKS))

decode_postinit(h, nal_index >= nals_needed);

if (h->avctx->hwaccel &&

(ret = h->avctx->hwaccel->start_frame(h->avctx, NULL, 0)) < 0)

return ret;

if (CONFIG_H264_VDPAU_DECODER &&

h->avctx->codec->capabilities & CODEC_CAP_HWACCEL_VDPAU)

ff_vdpau_h264_picture_start(h);

}

if (hx->redundant_pic_count == 0) {

if (avctx->hwaccel) {

ret = avctx->hwaccel->decode_slice(avctx,

&buf[buf_index - consumed],

consumed);

if (ret < 0)

return ret;

} else if (CONFIG_H264_VDPAU_DECODER &&

h->avctx->codec->capabilities & CODEC_CAP_HWACCEL_VDPAU) {

ff_vdpau_add_data_chunk(h->cur_pic_ptr->f.data[0],

start_code,

sizeof(start_code));

ff_vdpau_add_data_chunk(h->cur_pic_ptr->f.data[0],

&buf[buf_index - consumed],

consumed);

} else

context_count++;

}

break;

case NAL_DPA:

if (h-&g