Tomcat load balancing and cluster configuration with Apache and nginx proxies, explained

阿新 • Published: 2019-01-25

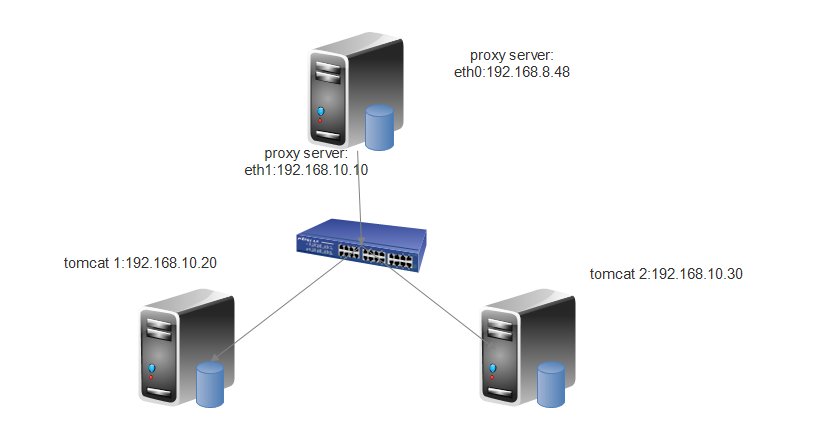

Lab environment:

1. Proxying with nginx

nginx proxy:

eth0: 192.168.8.48

vmnet2 eth1: 192.168.10.10

tomcat server1:

vmnet2 eth0: 192.168.10.20

tomcat server2:

vmnet2 eth0: 192.168.10.30

# yum install -y nginx-1.8.1-1.el6.ngx.x86_64.rpm

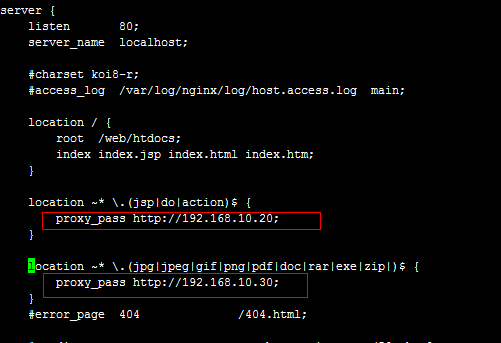

# vim /etc/nginx/conf.d/default.conf

location / {

root /web/htdocs;

index index.jsp index.html index.htm;

}

location ~* \.(jsp|do|action)$ {

proxy_pass http://192.168.10.20;

}

location ~* \.(jpg|jpeg|gif|png|pdf|doc|rar|exe|zip)$ {

proxy_pass http://192.168.10.30;

}
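nginx checks regex locations (`~*` means case-insensitive) in the order they appear and uses the first one that matches. If none matches, the request falls back to the longest matching prefix location, which here is `location /`. The Python sketch below models only that routing decision for this config. It is not nginx code. The `local:/web/htdocs` marker for static files is an illustrative assumption. The image rule omits the trailing `|`, because an empty alternative would also match any path that ends in `.`.

```python
import re

# Regex locations from the config above, in file order; "~*" -> re.IGNORECASE.
REGEX_LOCATIONS = [
    (re.compile(r"\.(jsp|do|action)$", re.IGNORECASE), "http://192.168.10.20"),
    (re.compile(r"\.(jpg|jpeg|gif|png|pdf|doc|rar|exe|zip)$", re.IGNORECASE),
     "http://192.168.10.30"),
]


def route(uri: str) -> str:
    """Simplified model: first matching regex location wins, else 'location /'."""
    path = uri.split("?", 1)[0]  # locations match the path, not the query string
    for pattern, upstream in REGEX_LOCATIONS:
        if pattern.search(path):
            return upstream
    return "local:/web/htdocs"  # served from root /web/htdocs by location /


if __name__ == "__main__":
    for uri in ["/index.jsp", "/img/LOGO.PNG", "/about.html", "/login.do?x=1"]:
        print(uri, "->", route(uri))
```

With the original `zip|)` alternative, a request such as `/file.` would also have been proxied to 192.168.10.30.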

2. Proxying with Apache

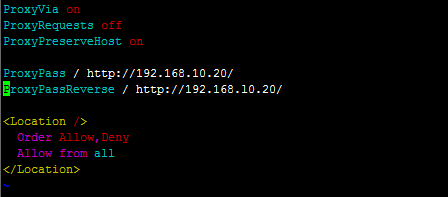

① Proxying over HTTP

# cd /etc/httpd/conf.d/

# vim mod_proxy.conf

Add the following:

ProxyRequests off

ProxyPreserveHost on

ProxyPass / http://192.168.10.20/

ProxyPassReverse / http://192.168.10.20/

<Location />

Order Allow,Deny

Allow from all

</Location>
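`ProxyPassReverse` rewrites the `Location` header (and related headers) in backend redirect responses. As a result, a redirect issued by Tomcat points back at the proxy instead of at 192.168.10.20. Below is a simplified model of that prefix substitution. The function name is hypothetical, and Apache also handles `Content-Location` and `URI` headers, which this sketch ignores.

```python
def reverse_location(location: str, backend_prefix: str, public_prefix: str) -> str:
    """Rewrite a backend redirect so the client sees the proxy's address.

    Simplified model of ProxyPassReverse: only a matching prefix is swapped;
    any other header value is returned unchanged.
    """
    if location.startswith(backend_prefix):
        return public_prefix + location[len(backend_prefix):]
    return location


if __name__ == "__main__":
    print(reverse_location("http://192.168.10.20/testapp/login.jsp",
                           "http://192.168.10.20/", "http://192.168.8.48/"))
```

The model also shows why a mistyped backend prefix (for example `l0` instead of `10`) silently disables the rewrite. The prefix never matches, so clients get redirected straight to the internal address.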

② Proxying over AJP

ProxyVia on

ProxyRequests off

ProxyPreserveHost on

ProxyPass / ajp://192.168.10.20/

ProxyPassReverse / ajp://192.168.10.20/

<Location />

Order Allow,Deny

Allow from all

</Location>
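The `ajp://` URL has no explicit port, so the proxy relies on the AJP default of 8009. Before switching, it can help to confirm from the proxy host that Tomcat's AJP connector is actually listening. A minimal sketch, assuming the connector still uses port 8009 in server.xml (`port_open` is a hypothetical helper):

```python
import socket


def port_open(host: str, port: int = 8009, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection raises OSError (incl. timeouts and refusals) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `port_open("192.168.10.20")` should return `True` once the Tomcat AJP connector is up. This only proves the TCP port accepts connections; it does not speak the AJP protocol.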

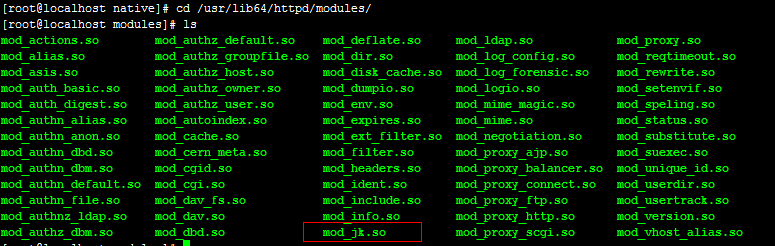

②. Configuring Apache to proxy to Tomcat via mod_jk

a. Install apxs (provided by httpd-devel) and build mod_jk

# yum install -y httpd-devel

# tar xf tomcat-connectors-1.2.37-src.tar.gz

# cd tomcat-connectors-1.2.37-src/native

# ./configure --with-apxs=/usr/sbin/apxs

# make && make install

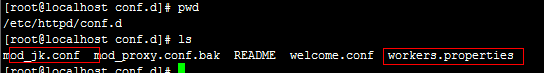

Configure the related files

# cd /etc/httpd/conf.d

# vim mod_jk.conf

LoadModule jk_module modules/mod_jk.so

JkWorkersFile /etc/httpd/conf.d/workers.properties

JkLogFile logs/mod_jk.log

JkLogLevel notice

JkMount /* TomcatA

JkMount /status/ statA

# vim workers.properties

worker.list=TomcatA,statA

worker.TomcatA.type=ajp13

worker.TomcatA.port=8009

worker.TomcatA.host=192.168.10.20

worker.TomcatA.lbfactor=1

worker.statA.type=status
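A one-letter typo in a `worker.<name>.<property>` key silently creates a new, unused worker. Because mod_jk falls back to its default AJP port 8009, a misspelled `port` line can go unnoticed. The hypothetical checker below (not a mod_jk tool) groups the properties by worker. It then flags workers that are never referenced from `worker.list` or an lb worker's `balance_workers`, and referenced workers that have no `type`.

```python
from collections import defaultdict


def parse_workers(text: str) -> dict:
    """Group 'worker.<name>.<prop>=value' lines by worker name."""
    workers = defaultdict(dict)
    listed = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # '#' starts a comment
        if not line or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        parts = key.split(".")
        if key == "worker.list":
            listed = [w.strip() for w in value.split(",") if w.strip()]
        elif len(parts) >= 3 and parts[0] == "worker":
            workers[parts[1]][".".join(parts[2:])] = value
    return {"list": listed, "workers": dict(workers)}


def find_problems(text: str) -> list:
    """Report unreferenced workers (likely typos) and workers without a type."""
    cfg = parse_workers(text)
    known = set(cfg["list"])
    # Members of an lb worker are referenced through balance_workers.
    for props in cfg["workers"].values():
        for member in props.get("balance_workers", "").split(","):
            if member.strip():
                known.add(member.strip())
    problems = []
    for name in sorted(cfg["workers"]):
        if name not in known:
            problems.append(f"worker '{name}' is never referenced (typo?)")
    for name in sorted(known):
        if "type" not in cfg["workers"].get(name, {}):
            problems.append(f"worker '{name}' has no type")
    return problems
```

Running `find_problems` on a workers.properties that contains `worker.ToccatA.port=8009` reports the stray `ToccatA` worker.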

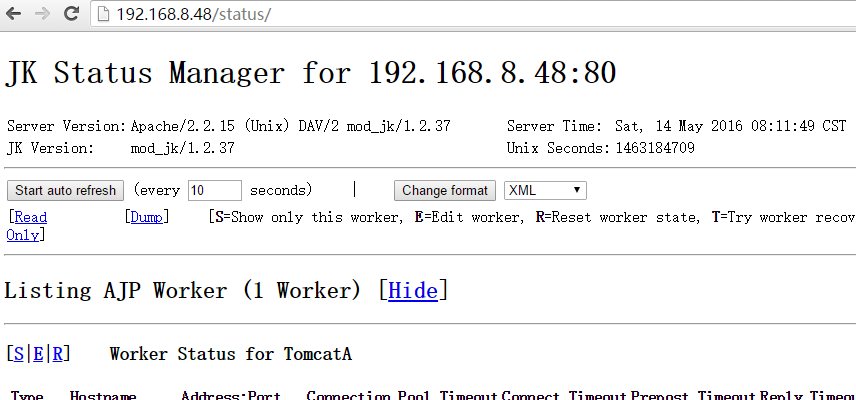

Visit the following address to see the status of tomcat server1:

http://192.168.8.48/status/

③ Configuring load balancing with mod_jk

Configure the proxy server

# cd /etc/httpd/conf.d

# cat mod_jk.conf

LoadModule jk_module modules/mod_jk.so

JkWorkersFile /etc/httpd/conf.d/workers.properties

JkLogFile logs/mod_jk.log

JkLogLevel notice

JkMount /* lbcA

JkMount /status/ statA

# cat workers.properties

worker.list=lbcA,statA

worker.TomcatA.type=ajp13

worker.TomcatA.port=8009

worker.TomcatA.host=192.168.10.20

worker.TomcatA.lbfactor=1

worker.TomcatB.type=ajp13

worker.TomcatB.port=8009

worker.TomcatB.host=192.168.10.30

worker.TomcatB.lbfactor=1

worker.lbcA.type=lb

worker.lbcA.sticky_session=1

worker.lbcA.balance_workers=TomcatA,TomcatB

worker.statA.type=status
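Hand-editing this file for every new backend invites typos, and the backend worker names must also match each Tomcat's `jvmRoute` for sticky sessions to work. A small hypothetical generator (`render_lb_workers` is not part of mod_jk) that emits the same lines as the configuration above from a `name -> (host, lbfactor)` mapping:

```python
def render_lb_workers(backends: dict, lb_name: str = "lbcA", status_name: str = "statA",
                      port: int = 8009, sticky: bool = True) -> str:
    """Render a mod_jk workers.properties with one lb worker over AJP backends.

    backends maps worker name (should equal that Tomcat's jvmRoute) -> (host, lbfactor).
    """
    lines = [f"worker.list={lb_name},{status_name}"]
    for name, (host, factor) in backends.items():
        lines += [
            f"worker.{name}.type=ajp13",
            f"worker.{name}.port={port}",
            f"worker.{name}.host={host}",
            f"worker.{name}.lbfactor={factor}",
        ]
    lines += [
        f"worker.{lb_name}.type=lb",
        f"worker.{lb_name}.sticky_session={1 if sticky else 0}",
        f"worker.{lb_name}.balance_workers={','.join(backends)}",
        f"worker.{status_name}.type=status",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_lb_workers({"TomcatA": ("192.168.10.20", 1),
                             "TomcatB": ("192.168.10.30", 1)}), end="")
```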

Configure the backend Tomcat servers

# cd /usr/local/tomcat/webapps/

# mkdir testapp

# cd testapp/

# mkdir -pv WEB-INF/{classes,lib}

mkdir: created directory `WEB-INF'

mkdir: created directory `WEB-INF/classes'

mkdir: created directory `WEB-INF/lib'

# vim index.jsp

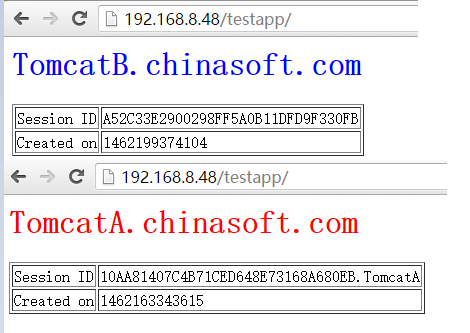

TomcatA server

index.jsp:

<%@ page language="java" %>

<html>

<head><title>TomcatA</title></head>

<body>

<h1><font color="red">TomcatA.chinasoft.com</font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("chinasoft.com","chinasoft.com"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

TomcatB server

index.jsp:

<%@ page language="java" %>

<html>

<head><title>TomcatB</title></head>

<body>

<h1><font color="blue">TomcatB.chinasoft.com</font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("chinasoft.com","chinasoft.com"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

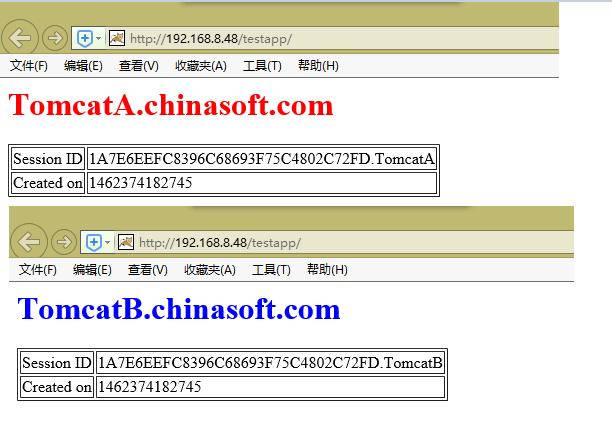

Test that each backend responds:

# curl http://192.168.10.20:8080/testapp/index.jsp

# curl http://192.168.10.30:8080/testapp/index.jsp
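When these checks are repeated through the balancer, it helps to pull out just the server name and session ID from the test page. Below is a minimal sketch that assumes the exact markup of the index.jsp above (`parse_test_page` is a hypothetical helper). The HTML could come from `curl` or from Python's `urllib.request.urlopen(url).read().decode()`.

```python
import re


def parse_test_page(html: str) -> tuple:
    """Return (server_name, session_id) from the testapp index.jsp output, or None parts."""
    server = re.search(r"<font[^>]*>([^<]+)</font>", html)
    # The JSP scriptlet between the two cells only leaves whitespace behind.
    session = re.search(r"<td>Session ID</td>\s*<td>([^<]+)</td>", html)
    return (server.group(1) if server else None,
            session.group(1) if session else None)
```

Calling it on successive responses from http://192.168.8.48/testapp/index.jsp shows which backend served each request and whether the session ID changed.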

Configuring session binding (sticky sessions; note that this undermines the load-balancing effect):

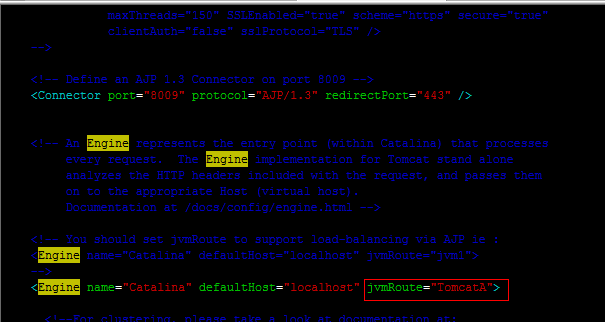

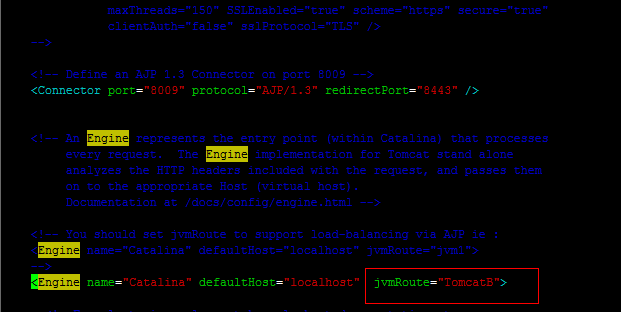

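To load-balance while also binding sessions, give the Engine container of every Tomcat instance a jvmRoute attribute. Its value must match the name used by the front-end scheduling module (the mod_jk worker name, or the route= value in mod_proxy).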

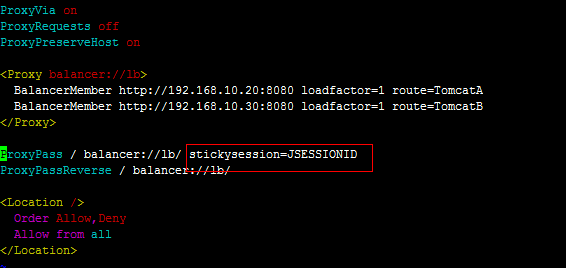

In addition, when mod_proxy handles load balancing with session binding, you must also set stickysession=JSESSIONID (in uppercase).

workers.properties:

worker.TomcatB.lbfactor=1

Define jvmRoute in the server.xml of each backend Tomcat server (TomcatA shown; use jvmRoute="TomcatB" on the second server):

<Engine name="Catalina" defaultHost="localhost" jvmRoute="TomcatA">
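With jvmRoute set, Tomcat appends `.<jvmRoute>` to each session ID, for example `4C1A2B3D.TomcatA`. The front end reads that suffix from the JSESSIONID cookie to send the client back to the same backend. Below is a simplified model of that decision, written as hypothetical helpers rather than mod_jk or mod_proxy code. It shows why the names must match exactly, including case.

```python
def route_of(session_id: str):
    """Return the '.<jvmRoute>' suffix of a Tomcat session ID, or None if absent."""
    sid, sep, route = session_id.rpartition(".")
    return route if sep and sid else None


def pick_backend(cookie_value, routes: dict, fallback):
    """Sticky choice: follow the session's route if it names a known backend,
    otherwise let the balancer choose (fallback is any zero-argument callable)."""
    if cookie_value:
        route = route_of(cookie_value)
        if route in routes:
            return routes[route]
    return fallback()


ROUTES = {"TomcatA": "http://192.168.10.20:8080",
          "TomcatB": "http://192.168.10.30:8080"}
```

A cookie ending in `.tomcata` does not match `TomcatA`, so the request is balanced normally and the session appears to be lost.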

④. Load balancing over HTTP with Apache mod_proxy:

# mv mod_jk.conf mod_jk.conf.bak

# mv mod_proxy.conf.bak mod_proxy.conf

# vim mod_proxy.conf

ProxyVia on

ProxyRequests off

ProxyPreserveHost on

<Proxy balancer://lb>

BalancerMember http://192.168.10.20:8080 loadfactor=1 route=TomcatA

BalancerMember http://192.168.10.30:8080 loadfactor=1 route=TomcatB

</Proxy>

ProxyPass / balancer://lb/

ProxyPassReverse / balancer://lb/

<Location />

Order Allow,Deny

Allow from all

</Location>
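With no `lbmethod` given, mod_proxy_balancer uses `byrequests`. Apache's documentation describes this as weighted request counting: on each request, every member's `lbstatus` grows by its `loadfactor`, the member with the highest `lbstatus` is chosen, and the chosen member's `lbstatus` is then reduced by the sum of all factors. The sketch below re-implements that published pseudocode. It ignores stickiness, member health and the module's shared-memory state.

```python
def byrequests(workers: dict, n: int) -> list:
    """Pick n backends by weighted request counting; workers maps name -> loadfactor."""
    lbstatus = {name: 0 for name in workers}
    total = sum(workers.values())
    picks = []
    for _ in range(n):
        candidate = None
        for name, factor in workers.items():
            lbstatus[name] += factor
            # Strict '>' keeps the earlier member on ties.
            if candidate is None or lbstatus[name] > lbstatus[candidate]:
                candidate = name
        lbstatus[candidate] -= total
        picks.append(candidate)
    return picks
```

With `loadfactor=1` on both members, as configured above, requests alternate strictly between TomcatA and TomcatB. With a 2:1 ratio, TomcatA gets two of every three requests.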

⑤. Configuring session persistence (sticky sessions)

ProxyVia on

ProxyRequests off

ProxyPreserveHost on

<Proxy balancer://lb>

BalancerMember http://192.168.10.20:8080 loadfactor=1 route=TomcatA

BalancerMember http://192.168.10.30:8080 loadfactor=1 route=TomcatB

</Proxy>

ProxyPass / balancer://lb/ stickysession=JSESSIONID

ProxyPassReverse / balancer://lb/

<Location />

Order Allow,Deny

Allow from all

</Location>

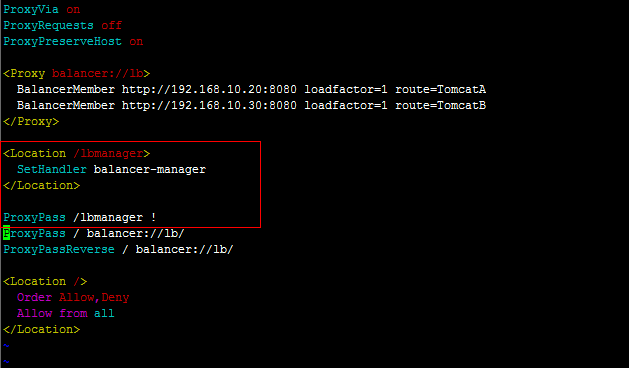

⑥. Configuring the management interface

ProxyVia on

ProxyRequests off

ProxyPreserveHost on

<Proxy balancer://lb>

BalancerMember http://192.168.10.20:8080 loadfactor=1 route=TomcatA

BalancerMember http://192.168.10.30:8080 loadfactor=1 route=TomcatB

</Proxy>

<Location /lbmanager>

SetHandler balancer-manager

</Location>

ProxyPass /lbmanager !

ProxyPass / balancer://lb/

ProxyPassReverse / balancer://lb/

<Location />

Order Allow,Deny

Allow from all

</Location>

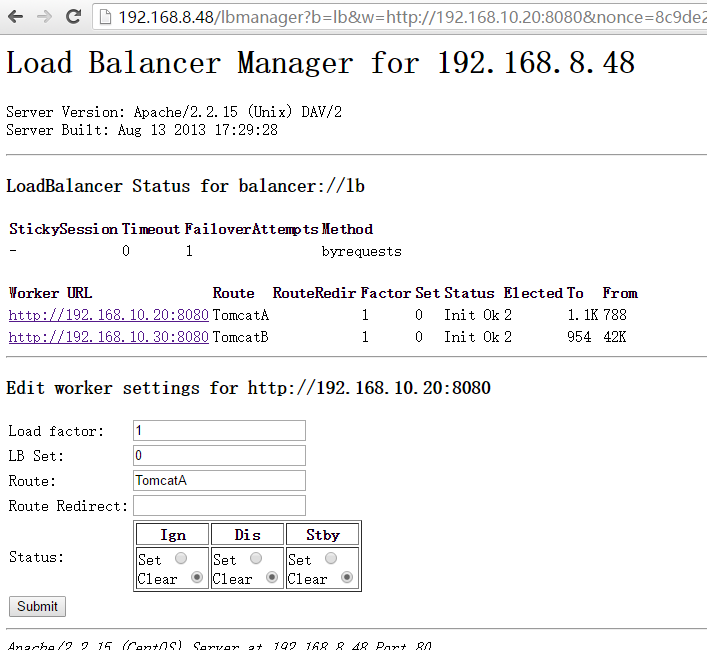

Visit http://192.168.8.48/lbmanager to see the load balancer's status page.

Cluster configuration:

Load balancer:

192.168.10.10:

# vim mod_proxy.conf

ProxyVia on

ProxyRequests off

ProxyPreserveHost on

<Proxy balancer://lb>

BalancerMember http://192.168.10.20:8080 loadfactor=1 route=TomcatA

BalancerMember http://192.168.10.30:8080 loadfactor=1 route=TomcatB

</Proxy>

<Location /lbmanager>

SetHandler balancer-manager

</Location>

ProxyPass /lbmanager !

ProxyPass / balancer://lb/

ProxyPassReverse / balancer://lb/

<Location />

Order Allow,Deny

Allow from all

</Location>

192.168.10.20:

<Engine name="Catalina" defaultHost="localhost" jvmRoute="TomcatA">

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"

channelSendOptions="8">

<Manager className="org.apache.catalina.ha.session.DeltaManager"

expireSessionsOnShutdown="false"

notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService"

address="228.1.0.4"

port="45564"

frequency="500"

dropTime="3000"/>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"

address="192.168.10.20"

port="4000"

autoBind="100"

selectorTimeout="5000"

maxThreads="6"/>

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve"

filter=""/>

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer"

tempDir="/tmp/war-temp/"

deployDir="/tmp/war-deploy/"

watchDir="/tmp/war-listen/"

watchEnabled="false"/>

<ClusterListener className="org.apache.catalina.ha.session.JvmRouteSessionIDBinderListener"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>

192.168.10.30:

<Engine name="Catalina" defaultHost="localhost" jvmRoute="TomcatB">

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"

channelSendOptions="8">

<Manager className="org.apache.catalina.ha.session.DeltaManager"

expireSessionsOnShutdown="false"

notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService"

address="228.1.0.4"

port="45564"

frequency="500"

dropTime="3000"/>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"

address="192.168.10.30"

port="4000"

autoBind="100"

selectorTimeout="5000"

maxThreads="6"/>

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve"

filter=""/>

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer"

tempDir="/tmp/war-temp/"

deployDir="/tmp/war-deploy/"

watchDir="/tmp/war-listen/"

watchEnabled="false"/>

<ClusterListener className="org.apache.catalina.ha.session.JvmRouteSessionIDBinderListener"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>
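In the McastService settings above, each node multicasts a heartbeat every `frequency` milliseconds (500). A peer that has not been heard from for `dropTime` milliseconds (3000, roughly six missed heartbeats) is removed from the membership. The following is a simplified model of that rule, not Tomcat's implementation. The exact boundary comparison (`<=`) and the member naming are assumptions.

```python
def live_members(last_heartbeat_ms: dict, now_ms: int, drop_time_ms: int = 3000) -> list:
    """Return members (sorted) whose last heartbeat is no older than drop_time_ms."""
    return sorted(name for name, seen in last_heartbeat_ms.items()
                  if now_ms - seen <= drop_time_ms)
```

For example, if 192.168.10.30 stops sending heartbeats, it disappears from the live set about three seconds later. Sessions are then replicated only among the remaining nodes.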

Then add a route for the multicast address on each cluster node:

route add -net 228.1.0.4 netmask 255.255.255.255 dev eth0
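This route sends traffic for the cluster's multicast group 228.1.0.4 out through eth0, the interface on the 192.168.10.0/24 network, so membership heartbeats reach the other node. McastService needs a genuine multicast address (inside 224.0.0.0/4). The hypothetical helper below checks that before building the same route command.

```python
import ipaddress


def mcast_route_cmd(address: str, dev: str = "eth0") -> str:
    """Build the host route for a multicast group after validating the address."""
    ip = ipaddress.ip_address(address)
    if not ip.is_multicast:
        raise ValueError(f"{address} is not a multicast address (224.0.0.0/4)")
    return f"route add -net {ip} netmask 255.255.255.255 dev {dev}"
```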

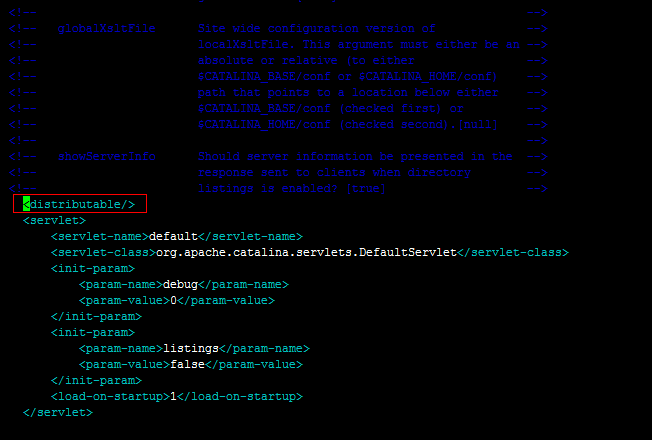

Finally, on each node add the following to the web application's WEB-INF/web.xml (inside the <web-app> element):

<distributable/>

You can see that the session ID does not change.