tensorflow專案學習(1)——訓練自己的資料集並進行物體檢測(object detection)

Tensorflow Object Detection

前言

本文主要介紹如何利用官方庫tensorflow/models/research/objection

並通過faster rcnn resnet 101(以及其他)深度學習框架

訓練自己的資料集,並對訓練結果進行檢測和評估

準備工作

1. 準備自己的資料集

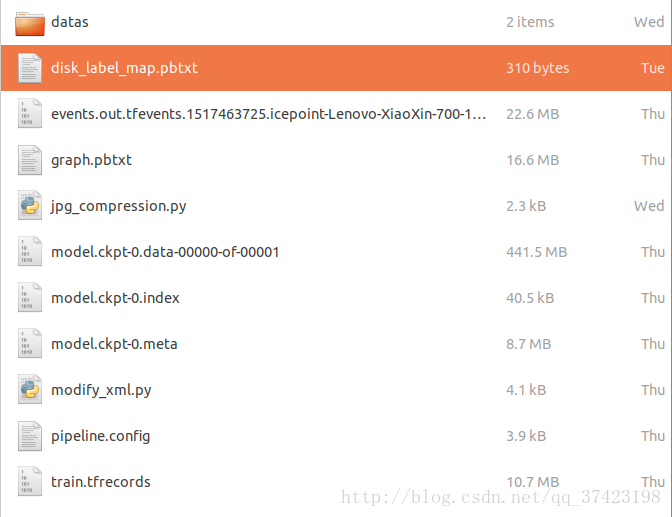

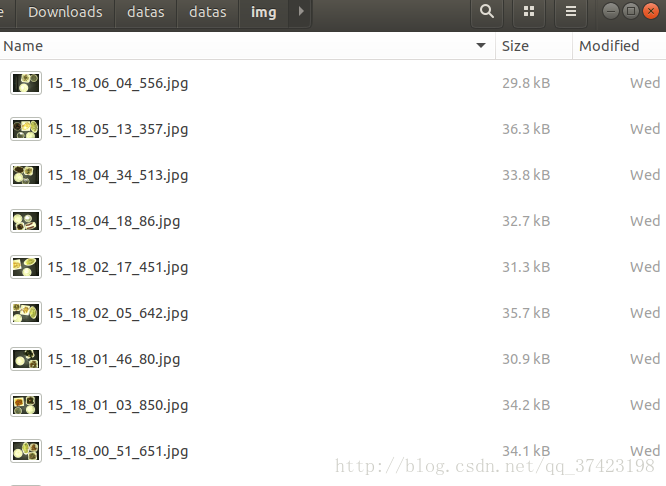

資料集檔案目錄如下

datas/

datas/

img/

xml/

disk_label_map.pbtxtimg/目錄下為資料集圖片

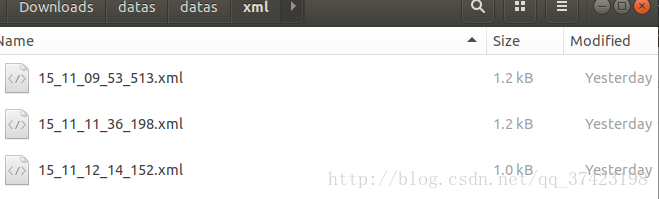

xml/目錄下為圖片對應的資訊

15_11_09_53_513.xml

<?xml version="1.0" encoding="utf-8"?>

<annotation>

<folder>datas</folder>

<filename>jpg</filename>

<source>

<database>Unknown</database>

</source>

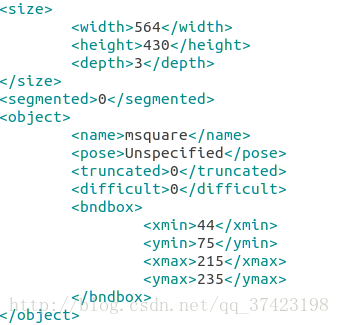

<size>

<width>564</width>

<height (其中object為檢測到的物體,name代表物體類別與disk_label_map.pbtxt中指定的一致,bndbox檢測到的區域)

disk_label_map.pbtxt

item {

id: 1

name: 'rice'

}

item {

id: 2

name: 'soup'

}

item {

id: 3

name: 'rect'

}

item {

id: 4

name: 'lcir'

}

item {

id: 5

name: 'ssquare'

}

item {

id: 6

name: 'msquare'

}

item {

id: 7

name: 'lsquare'

}

item {

id: 8

name: 'bsquare'

}

item {

id: 9

name: 'ellipse'

}2.安裝tensorflow-gpu

$ sudo apt-get install python-virtualenv

$ virtualenv --system-site-packages tensorflow (在~目錄下建立獨立執行環境)

$ source ~/tensorflow/bin/activate (啟用tensorflow執行環境,以後每次執行該環境下的專案,都要啟用)

$ pip install --upgrade tensorflow-gpu通過import tensorflow驗證安裝

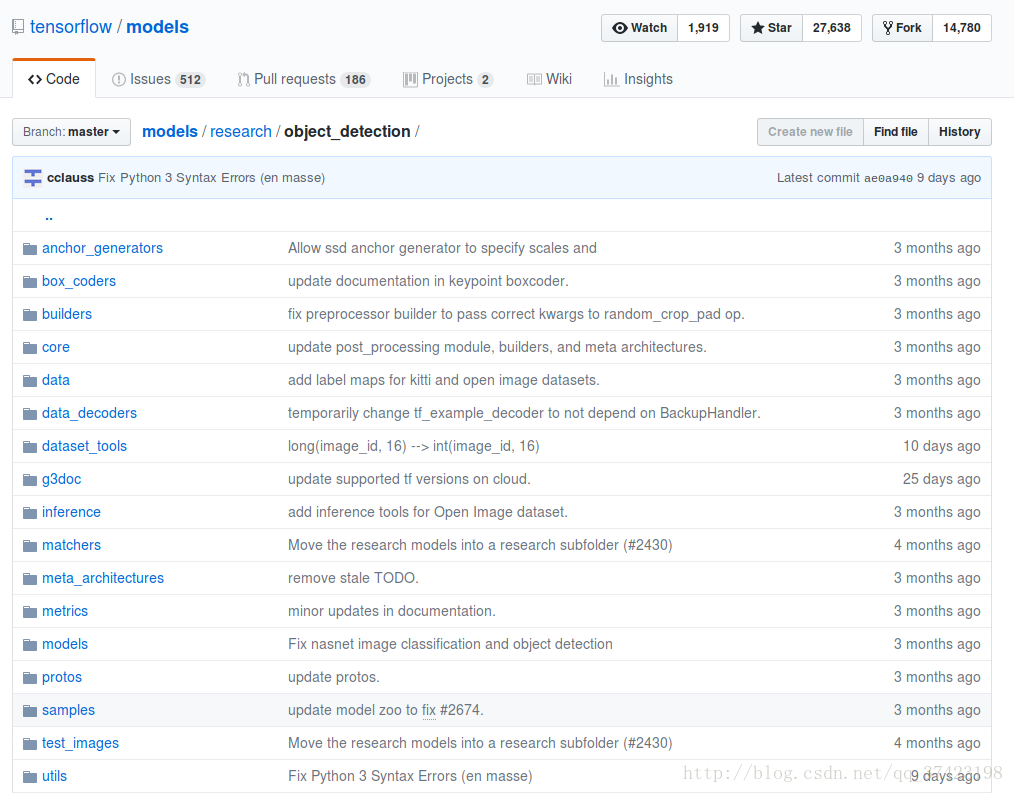

3.下載tensorflow/models倉庫

$ git clone https://github.com/tensorflow/models.git下載速度較慢,建議翻牆

之後把下載好的檔案解壓到~/tensorflow/目錄下

4.安裝object_detection專案

安裝依賴庫

$ sudo apt-get install protobuf-compiler

$ sudo pip install pillow

$ sudo pip install lxml

$ sudo pip install jupyter

$ sudo pip install matplotlib編譯protobuf

# From tensorflow/models/research/

protoc object_detection/protos/*.proto --python_out=.區域性執行時,把library加入PYTHONPATH

# From tensorflow/models/research/

export PYTHONPATH=$PYTHONPATH:`pwd`:`pwd`/slim測試安裝是否成功

python object_detection/builders/model_builder_test.py5.下載faster rcnn resnet101 coco model

訓練工作

1.處理訓練集

對於訓練過程中影象畫素越大可能訓練神經網路引數消耗的CPU,佔用的記憶體就會越大,

一般4核,8G訓練500*500左右畫素大小的幾百張圖片比較適合

壓縮圖片大小:(將datas資料集複製一份命名為datas1放置於與datas同目錄下)

jpg_compression.py

# /home/user/Downloads/datas/jpg_compression.py

from PIL import Image

import os

import sys

# Define images type to detect

valid_file_type = ['.jpg','.jpeg']

# Define compression ratio

SIZE_normal = 1.0

SIZE_small = 1.5

SIZE_more_small = 2.0

SIZE_much_more_small = 3.0

def make_directory(directory):

"""Make dir"""

os.makedirs(directory)

def directory_exists(directory):

"""If this dir exists"""

if os.path.exists(directory):

return True

else:

return False

def list_img_file(directory):

"""List all the files, choose and return jpg files"""

old_list = os.listdir(directory)

# print old_list

new_list = []

for filename in old_list:

f, e = os.path.splitext(filename)

if e in valid_file_type:

new_list.append(filename)

else:

pass

# print new_list

return new_list

def print_help():

print """

This program helps compress many image files

you can choose which scale you want to compress your img(jpg/etc)

1) normal compress(4M to 1M around)

2) small compress(4M to 500K around)

3) smaller compress(4M to 300K around)

4) much smaller compress(4M to ...)

"""

def compress(choose, src_dir, des_dir, file_list):

"""Compression Algorithm,img.thumbnail"""

if choose == '1':

scale = SIZE_normal

if choose == '2':

scale = SIZE_small

if choose == '3':

scale = SIZE_more_small

if choose == '4':

scale = SIZE_much_more_small

for infile in file_list:

filename = os.path.join(src_dir, infile)

img = Image.open(filename)

# size_of_file = os.path.getsize(infile)

w, h = img.size

img.thumbnail((int(w/scale), int(h/scale)))

img.save(des_dir + '/' + infile)

if __name__ == "__main__":

src_dir, des_dir = sys.argv[1], sys.argv[2]

if directory_exists(src_dir):

if not directory_exists(des_dir):

make_directory(des_dir)

# business logic

file_list = list_img_file(src_dir)

# print file_list

if file_list:

print_help()

choose = raw_input("enter your choice:")

compress(choose, src_dir, des_dir, file_list)

else:

pass

else:

print "source directory not exist!"執行命令

python jpg_compression.py \

> --src_dir=/home/user/Downloads/datas1/datas/img/

> --des_dir=/home/user/Downloads/datas/datas/img/根據壓縮圖片比例改變xml檔案內容

因為xml檔案有記錄對應影象畫素大小,以及檢測物體區域位置,所以要更改這些值

modify_xml.py(縮小的是三倍)

from PIL import Image

from xml.dom import minidom

import os

import sys

if __name__ == "__main__":

src_dir, des_dir = sys.argv[1], sys.argv[2]

file_list = os.listdir(src_dir)

for file_name in file_list:

xml_name = os.path.join(src_dir, file_name)

with open(xml_name, 'r') as fh:

dom = minidom.parse(fh)

root = dom.documentElement

# print root.nodeName

sizeNode = root.getElementsByTagName('size')[0]

# print size.nodeName

widthNode = sizeNode.getElementsByTagName('width')[0]

value = widthNode.childNodes[0].nodeValue.encode('gbk')

value_int = int(value)/3

value = str(value_int)

value = value.decode('utf-8')

widthNode.childNodes[0].nodeValue = value

#print widthNode.childNodes[0].nodeValue

heightNode = sizeNode.getElementsByTagName('height')[0]

value = heightNode.childNodes[0].nodeValue.encode('gbk')

value_int = int(value)/3

value = str(value_int)

value = value.decode('utf-8')

heightNode.childNodes[0].nodeValue = value

objectNodes = root.getElementsByTagName('object')

for idx,subNode in enumerate(objectNodes):

bndboxNode = subNode.getElementsByTagName('bndbox')[0]

#print bndboxNode

minxNode = bndboxNode.getElementsByTagName('xmin')[0]

val = minxNode.childNodes[0].nodeValue.encode('gbk')

val_int = int(val)/3

val = str(val_int)

val = val.decode('utf-8')

minxNode.childNodes[0].nodeValue = val

minyNode = bndboxNode.getElementsByTagName('ymin')[0]

val = minyNode.childNodes[0].nodeValue.encode('gbk')

val_int = int(val)/3

val = str(val_int)

val = val.decode('utf-8')

minyNode.childNodes[0].nodeValue = val

maxxNode = bndboxNode.getElementsByTagName('xmax')[0]

val = maxxNode.childNodes[0].nodeValue.encode('gbk')

val_int = int(val)/3

val = str(val_int)

val = val.decode('utf-8')

maxxNode.childNodes[0].nodeValue = val

maxyNode = bndboxNode.getElementsByTagName('ymax')[0]

val = maxyNode.childNodes[0].nodeValue.encode('gbk')

val_int = int(val)/3

val = str(val_int)

val = val.decode('utf-8')

maxyNode.childNodes[0].nodeValue = val

# print maxxNode.childNodes[0].nodeValue

bndboxNode.replaceChild(bndboxNode.getElementsByTagName('xmin')[0], minxNode)

bndboxNode.replaceChild(bndboxNode.getElementsByTagName('ymin')[0], minyNode)

bndboxNode.replaceChild(bndboxNode.getElementsByTagName('xmax')[0], maxxNode)

bndboxNode.replaceChild(bndboxNode.getElementsByTagName('ymax')[0], maxyNode)

objectNodes[idx].replaceChild(objectNodes[idx].getElementsByTagName('bndbox')[0], bndboxNode)

dom.documentElement.replaceChild(dom.documentElement.getElementsByTagName('object')[idx], objectNodes[idx])

sizeNode.replaceChild(sizeNode.getElementsByTagName('width')[0], widthNode)

sizeNode.replaceChild(sizeNode.getElementsByTagName('height')[0], heightNode)

dom.documentElement.replaceChild(dom.documentElement.getElementsByTagName('size')[0], sizeNode)

des_path = os.path.join(des_dir, file_name)

# print des_path

f = open(des_path, 'w')

dom.writexml(f,encoding = 'utf-8' )

f.close()

# print dom.documentElement.getElementsByTagName('size')[0].getElementsByTagName('height')[0].childNodes[0].nodeValue執行檔案

python modify_xml.py \

> --src_dir=/home/user/Downloads/datas1/datas/xml/

> --des_dir=/home/user/Downloads/datas/datas/xml/2.修改介面檔案

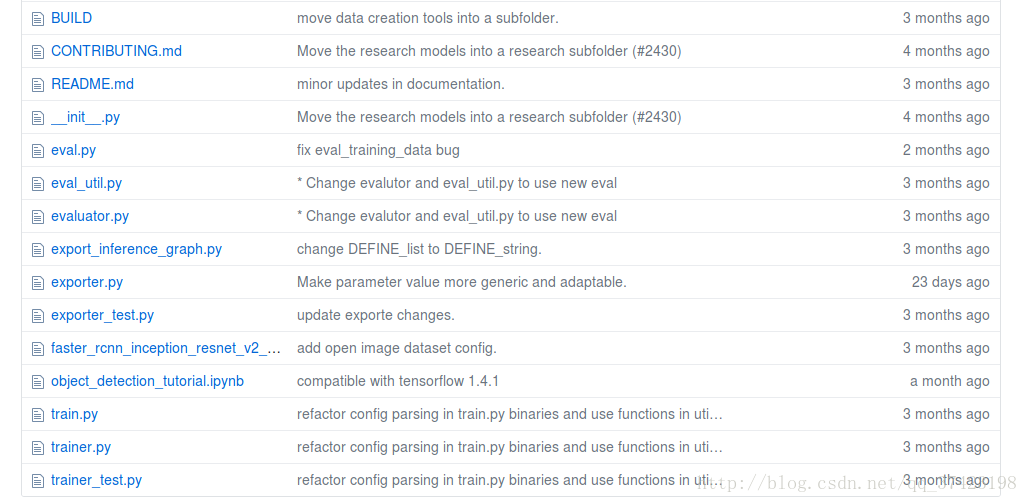

首先先熟悉一下這個object_detection專案需要修改的檔案的作用

eval.py可執行檔案用於測試評估訓練資料

train.py可執行檔案用於訓練給定的record檔案中的資料

export_inference_graph.py用於把訓練出的ckpt檔案轉換成pb檔案可供測試

samples/config/從中選出訓練所用的神經網路框架的配置檔案

dataset_tools/create…tf_record.py可執行檔案用於把資料集導成record檔案待訓練

object_detection_tutorial.ipynb在jupyter notebook裡執行用於測試,檢視圖片檢驗效果

create_disk_tf_record.py

轉化資料為train.tfrecords檔案

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import hashlib

import io

import logging

import os

from lxml import etree

import PIL.Image

import tensorflow as tf

from object_detection.utils import dataset_util

from object_detection.utils import label_map_util

flags = tf.app.flags

flags.DEFINE_string('data_dir', '', 'Root directory to dataset')

flags.DEFINE_string('images_dir', '', 'Path to images directory')

flags.DEFINE_string('annotations_dir', '', 'Path to annotations directory')

flags.DEFINE_string('output_path', '', 'Path to output TFRecord')

flags.DEFINE_string('label_map_path', '', 'Path to label map proto')

flags.DEFINE_boolean('ignore_difficult_instances', False, 'Whether to ignore difficult instances')

FLAGS = flags.FLAGS

def dict_to_tf_example(data,

dataset_directory,

image_directory,

label_map_dict,

ignore_difficult_instances=False):

"""Convert XML derived dict to tf.Example proto.

Notice that this function normalizes the bounding box coordinates provided

by the raw data.

Args:

data: dict holding PASCAL XML fields for a single image (obtained by

running dataset_util.recursive_parse_xml_to_dict)

label_map_dict: A map from string label names to integers ids.

ignore_difficult_instances: Whether to skip difficult instances in the

dataset (default: False).

Returns:

example: The converted tf.Example.

Raises:

ValueError: if the image pointed to by data['filename'] is not a valid JPEG

"""

img_path = os.path.join(dataset_directory, image_directory, data['filename'])

with tf.gfile.GFile(img_path, 'rb') as fid:

encoded_jpg = fid.read()

encoded_jpg_io = io.BytesIO(encoded_jpg)

image = PIL.Image.open(encoded_jpg_io)

if image.format != 'JPEG':

raise ValueError('Image format not JPEG')

key = hashlib.sha256(encoded_jpg).hexdigest()

width = int(data['size']['width'])

height = int(data['size']['height'])

xmin = []

ymin = []

xmax = []

ymax = []

classes = []

classes_text = []

truncated = []

poses = []

difficult_obj = []

for obj in data['object']:

difficult = bool(int(obj['difficult']))

if ignore_difficult_instances and difficult:

continue

difficult_obj.append(int(difficult))

xmin.append(float(obj['bndbox']['xmin']) / width)

ymin.append(float(obj['bndbox']['ymin']) / height)

xmax.append(float(obj['bndbox']['xmax']) / width)

ymax.append(float(obj['bndbox']['ymax']) / height)

classes_text.append(obj['name'].encode('utf8'))

classes.append(label_map_dict[obj['name']])

truncated.append(int(obj['truncated']))

poses.append(obj['pose'].encode('utf8'))

example = tf.train.Example(features=tf.train.Features(feature={

'image/height': dataset_util.int64_feature(height),

'image/width': dataset_util.int64_feature(width),

'image/filename': dataset_util.bytes_feature(

data['filename'].encode('utf8')),

'image/source_id': dataset_util.bytes_feature(

data['filename'].encode('utf8')),

'image/key/sha256': dataset_util.bytes_feature(key.encode('utf8')),

'image/encoded': dataset_util.bytes_feature(encoded_jpg),

'image/format': dataset_util.bytes_feature('jpeg'.encode('utf8')),

'image/object/bbox/xmin': dataset_util.float_list_feature(xmin),

'image/object/bbox/xmax': dataset_util.float_list_feature(xmax),

'image/object/bbox/ymin': dataset_util.float_list_feature(ymin),

'image/object/bbox/ymax': dataset_util.float_list_feature(ymax),

'image/object/class/text': dataset_util.bytes_list_feature(classes_text),

'image/object/class/label': dataset_util.int64_list_feature(classes),

'image/object/difficult': dataset_util.int64_list_feature(difficult_obj),

'image/object/truncated': dataset_util.int64_list_feature(truncated),

'image/object/view': dataset_util.bytes_list_feature(poses),

}))

return example

def main(_):

data_dir = FLAGS.data_dir

writer = tf.python_io.TFRecordWriter(FLAGS.output_path)

label_map_dict = label_map_util.get_label_map_dict(FLAGS.label_map_path)

logging.info('Reading from dataset.')

images_dir = os.path.join(data_dir, FLAGS.images_dir)

images_path = os.listdir(images_dir)

annotations_dir = os.path.join(data_dir, FLAGS.annotations_dir)

examples_list = [os.path.splitext(x)[0] for x in images_path]

for idx, example in enumerate(examples_list):

if idx % 10 == 0:

logging.info('On image %d of %d', idx, len(examples_list))

path = os.path.join(annotations_dir, example + '.xml')

with tf.gfile.GFile(path, 'r') as fid:

xml_str = fid.read()

xml = etree.fromstring(xml_str)

data = dataset_util.recursive_parse_xml_to_dict(xml)['annotation']

tf_example = dict_to_tf_example(data, FLAGS.data_dir, FLAGS.images_dir, label_map_dict,

FLAGS.ignore_difficult_instances)

writer.write(tf_example.SerializeToString())

writer.close()

if __name__ == '__main__':

tf.app.run()

通過tf.app來傳入外部引數

通過tf.train.example來把資料導成tf_example,然後序列化寫入tfrecords檔案

執行主要是5個引數

# From tensorflow/models/research/

python object_detection/dataset_tools/create_disk_tf_record.py \

> --data_dir=/home/icepoint/Downloads/datas/datas/ \

> --images_dir=img/ \

> --annotations_dir=xml/ \

> --output_path=/home/icepoint/Downloads/datas/train.tfrecords \

> --label_map_path=/home/icepoint/Downloads/datas/disk_label_map.pbtxt train.py

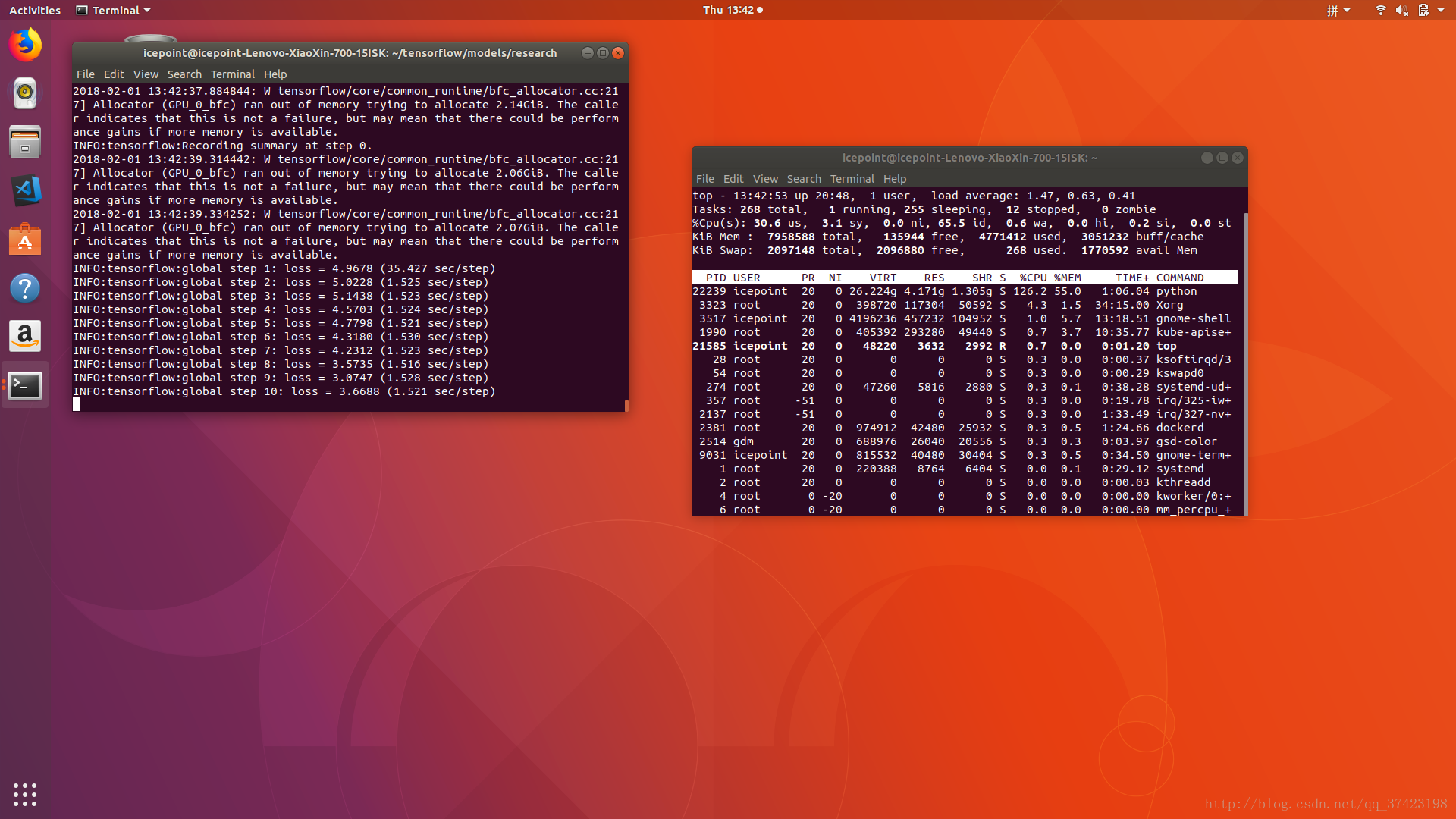

執行檔案(前面加以下指定的裝置,以防報錯)

$ CUDA_VISIBLE_DEVICE=0 python object_detection/train.py \

--logtostderr \

--train_dir=/home/icepoint/Downloads/datas/ \

--pipeline_config_path=/home/icepoint/tensorflow/models/research/object_detection/samples/configs/faster_rcnn_resnet101_pets.config 指定訓練目錄,之後會把一系列訓練好的檔案存在那個目錄上

指定配置檔案

配置檔案

faster_rcnn_resnet101_pets.config

# Faster R-CNN with Resnet-101 (v1) configured for the Oxford-IIIT Pet Dataset.

# Users should configure the fine_tune_checkpoint field in the train config as

# well as the label_map_path and input_path fields in the train_input_reader and

# eval_input_reader. Search for "PATH_TO_BE_CONFIGURED" to find the fields that

# should be configured.

model {

faster_rcnn {

num_classes: 37

image_resizer {

keep_aspect_ratio_resizer {

min_dimension: 600

max_dimension: 1024

}

}

feature_extractor {

type: 'faster_rcnn_resnet101'

first_stage_features_stride: 16

}

first_stage_anchor_generator {

grid_anchor_generator {

scales: [0.25, 0.5, 1.0, 2.0]

aspect_ratios: [0.5, 1.0, 2.0]

height_stride: 16

width_stride: 16

}

}

first_stage_box_predictor_conv_hyperparams {

op: CONV

regularizer {

l2_regularizer {

weight: 0.0

}

}

initializer {

truncated_normal_initializer {

stddev: 0.01

}

}

}

first_stage_nms_score_threshold: 0.0

first_stage_nms_iou_threshold: 0.7

first_stage_max_proposals: 300

first_stage_localization_loss_weight: 2.0

first_stage_objectness_loss_weight: 1.0

initial_crop_size: 14

maxpool_kernel_size: 2

maxpool_stride: 2

second_stage_box_predictor {

mask_rcnn_box_predictor {

use_dropout: false

dropout_keep_probability: 1.0

fc_hyperparams {

op: FC

regularizer {

l2_regularizer {

weight: 0.0

}

}

initializer {

variance_scaling_initializer {

factor: 1.0

uniform: true

mode: FAN_AVG

}

}

}

}

}

second_stage_post_processing {

batch_non_max_suppression {

score_threshold: 0.0

iou_threshold: 0.6

max_detections_per_class: 100

max_total_detections: 300

}

score_converter: SOFTMAX

}

second_stage_localization_loss_weight: 2.0

second_stage_classification_loss_weight: 1.0

}

}

train_config: {

batch_size: 1

optimizer {

momentum_optimizer: {

learning_rate: {

manual_step_learning_rate {

initial_learning_rate: 0.0003

schedule {

step: 0

learning_rate: .0003

}

schedule {

step: 900000

learning_rate: .00003

}

schedule {

step: 1200000

learning_rate: .000003

}

}

}

momentum_optimizer_value: 0.9

}

use_moving_average: false

}

gradient_clipping_by_norm: 10.0

fine_tune_checkpoint: "/home/icepoint/Downloads/faster_rcnn_resnet101_coco_2017_11_08/model.ckpt"

from_detection_checkpoint: true

# Note: The below line limits the training process to 200K steps, which we

# empirically found to be sufficient enough to train the pets dataset. This

# effectively bypasses the learning rate schedule (the learning rate will

# never decay). Remove the below line to train indefinitely.

num_steps: 200000

data_augmentation_options {

random_horizontal_flip {

}

}

}

train_input_reader: {

tf_record_input_reader {

input_path: "/home/icepoint/Downloads/datas/train.tfrecords"

}

label_map_path: "/home/icepoint/Downloads/datas/disk_label_map.pbtxt"

}

eval_config: {

num_examples: 2000

# Note: The below line limits the evaluation process to 10 evaluations.

# Remove the below line to evaluate indefinitely.

max_evals: 10

}

eval_input_reader: {

tf_record_input_reader {

input_path: "/home/icepoint/Downloads/datas/train.tfrecords"

}

label_map_path: "/home/icepoint/Downloads/datas/disk_label_map.pbtxt"

shuffle: false

num_readers: 1

}需要修改一下train_config: fine_tune_checkpoint為下載的coco資料集中model.ckpt檔案,num_steps迭代次數

需要修改train_input_reader:input_path表示輸入train.tfrecords的檔案路徑,label_map_path表示類別檔案路徑

需要修改eval_input_reader:input_path與label_map_path

訓練過程中可能比較耗時,或者耗費資源

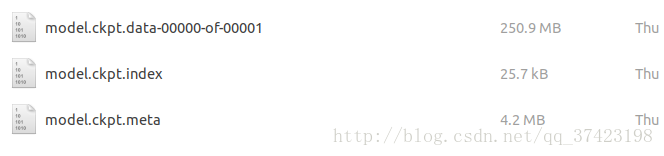

當自動儲存model.ckpt檔案時就可以終止訓練

訓練時訓練目錄下會有

export_inference_graph.py

轉換model.ckpt為pb檔案

首先需要把train_dir下的model.ckpt-xxx.*三個檔案+checkpoint檔案,複製到train_checkpoint_prefix目錄下

重新命名把model.ckpt-xxx的xxx去掉

修改checkpoint裡的路徑內容

/Downloads/datas/ckpt/

執行

python export_inference_graph \

--input_type image_tensor \

--pipeline_config_path /home/user/tensorflow/models/research/object_detection/samples/configs/faster_rcnn_resnet101_pets.config \

--trained_checkpoint_prefix /home/user/Downloads/datas/ckpt/model.ckpt \

--output_directory /home/user/Downloads/datas/ckpt/Note:The expected output would be in the directory

path/to/exported_model_directory (which is created if it does not exist)

with contents:

- graph.pbtxt

- model.ckpt.data-00000-of-00001

- model.ckpt.info

- model.ckpt.meta

- frozen_inference_graph.pb

+ saved_model (a directory)

注意執行時可能會報錯:

ValueError: Protocol message RewriterConfig has no "layout_optimizer" field.推測可能是tensorflow臨時commit的bug

解決:開啟object_detection/exporter.py,將layout_optimizer字樣修改為optimize_tensor_layout字樣(函式名)即可

匯出後會生成frozen_inference_graph.pb用於資料檢測

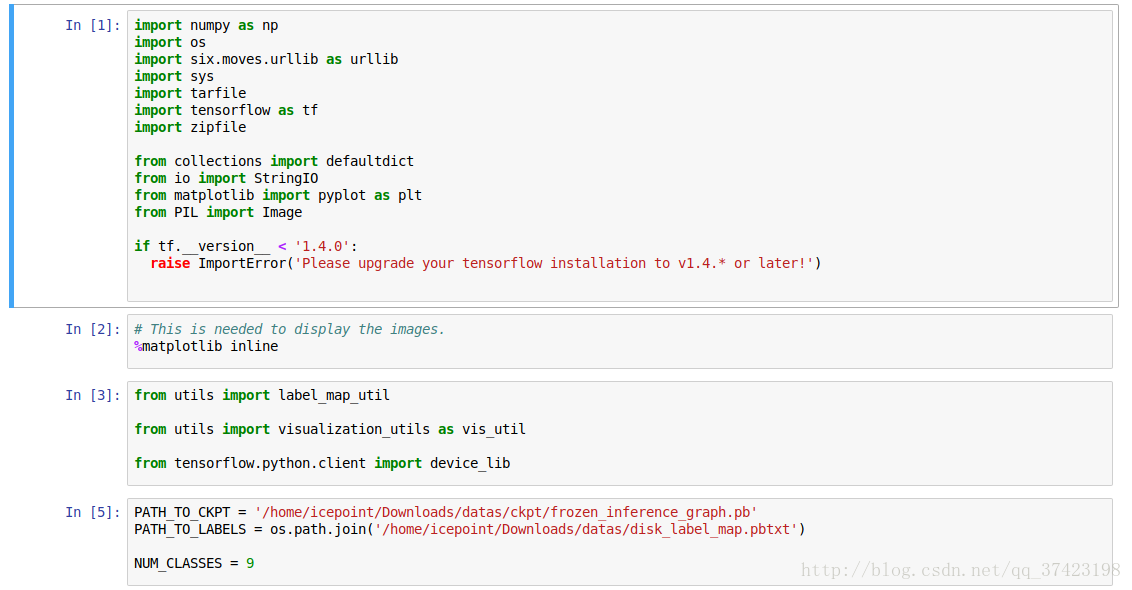

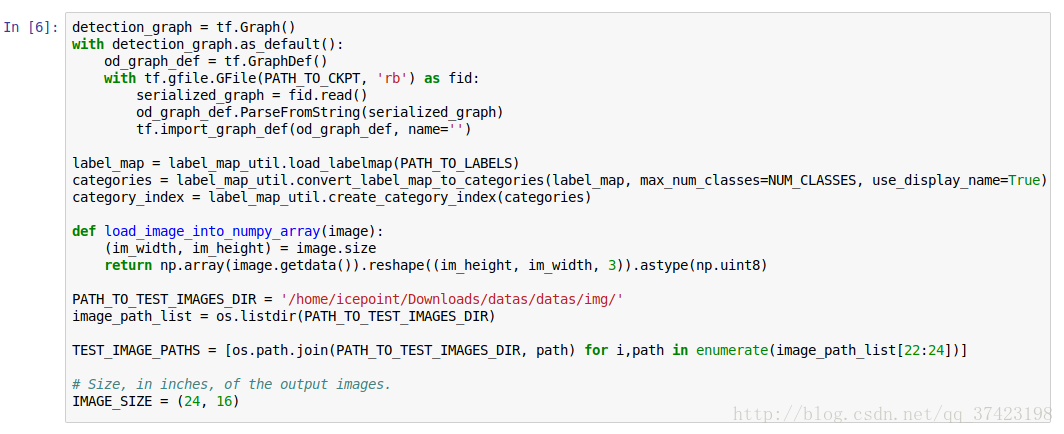

測試工作

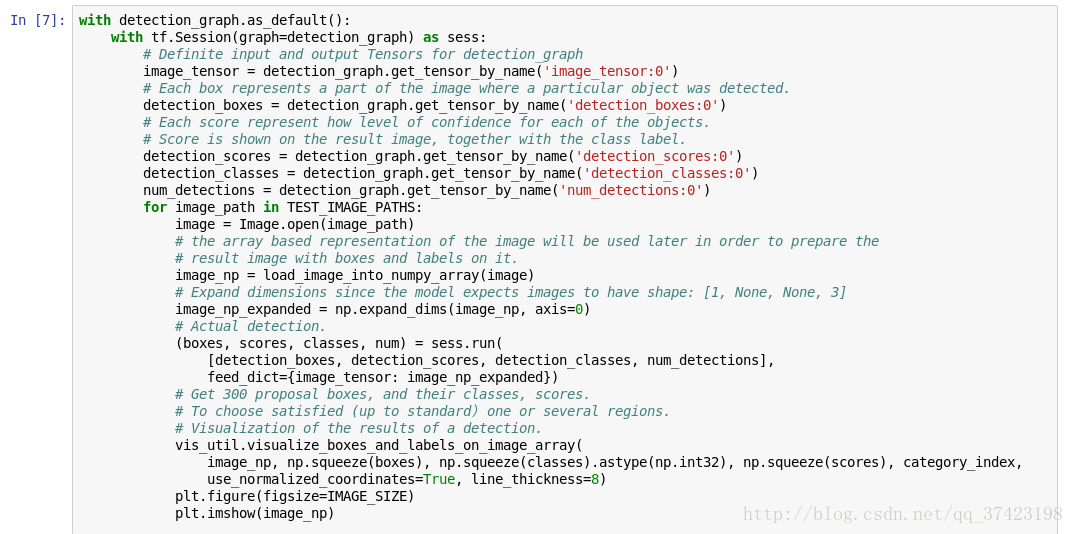

通過source, export PYTHONPATH開啟jupyter notebook

開啟object_detection_disk_dataset.ipynb

(具體參照object_detection/object_detection_tutorial.ipynb)

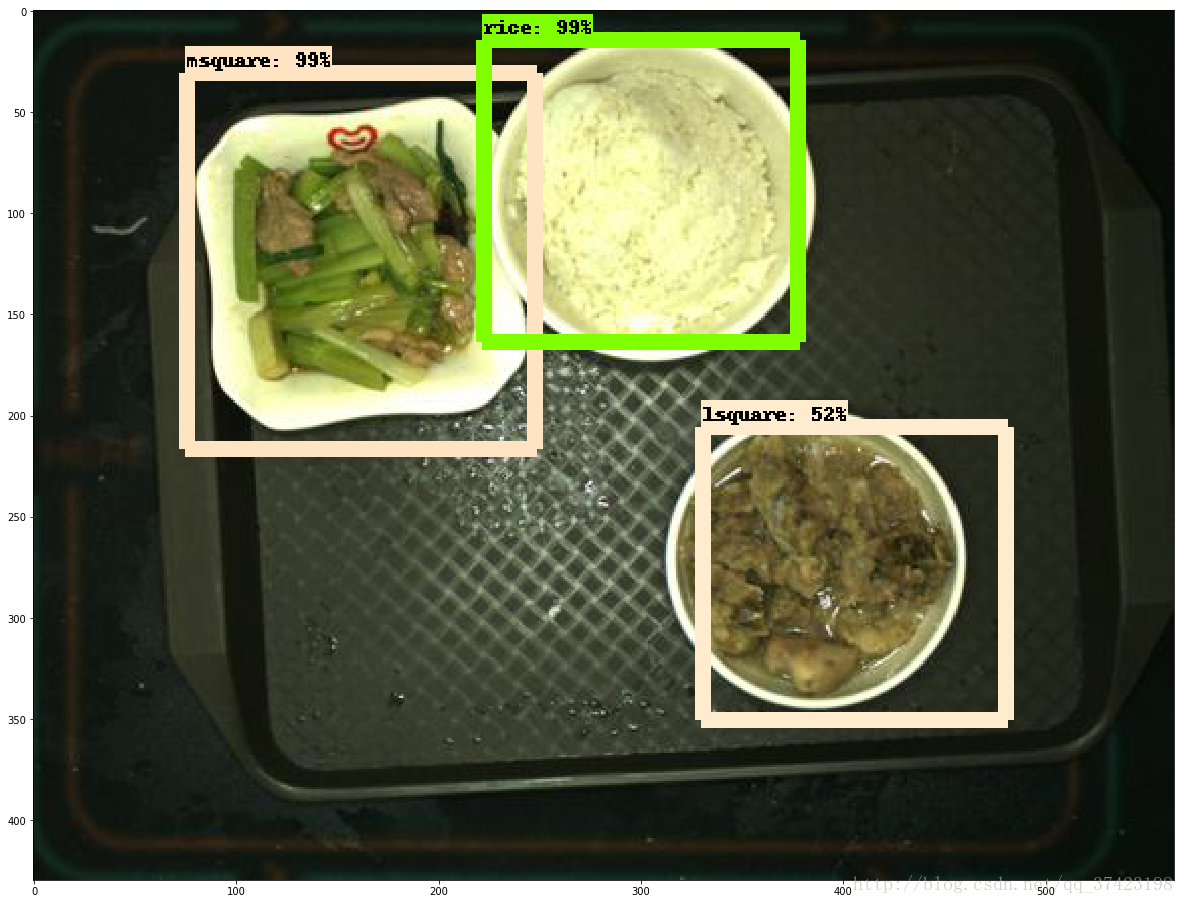

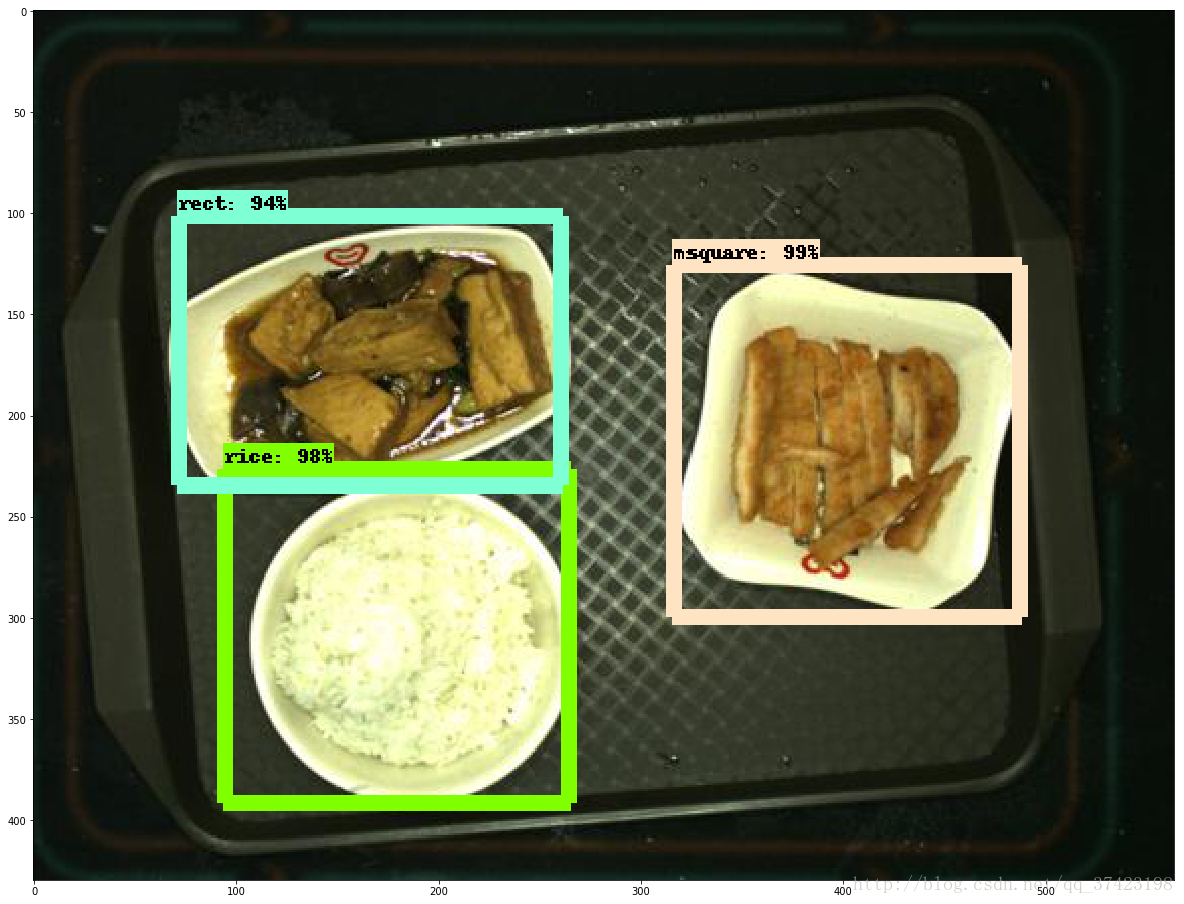

測試結果:

相關推薦

tensorflow專案學習(1)——訓練自己的資料集並進行物體檢測(object detection)

Tensorflow Object Detection 前言 本文主要介紹如何利用官方庫tensorflow/models/research/objection 並通過faster rcnn resnet 101(以及其他)深度學習框架

物體檢測Object Detection學習筆記(MXNet)(二)

錨框生成過多的問題 上一節學習到,我們是基於生成的錨框來預測物體類別和偏移量,而且我們對於一張原始圖片,對於每個畫素都會生成多個錨框。 問題:我們生成了大量的錨框,而在其中有大量的重複區域,造

tensorflow-Inception-v3模型訓練自己的資料程式碼示例

一、宣告 本程式碼非原創,源網址不詳,僅做學習參考。 二、程式碼 1 # -*- coding: utf-8 -*- 2 3 import glob # 返回一個包含有匹配檔案/目錄的陣列 4 import os.path 5 import rand

Fast RCNN 訓練自己資料集 (1編譯配置)

FastRCNN 訓練自己資料集 (1編譯配置) FastRCNN是Ross Girshick在RCNN的基礎上增加了Multi task training整個的訓練過程和測試過程比RCNN快了許多。別的一些細節不展開,過幾天會上傳Fast RCNN的論文筆記。FastRCNN mAP效能上略有上升。Fa

tensorflow實現FCN完成訓練自己標註的資料

一、先復現FCN 環境:Ubuntu18.04+tensorflow(我的) 1.下載程式碼: 論文地址:https://arxiv.org/pdf/1605.06211v1.pdf 論文視訊地址:http://techtalks.tv/talks

如何從頭到尾的用caffe-ssd訓練自己的資料集並進行目標識別

from __future__ import print_function import caffe from caffe.model_libs import * from google.protobuf import text_format import math import os i

tensorflow學習之訓練自己的CNN模型(簡單二分類)

本文借鑑已有cat-vs-dog模型,在此模型上進行修改。該模型可在以下網址下載,後續將對模型進行解析及進一步修改。https://download.csdn.net/download/twinkle_star1314/10414568。今天先對模型進行分析:一、模

Caffe-Windows訓練自己資料 + 遷移學習

一:目的 用配置好的Windows版本Caffe(no GPU),使用caffe自帶的ImageNet網路結構進行訓練和測試。訓練自己的資料; 用caffe團隊採用imagenet圖片進行訓練的引數結果,進行遷移學習; 二:訓練與測試 1. 資料集下載與處理 (

Tensorflow製作並用CNN訓練自己的資料集

本人初學Tensorflow,在學習完用MNIST資料集訓練簡單的MLP、自編碼器、CNN後,想著自己能不能做一個數據集,並用卷積神經網路訓練,所以在網上查了一下資料,發現可以使用標準的TFrecords格式。但是,遇到了問題,製作好的TFrecords的資料集,執行的時候報

使用deeplabv3+訓練自己資料集(遷移學習)

# 概述 在前邊一篇文章,我們講了如何復現論文程式碼,使用pascal voc 2012資料集進行訓練和驗證,具體內容可以參考[《deeplab v3+在pascal_voc 2012資料集上進行訓練》](https://www.vcjmhg.top/train-deeplabv3-puls-with-pa

知識表示學習1多元關係資料翻譯嵌入2知識表示學習關係路徑建模(公號回覆“知識表示學習”下載彩標PDF典藏版資料)

知識表示學習1多元關係資料翻譯嵌入2知識表示學習關係路徑建模(公號回覆“知識表示學習”下載彩標PDF典藏版資料) 原創: 秦隴紀 資料簡化DataSimp 今天 資料簡化DataSimp導讀:醫學AI讀書會兩篇論文:[1]Bordes A, Usunier N, Garcia-Dur

使用pytorch版faster-rcnn訓練自己資料集

使用pytorch版faster-rcnn訓練自己資料集 引言 faster-rcnn pytorch程式碼下載 訓練自己資料集 接下來工作 參考文獻 引言 最近在復現目標檢測程式碼(師兄強烈推薦F

tensorflow RNN 學習1,入門

終於,我可以開始寫我的學習記錄了。度過了懵比時期,從啥都不知道,變成知道了一些些,很開心。 現在記錄一下,自己寫的一個簡單的RNN例子,自我總價,加深理解。 因為自己學的不深,為了避免誤導,這裡不做下定義,僅描述。詳細可以參考其他文章。 本文的目的:能夠使用Tensor

《錯誤手記-01》 facenet使用預訓練模型fine-tune重新訓練自己資料集報錯

環境資訊:windows10+python3.5+tensorflow1.6.0 問題描述: 在自己的訓練集上跑train_softmax.py. 引數: --logs_base_dir F:/work/runspace/log/ --models_base_

Kaldi中thchs30訓練自己資料集的步驟

一、資料準備過程 網上下載的thchs-openslr資料集需要換成自己的資料集,包含兩個資料夾:data_thchs30和resource。下面講解如何搞定這一部分。 資料集在data_thchs30檔案中,包含四個部分(data、train、dev、test)。 data資料夾中包

TF之DCGAN:基於TF利用DCGAN測試自己的資料集並進行生成過程全記錄

訓練的資料集部分圖片 以從網上收集了許多日式動畫為例 訓練過程全記錄 開始訓練…… {'batch_size': <absl.flags._flag.Flag object at 0x000002C943CD16A0>, 'beta1':

機器學習1-概述及資料預處理

文章目錄 概述 機器學習 為什麼需要機器學習? 機器學習的型別 機器學習流程 資料預處理 均值移除(標準化) 範圍縮放 歸一化 二值化

ssm專案學習1-環境搭建

1.建立一個maven工程 開發工具eclipse:建立maven專案 填寫上面建立專案的資訊 如果建立maven專案時出錯:沒有web.xml檔案 暫時不要管-----下面過程中會解決 1. java程式碼放在src/main/java下

yolov3訓練自己資料集可參考文章

參考部落格原址: https://blog.csdn.net/u012966194/article/details/80004647 這篇文章將介紹編譯darknet框架開始,到整理資料集,到用yolo網路實現一個內部資料集中號碼簿的定

Yolov3訓練自己資料集+資料分析

訓練自己到資料集已經在上一篇文中說明過了,這一篇著重記錄一下資料分析過程 資料分析 1. mAP值計算 1)訓練完成後,執行darknet官方程式碼中到 detector valid 命令,生成對測試集到檢測結果,命令如下: ./darknet detector va