Training and Prediction with YOLOv3 in darknet

阿新 • Published: 2019-01-09

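I. Preparing the dataset

II. Modifying the configuration files

This article uses hand detection as the example configuration (there is only one label: hand).

1. Create or modify data/hand.names. This file records the labels, one per line (note: they must correspond to the labels used in the training data).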

2. Create or modify cfg/hand.data

classes = 1 # number of classes in your own dataset (not counting a background class)
train = /home/xxx/darknet/train.txt # path to the train file
valid = /home/xxx/darknet/test.txt # path to the test file
names = /home/xxx/darknet/data/hand.names

3. Modify cfg/yolov3.cfg

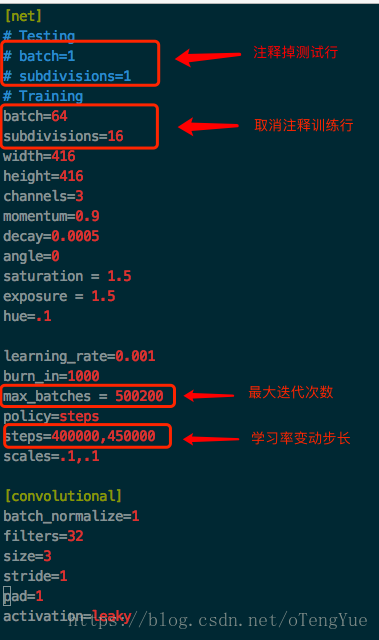

(1) Basic modifications

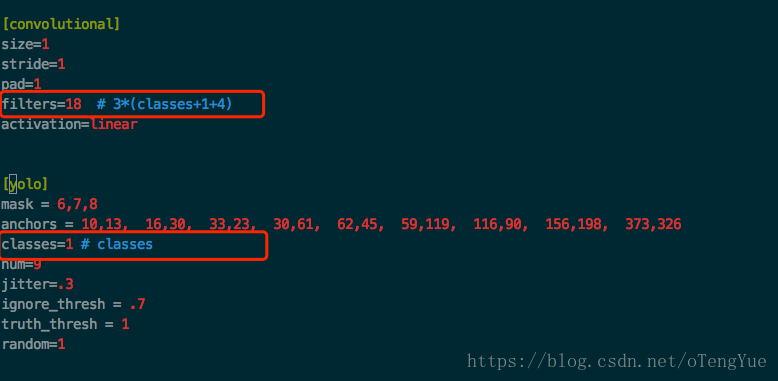

(2) Modify three places: search for the keyword "yolo" to locate each one, then edit according to the comments.
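For orientation, the three edits typically consist of setting `classes` in each `[yolo]` section and `filters` in the `[convolutional]` section immediately before it. The following is a minimal sketch of that arithmetic, assuming the standard YOLOv3 layout in which every `[yolo]` layer uses 3 anchor masks and predicts 4 box coordinates plus 1 objectness score per anchor. The function name `yolo_filters` is hypothetical and is not part of darknet.

```python
def yolo_filters(num_classes, masks_per_layer=3):
    """Filters for the convolutional layer that feeds a [yolo] layer.

    Assumes each anchor predicts 4 box coordinates, 1 objectness score,
    and one score per class.
    """
    return masks_per_layer * (num_classes + 5)


print(yolo_filters(1))   # single "hand" class
print(yolo_filters(80))  # 80 classes, as in COCO
```

For the single-class hand example this gives 18, so under these assumptions each of the three locations would use `classes=1` and `filters=18`.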

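III. Training

Download the pretrained model (darknet53.conv.74, used in the command below) and place it in the models folder.

In a terminal, change to the darknet directory and enter the following commands:

# Train from scratch
./darknet detector train cfg/hand.data cfg/yolov3_hand.cfg models/darknet53.conv.74

# Resume training from a weights snapshot
./darknet detector train cfg/hand.data

IV. Visualizing the training log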

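The loss and IoU curves are generated from the log file. The log file itself has to be produced during training by redirecting the training output to a file.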
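As a rough illustration of how such curves could be extracted, the sketch below parses loss and IoU values out of log lines. The two line formats in the regular expressions are assumptions about what darknet prints during YOLOv3 training and may differ between versions; the function name `parse_log` and the sample lines are made up for this example.

```python
import re

# Assumed batch summary line, e.g.
#   "1: 1799.423584, 1799.423584 avg, 0.000000 rate, 3.5 seconds, 64 images"
BATCH_RE = re.compile(r"^\s*(\d+):\s*([\d.]+),\s*([\d.]+)\s+avg")
# Assumed per-layer line, e.g.
#   "Region 82 Avg IOU: 0.250000, Class: 0.500000, ..."
IOU_RE = re.compile(r"Avg IOU:\s*(-?[\d.]+|-?nan)")


def parse_log(lines):
    """Return ([(iteration, avg_loss), ...], [iou, ...]) from log lines."""
    losses = []
    ious = []
    for line in lines:
        m = BATCH_RE.match(line)
        if m:
            losses.append((int(m.group(1)), float(m.group(3))))
            continue
        m = IOU_RE.search(line)
        # Skip "nan" entries, which appear when a layer saw no objects.
        if m and "nan" not in m.group(1):
            ious.append(float(m.group(1)))
    return losses, ious


# Made-up sample lines in the assumed format.
sample = [
    "Region 82 Avg IOU: 0.250000, Class: 0.500000, Obj: 0.400000, count: 5",
    "Region 94 Avg IOU: -nan, Class: -nan, Obj: -nan, count: 0",
    "1: 1799.423584, 1799.423584 avg, 0.000000 rate, 3.500000 seconds, 64 images",
]
losses, ious = parse_log(sample)
print(losses)
print(ious)
```

The two returned lists can then be handed to any plotting library to draw the curves.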

V. Prediction

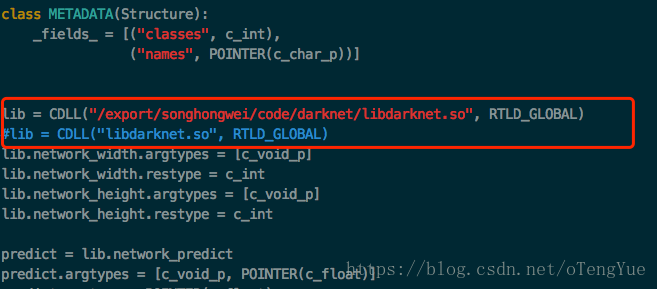

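As of 2018-08-02, python/darknet.py on the GitHub master branch does not yet fully support YOLOv3. If darknet was built from the master branch, it is recommended to replace that file with python/darknet.py from the yolov3 branch. The path to libdarknet.so in the script must also be changed to the path of the locally compiled library; leaving it unchanged may cause an error.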
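One way to avoid editing the path by hand on every machine is to read it from the environment. This is only a sketch: the environment variable name `DARKNET_LIB` and the helper `resolve_lib_path` are hypothetical and do not exist in darknet.

```python
import os


def resolve_lib_path(default="libdarknet.so", env_var="DARKNET_LIB"):
    """Return the library path from the environment if set, else the default."""
    path = os.environ.get(env_var, default)
    # Only an absolute path can be checked up front; a bare file name is
    # left to the dynamic loader's own search path.
    if os.path.isabs(path) and not os.path.isfile(path):
        raise FileNotFoundError("libdarknet.so not found at: " + path)
    return path


print(resolve_lib_path())
```

The returned value would then replace the hard-coded string in the `CDLL(..., RTLD_GLOBAL)` call of darknet.py.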

The current version of darknet.py is recorded here as a backup.

from ctypes import *
import math
import random

def sample(probs):
    s = sum(probs)
    probs = [a/s for a in probs]
    r = random.uniform(0, 1)
    for i in range(len(probs)):
        r = r - probs[i]
        if r <= 0:
            return i
    return len(probs)-1

def c_array(ctype, values):
    new_values = values.ctypes.data_as(POINTER(ctype))
    return new_values

def array_to_image(arr):
    import numpy as np
    # need to return old values to avoid python freeing memory
    arr = arr.transpose(2,0,1)
    c = arr.shape[0]
    h = arr.shape[1]
    w = arr.shape[2]
    arr = np.ascontiguousarray(arr.flat, dtype=np.float32) / 255.0
    data = arr.ctypes.data_as(POINTER(c_float))
    im = IMAGE(w,h,c,data)
    return im, arr

class BOX(Structure):
    _fields_ = [("x", c_float),
                ("y", c_float),
                ("w", c_float),
                ("h", c_float)]

class DETECTION(Structure):
    _fields_ = [("bbox", BOX),
                ("classes", c_int),
                ("prob", POINTER(c_float)),
                ("mask", POINTER(c_float)),
                ("objectness", c_float),
                ("sort_class", c_int)]

class IMAGE(Structure):
    _fields_ = [("w", c_int),
                ("h", c_int),
                ("c", c_int),
                ("data", POINTER(c_float))]

class METADATA(Structure):
    _fields_ = [("classes", c_int),
                ("names", POINTER(c_char_p))]

#lib = CDLL("/home/pjreddie/documents/darknet/libdarknet.so", RTLD_GLOBAL)
lib = CDLL("libdarknet.so", RTLD_GLOBAL)
lib.network_width.argtypes = [c_void_p]
lib.network_width.restype = c_int
lib.network_height.argtypes = [c_void_p]
lib.network_height.restype = c_int

predict = lib.network_predict
predict.argtypes = [c_void_p, POINTER(c_float)]
predict.restype = POINTER(c_float)

set_gpu = lib.cuda_set_device
set_gpu.argtypes = [c_int]

make_image = lib.make_image
make_image.argtypes = [c_int, c_int, c_int]
make_image.restype = IMAGE

get_network_boxes = lib.get_network_boxes
get_network_boxes.argtypes = [c_void_p, c_int, c_int, c_float, c_float, POINTER(c_int), c_int, POINTER(c_int)]
get_network_boxes.restype = POINTER(DETECTION)

make_network_boxes = lib.make_network_boxes
make_network_boxes.argtypes = [c_void_p]
make_network_boxes.restype = POINTER(DETECTION)

free_detections = lib.free_detections
free_detections.argtypes = [POINTER(DETECTION), c_int]

free_ptrs = lib.free_ptrs
free_ptrs.argtypes = [POINTER(c_void_p), c_int]

network_predict = lib.network_predict
network_predict.argtypes = [c_void_p, POINTER(c_float)]

reset_rnn = lib.reset_rnn
reset_rnn.argtypes = [c_void_p]

load_net = lib.load_network
load_net.argtypes = [c_char_p, c_char_p, c_int]
load_net.restype = c_void_p

do_nms_obj = lib.do_nms_obj
do_nms_obj.argtypes = [POINTER(DETECTION), c_int, c_int, c_float]

do_nms_sort = lib.do_nms_sort
do_nms_sort.argtypes = [POINTER(DETECTION), c_int, c_int, c_float]

free_image = lib.free_image
free_image.argtypes = [IMAGE]

letterbox_image = lib.letterbox_image
letterbox_image.argtypes = [IMAGE, c_int, c_int]
letterbox_image.restype = IMAGE

load_meta = lib.get_metadata
lib.get_metadata.argtypes = [c_char_p]
lib.get_metadata.restype = METADATA

load_image = lib.load_image_color
load_image.argtypes = [c_char_p, c_int, c_int]
load_image.restype = IMAGE

rgbgr_image = lib.rgbgr_image
rgbgr_image.argtypes = [IMAGE]

predict_image = lib.network_predict_image
predict_image.argtypes = [c_void_p, IMAGE]
predict_image.restype = POINTER(c_float)

def classify(net, meta, im):
    out = predict_image(net, im)
    res = []
    for i in range(meta.classes):
        res.append((meta.names[i], out[i]))
    res = sorted(res, key=lambda x: -x[1])
    return res

def detect(net, meta, image, thresh=.5, hier_thresh=.5, nms=.45):
    im = load_image(image, 0, 0)
    num = c_int(0)
    pnum = pointer(num)
    predict_image(net, im)
    dets = get_network_boxes(net, im.w, im.h, thresh, hier_thresh, None, 0, pnum)
    num = pnum[0]
    if nms:
        do_nms_obj(dets, num, meta.classes, nms)
    res = []
    for j in range(num):
        for i in range(meta.classes):
            if dets[j].prob[i] > 0:
                b = dets[j].bbox
                res.append((meta.names[i], dets[j].prob[i], (b.x, b.y, b.w, b.h)))
    res = sorted(res, key=lambda x: -x[1])
    free_image(im)
    free_detections(dets, num)
    return res

def detect_numpy(net, meta, image, thresh=.5, hier_thresh=.5, nms=.45):
    im, arr = array_to_image(image)
    num = c_int(0)
    pnum = pointer(num)
    predict_image(net, im)
    dets = get_network_boxes(net, im.w, im.h, thresh, hier_thresh, None, 0, pnum)
    num = pnum[0]
    if nms:
        do_nms_obj(dets, num, meta.classes, nms)
    res = []
    for j in range(num):
        for i in range(meta.classes):
            if dets[j].prob[i] > 0:
                b = dets[j].bbox
                res.append((meta.names[i], dets[j].prob[i], (b.x, b.y, b.w, b.h)))
    res = sorted(res, key=lambda x: -x[1])
    free_detections(dets, num)
    return res

if __name__ == "__main__":
    #net = load_net("cfg/densenet201.cfg", "/home/pjreddie/trained/densenet201.weights", 0)
    #im = load_image("data/wolf.jpg", 0, 0)
    #meta = load_meta("cfg/imagenet1k.data")
    #r = classify(net, meta, im)
    #print r[:10]
    net = load_net("cfg/tiny-yolo.cfg", "tiny-yolo.weights", 0)
    meta = load_meta("cfg/coco.data")
    import scipy.misc
    import time
    '''
    t_start = time.time()
    for ii in range(100):
        r = detect(net, meta, 'data/dog.jpg')
    print(time.time() - t_start)
    print(r)
    image = scipy.misc.imread('data/dog.jpg')
    for ii in range(100):
        scipy.misc.imsave('/tmp/image.jpg', image)
        r = detect(net, meta, '/tmp/image.jpg')
    print(time.time() - t_start)
    print(r)
    '''
    image = scipy.misc.imread('data/dog.jpg')
    t_start = time.time()
    for ii in range(100):
        r = detect_numpy(net, meta, image)
    print(time.time() - t_start)
    print(r)
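The ctypes declarations above use `c_char_p` for file paths, which under Python 3 accepts `bytes` rather than `str`. The sketch below shows a small helper, with the hypothetical name `to_bytes`, that could encode paths before they are passed to `load_net`, `load_meta`, or `load_image`. It is not part of darknet.py.

```python
from ctypes import c_char_p


def to_bytes(path, encoding="utf-8"):
    """Encode a str path to bytes; pass bytes through unchanged."""
    return path if isinstance(path, bytes) else path.encode(encoding)


# c_char_p accepts the encoded value under Python 3.
arg = c_char_p(to_bytes("cfg/hand.data"))
print(arg.value)
```

Under Python 3 the label names read back from `meta.names` are likewise `bytes` and can be turned into text with `.decode()`.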

Hand detection prediction code: examples/yolov3_detector_hand.py

#coding=utf-8
import cv2
import sys, os
sys.path.append('/export/songhongwei/code/darknet/python/')
import scipy.misc
import darknet as dn
from PIL import Image

class Yolov3HandDetector:
    hand_cfg_path = "/export/songhongwei/code/darknet/cfg/yolov3_hand.cfg"
    hand_weights_path = "/export/songhongwei/code/darknet/backup/yolov3_hand_150000.weights"
    hand_data_path = "/export/songhongwei/code/darknet/cfg/hand.data"

    def __init__(self):
        # Darknet
        self.net = dn.load_net(self.hand_cfg_path, self.hand_weights_path, 0)
        self.meta = dn.load_meta(self.hand_data_path)

    def img_cv2pil(self, cv_im):
        # OpenCV loads images as BGR; convert to RGB for PIL
        pil_im = Image.fromarray(cv2.cvtColor(cv_im, cv2.COLOR_BGR2RGB))
        return pil_im

    def detect_hand(self,cv_im):
        im = self.img_cv2pil(cv_im)
        # convert the PIL image to a numpy array
        # (scipy.misc.fromimage is deprecated in newer SciPy releases)
        im = scipy.misc.fromimage(im)
        res_infos = dn.detect_numpy(self.net, self.meta, im)
        bbox_map = {}
        for label, probability, bbox in res_infos:
            if label not in bbox_map:
                bbox_map[label] = []
            # bbox is (center_x, center_y, width, height); store corner coordinates
            bbox_map[label].append([int(bbox[0]-bbox[2]/2),int(bbox[1]-bbox[3]/2),int(bbox[0]+bbox[2]/2),int(bbox[1]+bbox[3]/2)])
        return bbox_map

if __name__ == '__main__':
    handDetector = Yolov3HandDetector()
    pic_path = '/export/songhongwei/code/darknet/data/tmp_hand.jpg'
    cv_im = cv2.imread(pic_path)
    bbox_map = handDetector.detect_hand(cv_im)
    print(bbox_map)

Output:

{'hand': [[155, 180, 218, 265], [239, 241, 302, 291]]}

Note: the numbers in each pair of square brackets are in the format [top_left_x, top_left_y, bottom_right_x, bottom_right_y].
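The conversion from darknet's center-based box to this corner format can be isolated into a small function. The sketch below mirrors the arithmetic used in `detect_hand`; the function name `center_to_corners` is hypothetical, and the input values are illustrative only, chosen so that the result matches the first box in the output above.

```python
def center_to_corners(bbox):
    """Convert (center_x, center_y, width, height) to
    [top_left_x, top_left_y, bottom_right_x, bottom_right_y] as integers."""
    x, y, w, h = bbox
    return [int(x - w / 2), int(y - h / 2), int(x + w / 2), int(y + h / 2)]


# Illustrative input, not an actual detection result.
print(center_to_corners((186.5, 222.5, 63.0, 85.0)))  # [155, 180, 218, 265]
```

Because `int()` truncates toward zero, boxes that extend past the left or top image edge would yield negative coordinates, so callers may want to clamp the result to the image size.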