spark-shell fails to start: Yarn application has already ended! It might have been killed or unable to launch application master

阿新 • Published: 2017-08-10


spark-shell does not support YARN cluster mode, so start it in YARN client mode:

spark-shell --master=yarn --deploy-mode=client

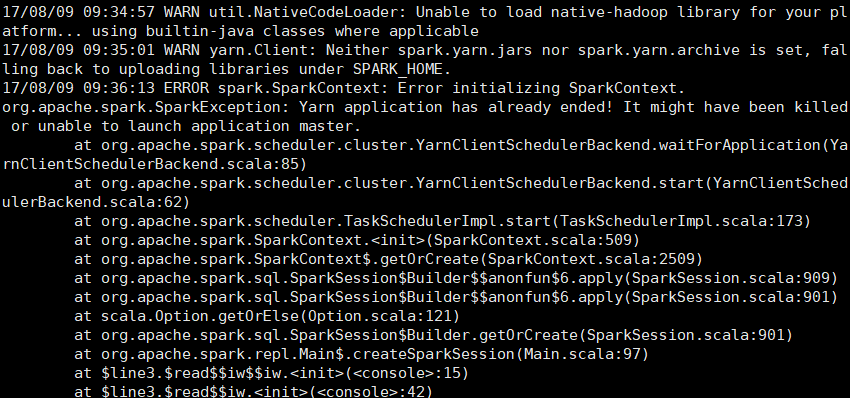

The startup log shows the following errors:

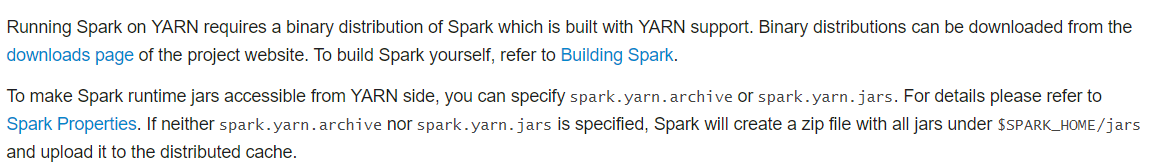

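The message "Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME" is only a warning. The official documentation explains it as follows:

Roughly: if neither spark.yarn.jars nor spark.yarn.archive is configured, Spark packs all the jars under $SPARK_HOME/jars into a zip file and uploads it to the distributed cache, so packaging and distribution happen automatically. Leaving these two parameters unset does no harm.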

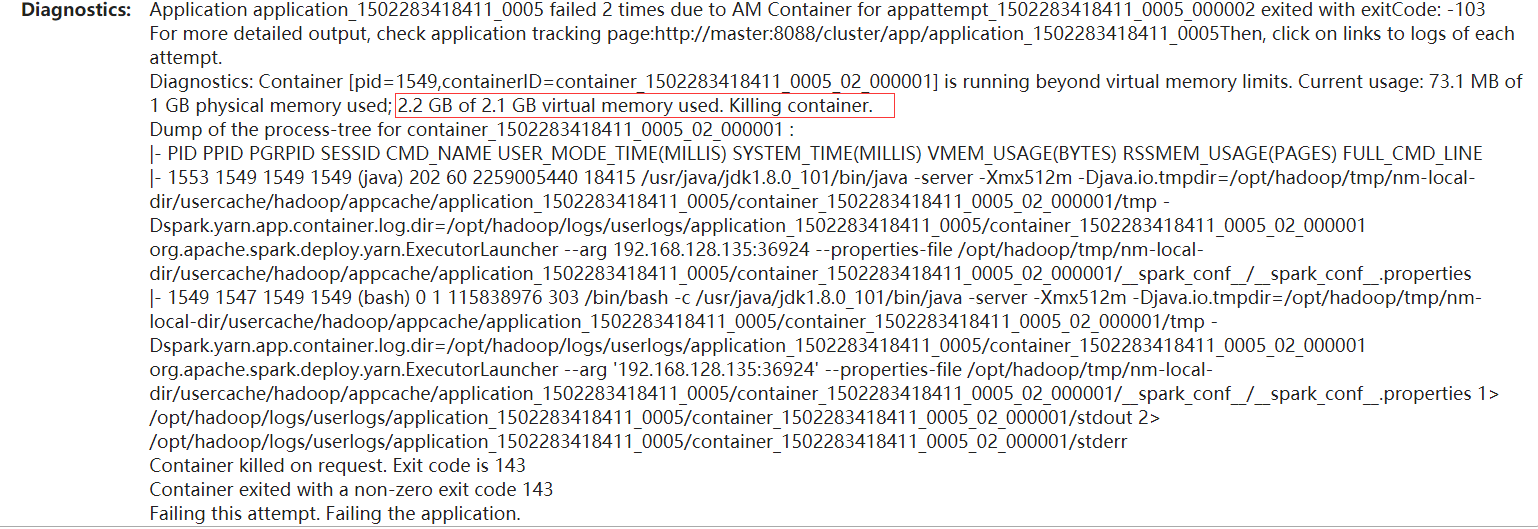

"Yarn application has already ended! It might have been killed or unable to launch application master",這個可是一個異常,打開mr管理頁面,我的是 http://192.168.128.130/8088 ,

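The key part is in the red box: the container's actual virtual memory usage, 2.2 GB, exceeded the 2.1 GB limit. Because virtual memory went over the limit, the container was killed. All the work is done inside containers, so once the container is killed nothing can run.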
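The arithmetic behind that limit can be sketched in a few lines. This is only an illustration of the check, not NodeManager code: it assumes the container was granted 1 GB of physical memory (the post only guesses at that figure), and the function names are hypothetical. The 2.1 default is the documented default of `yarn.nodemanager.vmem-pmem-ratio`.

```python
# Sketch of the virtual-memory check described above (not actual YARN code).
# Assumption: the container was granted 1 GB of physical memory.

DEFAULT_VMEM_PMEM_RATIO = 2.1  # default of yarn.nodemanager.vmem-pmem-ratio


def vmem_limit_gb(pmem_gb: float, ratio: float = DEFAULT_VMEM_PMEM_RATIO) -> float:
    """Virtual-memory ceiling for a container: physical allocation times the ratio."""
    return pmem_gb * ratio


def exceeds_vmem_limit(vmem_used_gb: float, pmem_gb: float,
                       ratio: float = DEFAULT_VMEM_PMEM_RATIO) -> bool:
    """True when the container's virtual memory usage is above its ceiling."""
    return vmem_used_gb > vmem_limit_gb(pmem_gb, ratio)


if __name__ == "__main__":
    used_gb, pmem_gb = 2.2, 1.0  # 2.2 GB is the usage reported in the post
    for ratio in (2.1, 4.0):
        limit = vmem_limit_gb(pmem_gb, ratio)
        verdict = "killed" if exceeds_vmem_limit(used_gb, pmem_gb, ratio) else "ok"
        print(f"ratio={ratio}: limit={limit:.1f} GB, used={used_gb} GB -> {verdict}")
```

With the default ratio the 2.2 GB usage is over the 2.1 GB ceiling; with a ratio of 4 the ceiling becomes 4 GB and the same usage passes.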

Solution

Add the following configuration to yarn-site.xml:

Either one of the two settings is enough.

    <!-- Added to fix the error when spark-shell runs in YARN client mode; spark-submit probably hits the same problem.
         Configuring either one of the two properties is enough, and setting both does no harm. -->
    <!-- Whether the virtual memory check is enforced. If actual virtual memory usage exceeds the limit,
         Spark in client mode may fail with "Yarn application has already ended! It might have been killed or unable to l..." -->
    <property>
      <name>yarn.nodemanager.vmem-check-enabled</name>
      <value>false</value>
      <description>Whether virtual memory limits will be enforced for containers</description>
    </property>
    <!-- Ratio of virtual memory to physical memory. The default is 2.1; physical memory presumably defaults to 1 GB,
         so the virtual memory limit is 2.1 GB. -->
    <property>
      <name>yarn.nodemanager.vmem-pmem-ratio</name>
      <value>4</value>
      <description>Ratio between virtual memory to physical memory when setting memory limits for containers</description>
    </property>
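To double-check that the edit actually landed in yarn-site.xml, something like the following may help. It is a minimal sketch: the embedded sample stands in for your real file, the helper names `read_properties` and `vmem_fix_applied` are hypothetical, and treating "ratio above 2.1" as sufficient is an assumption, since whether a given ratio is high enough depends on how much virtual memory the container really uses.

```python
import xml.etree.ElementTree as ET

# Illustrative stand-in for yarn-site.xml; replace with the text of your own file.
SAMPLE_YARN_SITE = """<configuration>
  <property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-pmem-ratio</name>
    <value>4</value>
  </property>
</configuration>
"""


def read_properties(xml_text: str) -> dict:
    """Collect the <name>/<value> pairs of a Hadoop-style configuration file."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def vmem_fix_applied(props: dict) -> bool:
    """True if the vmem check is disabled or the ratio is raised above the 2.1 default."""
    check_disabled = props.get("yarn.nodemanager.vmem-check-enabled", "true").lower() == "false"
    ratio_raised = float(props.get("yarn.nodemanager.vmem-pmem-ratio", "2.1")) > 2.1
    return check_disabled or ratio_raised


if __name__ == "__main__":
    props = read_properties(SAMPLE_YARN_SITE)
    print(props)
    print("fix applied:", vmem_fix_applied(props))
```

To run it against a real cluster, read the file's contents into a string and pass that to `read_properties`; the file's location depends on your Hadoop installation.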

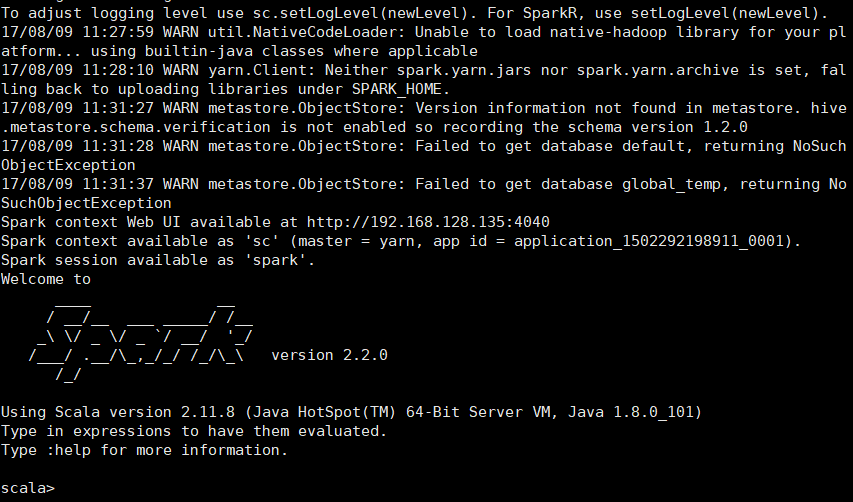

After making the change, start Hadoop again and then launch spark-shell.
