Functional Modules: (7) Detection Performance Evaluation Module (precision, recall, etc.)

阿新 • Published: 2021-01-13



1. Module Introduction

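Every algorithm should be judged against a concrete evaluation standard. If you just tell your boss that your detector is "good" or "not good", ha, that is bound to end badly. "Good" and "not good" are qualitative judgments. For a real algorithm, what counts as good and what counts as bad should be defined by quantitative criteria. For detection, the most commonly used metrics are probably precision (of the targets you detected, how many are real targets), recall (of the real targets, how many your algorithm detects), and AP (average precision, the area under the precision-recall curve) and mAP (AP averaged over all classes). The goal of this post is to give you a solid sense of where your own detection model stands. In other words: how good is the model you trained, really?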
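To make the two definitions concrete, here is a minimal sketch. It assumes each detection has already been classified as a true positive (TP) or a false positive (FP), and each missed ground-truth object has been counted as a false negative (FN). The helper names `precision_recall` and `mean_ap` are hypothetical and used for illustration only.

```python
# Hypothetical helper names, for illustration only.

def precision_recall(tp, fp, fn):
    """precision = TP / (TP + FP): share of detections that are real objects.
    recall    = TP / (TP + FN): share of real objects that were detected.
    Returns 0.0 for an empty denominator instead of dividing by zero."""
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    return precision, recall


def mean_ap(ap_per_class):
    """mAP: the unweighted mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# 10 detections, 8 of which hit a real object; 12 objects in total, so 4 are missed
p, r = precision_recall(tp=8, fp=2, fn=4)
print(f"precision={p:.3f}, recall={r:.3f}")  # precision=0.800, recall=0.667
```

This single (precision, recall) pair corresponds to one score threshold. AP summarizes the whole curve you get by sweeping that threshold.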

2. Code Implementation

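```python
import os

import numpy as np


def voc_ap(rec, prec, use_07_metric=False):
    """Compute VOC AP given precision and recall. If use_07_metric is true, uses
    the VOC 07 11-point method (default:False).
    """
    if use_07_metric:
        # 11 point metric: average of the max precision at recall >= t, t = 0.0, 0.1, ..., 1.0
        ap = 0.0
        for t in np.linspace(0.0, 1.0, 11):
            p = np.max(prec[rec >= t]) if np.sum(rec >= t) > 0 else 0.0
            ap += p / 11.0
    else:
        # all-point metric: area under the PR curve
        # add sentinel values at both ends
        mrec = np.concatenate(([0.0], rec, [1.0]))
        mpre = np.concatenate(([0.0], prec, [0.0]))
        # precision envelope: each precision becomes the max precision at this or any higher recall
        for i in range(mpre.size - 1, 0, -1):
            mpre[i - 1] = np.maximum(mpre[i - 1], mpre[i])
        # sum (delta recall) * precision at the points where recall changes
        i = np.where(mrec[1:] != mrec[:-1])[0]
        ap = np.sum((mrec[i + 1] - mrec[i]) * mpre[i + 1])
    return ap
```

I won't walk through the code in detail, since the comments already cover it. The main question is: what should your ground-truth (gt) files look like?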

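- Naming convention: `img_name.txt`, i.e. one text file per image, named after the image.

- gt format (one ground-truth box per line):

  ```
  # x1 y1 x2 y2
  965 209 1040 329
  ```

- res (detection result) format (one detection per line, confidence score first):

  ```
  # score x1 y1 x2 y2
  0.9999481 962 222 1043 331
  0.9999091 635 251 747 412
  0.9783503 1795 340 1836 402
  0.57386667 1730 305 1748 337
  ```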
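Here is a minimal, hypothetical sketch of how files in this format could be turned into precision, recall and AP. It rests on several assumptions:

- There is a single object class, because the formats carry no class label.
- gt and res live in two folders whose `img_name.txt` files have matching names.
- Matching follows the usual PASCAL VOC rule. Detections are processed in descending score order. A detection is a true positive if its best IoU is with a not-yet-matched gt box and that IoU is at least 0.5 (an assumed threshold). A second hit on an already matched gt box counts as a false positive.

`read_boxes`, `load_folder`, `iou` and `evaluate` are illustrative names, not functions from the original script.

```python
# Hypothetical helpers (illustrative names), assuming the gt/res txt formats above.
import os

import numpy as np


def read_boxes(path, has_score):
    """Parse one txt file; '#' header lines and blank lines are skipped.

    gt lines  "x1 y1 x2 y2"       -> (N, 4) array of boxes
    res lines "score x1 y1 x2 y2" -> ((N,) scores, (N, 4) boxes)
    A missing file is treated as empty (no detections for that image).
    """
    rows = []
    if os.path.exists(path):
        with open(path) as f:
            for line in f:
                line = line.strip()
                if line and not line.startswith("#"):
                    rows.append([float(v) for v in line.split()])
    arr = np.array(rows, dtype=float).reshape(-1, 5 if has_score else 4)
    return (arr[:, 0], arr[:, 1:]) if has_score else arr


def load_folder(gt_dir, res_dir):
    """Pair gt_dir/<img_name>.txt with res_dir/<img_name>.txt."""
    gts, dets = {}, []  # dets holds (score, img_name, box) tuples
    for name in sorted(os.listdir(gt_dir)):
        if name.endswith(".txt"):
            gts[name] = read_boxes(os.path.join(gt_dir, name), has_score=False)
            scores, boxes = read_boxes(os.path.join(res_dir, name), has_score=True)
            dets += [(float(s), name, b) for s, b in zip(scores, boxes)]
    return gts, dets


def iou(box, boxes):
    """IoU between one box and an (N, 4) array, corners as x1 y1 x2 y2 (no +1 pixel)."""
    iw = np.clip(np.minimum(box[2], boxes[:, 2]) - np.maximum(box[0], boxes[:, 0]), 0, None)
    ih = np.clip(np.minimum(box[3], boxes[:, 3]) - np.maximum(box[1], boxes[:, 1]), 0, None)
    inter = iw * ih
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def evaluate(gts, dets, iou_thresh=0.5):  # 0.5: assumed VOC-style IoU threshold
    """Single-class evaluation. Returns (precision, recall, AP) over all detections."""
    dets = sorted(dets, key=lambda d: -d[0])  # highest score first
    if not dets:
        return 0.0, 0.0, 0.0
    n_gt = sum(len(b) for b in gts.values())
    used = {name: np.zeros(len(b), dtype=bool) for name, b in gts.items()}
    tp = np.zeros(len(dets))
    for k, (_, name, box) in enumerate(dets):
        g = gts.get(name, np.zeros((0, 4)))
        if len(g) == 0:
            continue  # nothing to match: false positive
        overlaps = iou(box, g)
        j = int(np.argmax(overlaps))
        if overlaps[j] >= iou_thresh and not used[name][j]:
            tp[k] = 1
            used[name][j] = True  # each gt box may be matched only once
    tp_cum = np.cumsum(tp)
    fp_cum = np.cumsum(1 - tp)
    recall = tp_cum / max(n_gt, 1)
    precision = tp_cum / (tp_cum + fp_cum)  # denominator is k + 1 >= 1
    # all-point AP: area under the monotone precision envelope
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    ap = float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))
    return float(precision[-1]), float(recall[-1]), ap
```

For example, `p, r, ap = evaluate(*load_folder("gt", "res"))` would evaluate two hypothetical folders `gt/` and `res/`. With several classes, you would run this once per class and average the per-class APs to get mAP. The sketch deliberately leaves out VOC details such as "difficult" flags and the +1 pixel convention for box areas.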

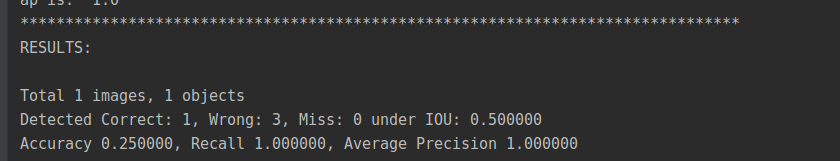

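This is the result. I added a lot of print statements to the code so the output is easy to follow. Blame the unreliable cloud storage: images keep getting corrupted, sob...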

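PS: The pandemic has been rebounding badly lately. Who would have thought COVID-19 would still be with us after a whole year? Cases abroad are growing exponentially too. It is a disaster for humanity, and perhaps looking back on this time years from now, we will see it differently. Cherish the present and take care of yourselves!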